Welcome to the Redis documentation.


Documentation

- 1: Introduction to Redis

- 1.1: Who's using Redis?

- 1.2: Redis open source governance

- 1.3: Redis release cycle

- 1.4: Redis sponsors

- 1.5: Redis license

- 1.6: Redis trademark guidelines

- 2: Getting started with Redis

- 2.1: Installing Redis

- 2.1.1: Install Redis on Linux

- 2.1.2: Install Redis on macOS

- 2.1.3: Install Redis on Windows

- 2.1.4: Install Redis from Source

- 2.2: Redis FAQ

- 3: Clients

- 4: Libraries

- 5: Tools

- 6: Modules

- 7: The Redis manual

- 7.1: Redis administration

- 7.2: Redis CLI

- 7.3: Client-side caching in Redis

- 7.4: Redis configuration

- 7.5: Redis data types

- 7.5.1: Data types tutorial

- 7.5.2: Redis streams

- 7.6: Key eviction

- 7.7: High availability with Redis Sentinel

- 7.8: Redis keyspace notifications

- 7.9: Redis persistence

- 7.10: Redis pipelining

- 7.11: Redis programmability

- 7.11.1: Redis functions

- 7.11.2: Scripting with Lua

- 7.11.3: Redis Lua API reference

- 7.11.4: Debugging Lua scripts in Redis

- 7.12: Redis Pub/Sub

- 7.13: Redis replication

- 7.14: Scaling with Redis Cluster

- 7.15: Redis security

- 7.16: Transactions

- 7.17: Troubleshooting Redis

- 8: Redis reference

- 8.1: ARM support

- 8.2: Redis client handling

- 8.3: Redis cluster specification

- 8.4: Redis command arguments

- 8.5: Command key specifications

- 8.6: Redis command tips

- 8.7: Debugging

- 8.8: Redis and the Gopher protocol

- 8.9: Redis internals

- 8.9.1: Event library

- 8.9.2: String internals

- 8.9.3: Virtual memory (deprecated)

- 8.9.4: Redis design draft #2 (historical)

- 8.10: Redis modules API

- 8.10.1: Modules API reference

- 8.10.2: Redis modules and blocking commands

- 8.10.3: Modules API for native types

- 8.11: Optimizing Redis

- 8.11.1: Redis benchmark

- 8.11.2: Redis CPU profiling

- 8.11.3: Diagnosing latency issues

- 8.11.4: Redis latency monitoring

- 8.11.5: Memory optimization

- 8.12: Redis programming patterns

- 8.12.1: Bulk loading

- 8.12.2: Distributed Locks with Redis

- 8.12.3: Secondary indexing

- 8.12.4: Redis patterns example

- 8.13: RESP protocol spec

- 8.14: Redis signal handling

- 8.15: Sentinel client spec

- 9: Redis Stack

- 9.1: Get started with Redis Stack

- 9.1.1: Install Redis Stack

- 9.1.1.1: Install Redis Stack with binaries

- 9.1.1.2: Run Redis Stack on Docker

- 9.1.1.3: Install Redis Stack on Linux

- 9.1.1.4: Install Redis Stack on macOS

- 9.1.2: Redis Stack clients

- 9.1.3: Redis Stack tutorials

- 9.1.3.1: Redis OM .NET

- 9.1.3.2: Redis OM for Node.js

- 9.1.3.3: Redis OM Python

- 9.1.3.4: Redis OM Spring

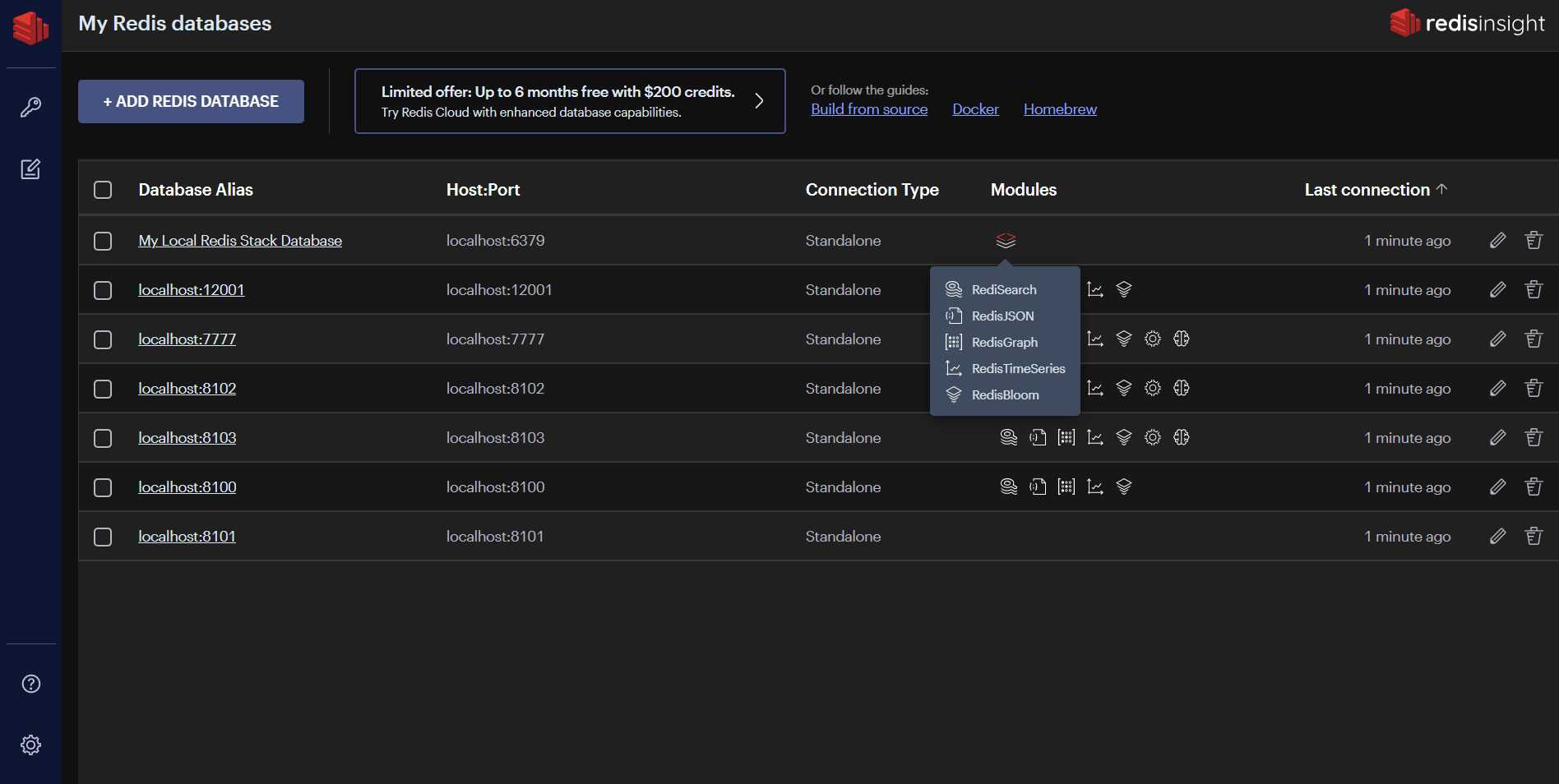

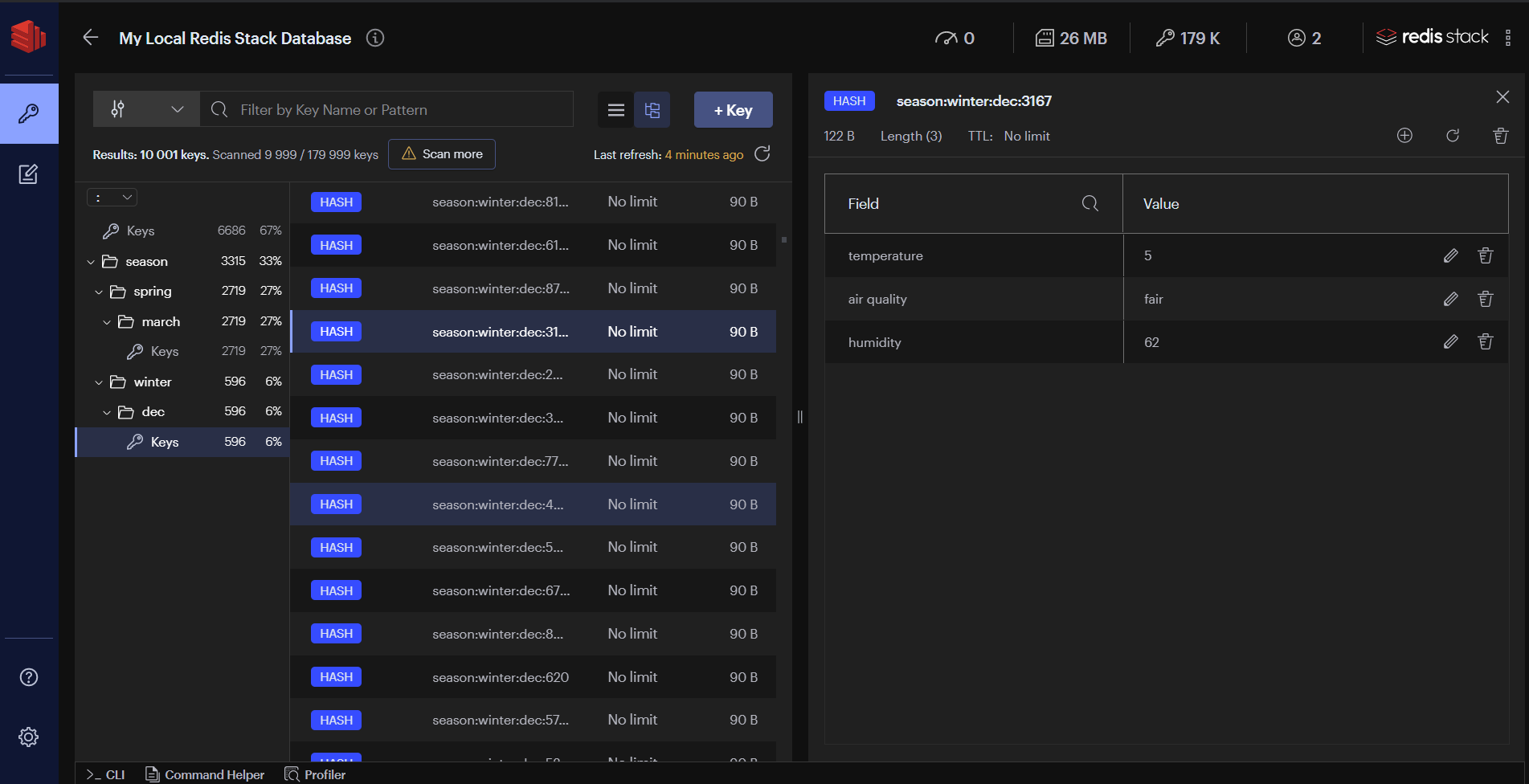

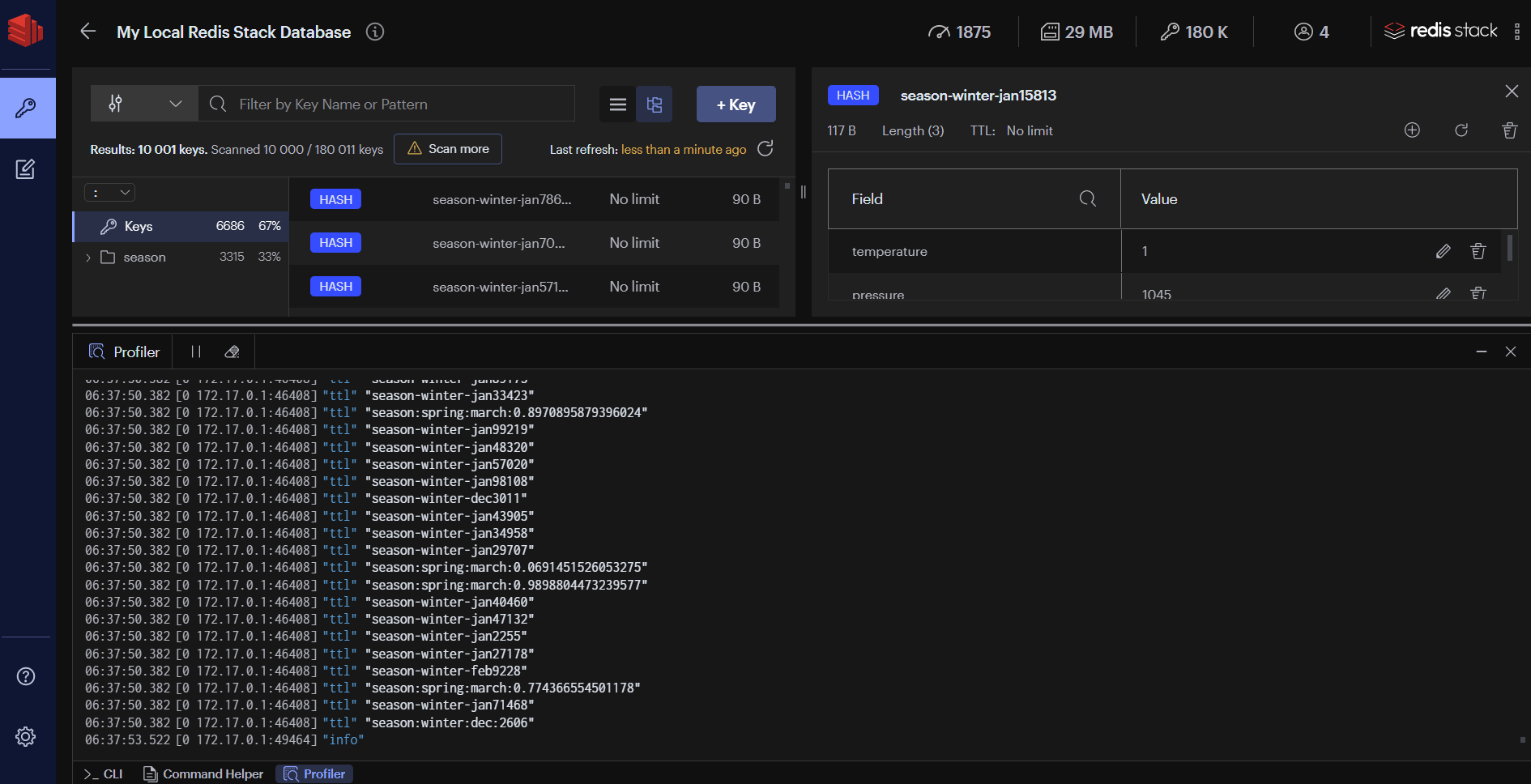

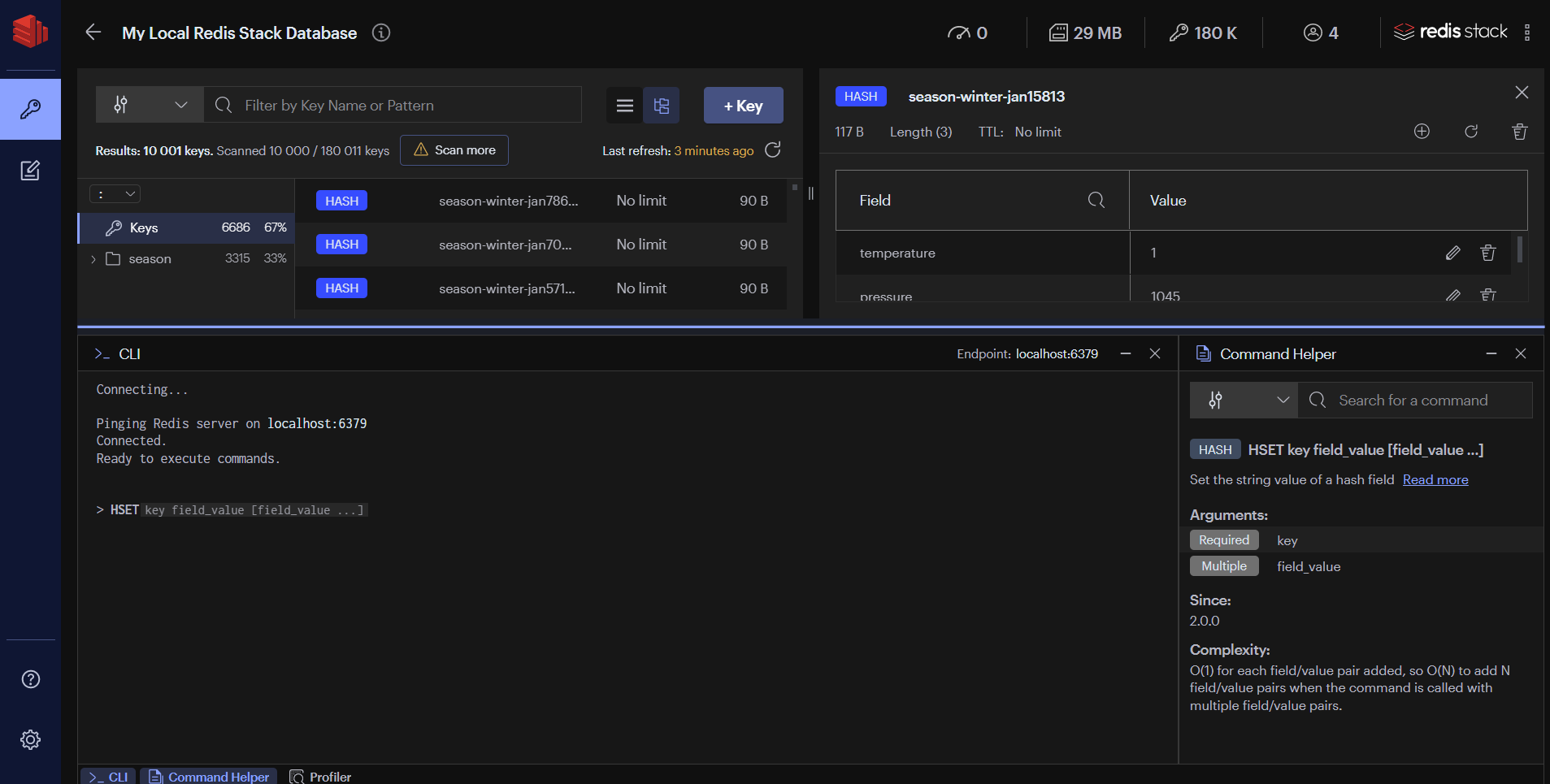

- 9.2: RedisInsight

- 9.3: RediSearch

- 9.3.1: Commands

- 9.3.2: Quick start

- 9.3.3: Configuration

- 9.3.4: Developer notes

- 9.3.5: Client Libraries

- 9.3.6: Administration Guide

- 9.3.6.1: General Administration

- 9.3.6.2: Upgrade to 2.0

- 9.3.7: Reference

- 9.3.7.1: Query syntax

- 9.3.7.2: Stop-words

- 9.3.7.3: Aggregations

- 9.3.7.4: Tokenization

- 9.3.7.5: Sorting

- 9.3.7.6: Tags

- 9.3.7.7: Highlighting

- 9.3.7.8: Scoring

- 9.3.7.9: Extensions

- 9.3.7.10: Stemming

- 9.3.7.11: Synonym

- 9.3.7.12: Payload

- 9.3.7.13: Spellchecking

- 9.3.7.14: Phonetic

- 9.3.7.15: Vector similarity

- 9.3.8: Design Documents

- 9.3.8.1: Internal design

- 9.3.8.2: Technical overview

- 9.3.8.3: Garbage collection

- 9.3.8.4: Document Indexing

- 9.3.9: Indexing JSON documents

- 9.3.10: Chinese

- 9.4: RedisJSON

- 9.4.1: Commands

- 9.4.2: Search/Indexing JSON documents

- 9.4.3: Path

- 9.4.4: Client Libraries

- 9.4.5: Performance

- 9.4.6: RedisJSON RAM Usage

- 9.4.7: Developer notes

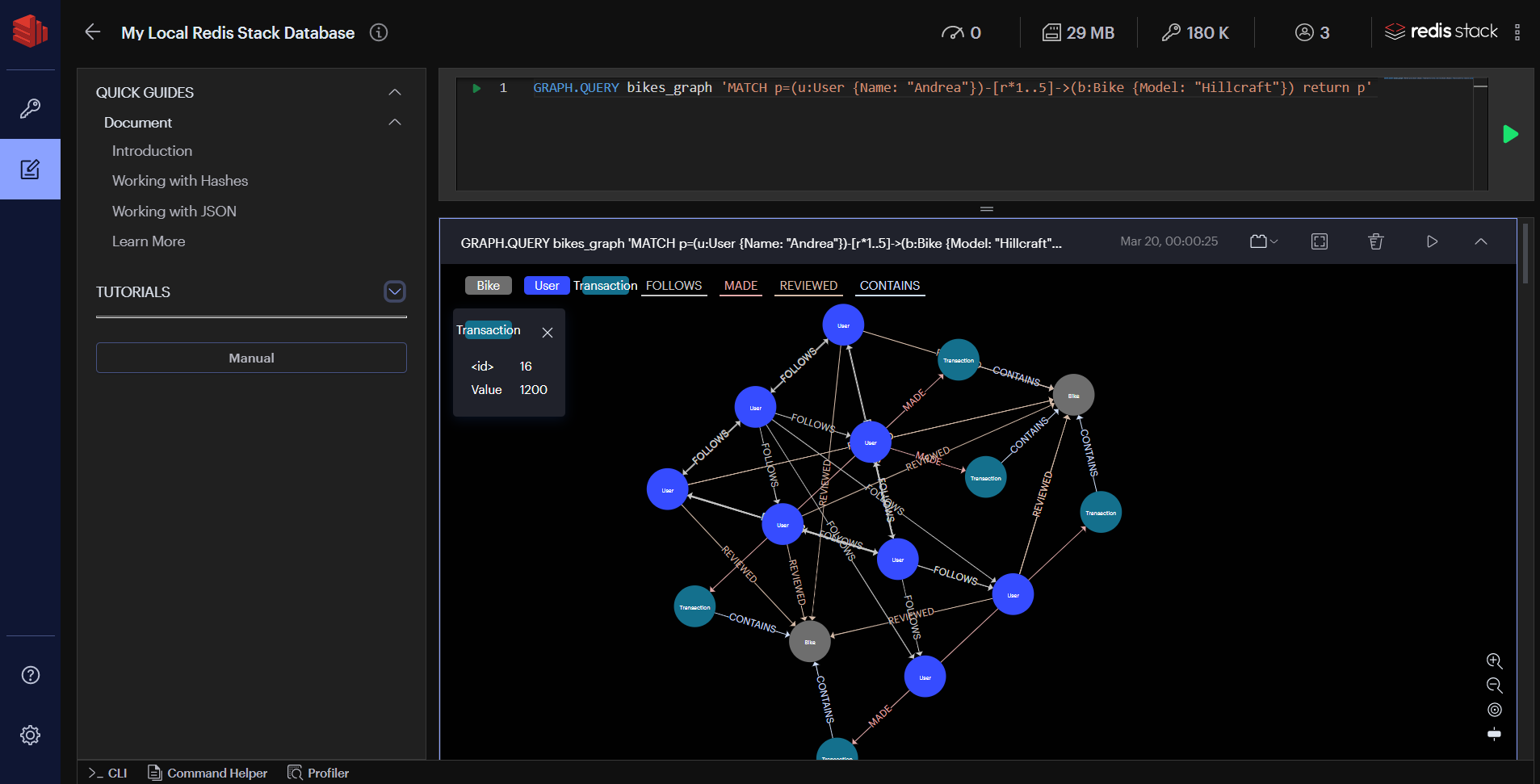

- 9.5: RedisGraph

- 9.5.1: Commands

- 9.5.2: RedisGraph Client Libraries

- 9.5.3: Run-time Configuration

- 9.5.4: RedisGraph: A High Performance In-Memory Graph Database

- 9.5.4.1: Technical specification for writing RedisGraph client libraries

- 9.5.4.2: RedisGraph Result Set Structure

- 9.5.4.3: Implementation details for the GRAPH.BULK endpoint

- 9.5.5: RedisGraph Data Types

- 9.5.6: Cypher Coverage

- 9.5.7: References

- 9.5.8: Known limitations

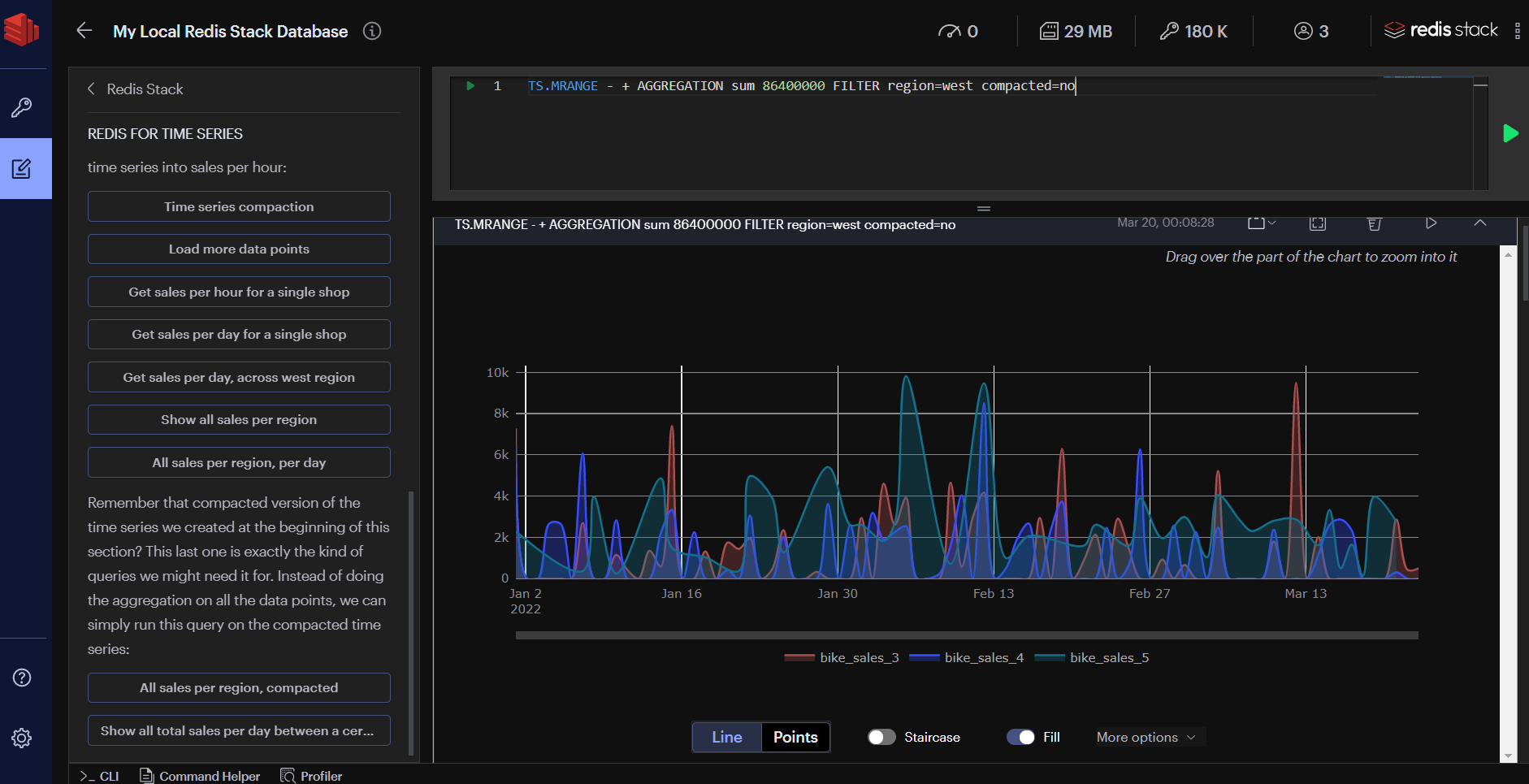

- 9.6: RedisTimeSeries

- 9.6.1: Commands

- 9.6.2: Quickstart

- 9.6.3: Configuration

- 9.6.4: Development

- 9.6.5: Clients

- 9.6.6: Reference

- 9.7: RedisBloom

- 9.7.1: Commands

- 9.7.2: Quick start

- 9.7.3: Client Libraries

- 9.7.4: Configuration

- 9.8: Redis Stack License

1 - Introduction to Redis

Redis is an open source (BSD licensed), in-memory data structure store used as a database, cache, message broker, and streaming engine. Redis provides data structures such as strings, hashes, lists, sets, sorted sets with range queries, bitmaps, hyperloglogs, geospatial indexes, and streams. Redis has built-in replication, Lua scripting, LRU eviction, transactions, and different levels of on-disk persistence, and provides high availability via Redis Sentinel and automatic partitioning with Redis Cluster.

You can run atomic operations on these types, like appending to a string; incrementing the value in a hash; pushing an element to a list; computing set intersection, union and difference; or getting the member with highest ranking in a sorted set.

To achieve top performance, Redis works with an in-memory dataset. Depending on your use case, Redis can persist your data either by periodically dumping the dataset to disk or by appending each command to a disk-based log. You can also disable persistence if you just need a feature-rich, networked, in-memory cache.

Redis supports asynchronous replication, with fast non-blocking synchronization and auto-reconnection with partial resynchronization on net split.

Redis also includes:

- Transactions

- Pub/Sub

- Lua scripting

- Keys with a limited time-to-live

- LRU eviction of keys

- Automatic failover

You can use Redis from most programming languages.

Redis is written in ANSI C and works on most POSIX systems like Linux, *BSD, and Mac OS X, without external dependencies. Linux and OS X are the two operating systems where Redis is developed and tested the most, and we recommend using Linux for deployment. Redis may work in Solaris-derived systems like SmartOS, but support is best effort. There is no official support for Windows builds.

1.1 - Who's using Redis?

A list of well known companies using Redis:

And many others! techstacks.io maintains a list of popular sites using Redis.

1.2 - Redis open source governance

From 2009 to 2020, Salvatore Sanfilippo built, led, and maintained the Redis open source project. During this time, Redis had no formal governance structure, operating primarily as a BDFL-style project.

As Redis grew, matured, and expanded its user base, it became increasingly important to form a sustainable structure for its ongoing development and maintenance. Salvatore and the core Redis contributors wanted to ensure the project’s continuity and reflect its larger community. With this in mind, a new governance structure was adopted.

Current governance structure

Starting on June 30, 2020, Redis adopted a light governance model that matches the current size of the project and minimizes the changes from its earlier model. The governance model is intended to be a meritocracy, aiming to empower individuals who demonstrate a long-term commitment and make significant contributions.

The Redis core team

Salvatore Sanfilippo named two successors to take over and lead the Redis project: Yossi Gottlieb (yossigo) and Oran Agra (oranagra).

With the backing and blessing of Redis Ltd., we took this opportunity to create a more open, scalable, and community-driven “core team” structure to run the project. The core team consists of members selected based on demonstrated, long-term personal involvement and contributions.

The current core team members are:

- Project Lead: Yossi Gottlieb (yossigo) from Redis Ltd.

- Project Lead: Oran Agra (oranagra) from Redis Ltd.

- Community Lead: Itamar Haber (itamarhaber) from Redis Ltd.

- Member: Zhao Zhao (soloestoy) from Alibaba

- Member: Madelyn Olson (madolson) from Amazon Web Services

The Redis core team members serve the Redis open source project and community. They are expected to set a good example of behavior, culture, and tone in accordance with the adopted Code of Conduct. They should also consider and act upon the best interests of the project and the community in a way that is free from foreign or conflicting interests.

The core team will be responsible for the Redis core project, which is the part of Redis that is hosted in the main Redis repository and is BSD licensed. It will also aim to maintain coordination and collaboration with other projects that make up the Redis ecosystem, including Redis clients, satellite projects, major middleware that relies on Redis, etc.

Roles and responsibilities

The core team has the following remit:

- Managing the core Redis code and documentation

- Managing new Redis releases

- Maintaining a high-level technical direction/roadmap

- Providing a fast response, including fixes/patches, to address security vulnerabilities and other major issues

- Project governance decisions and changes

- Coordination of Redis core with the rest of the Redis ecosystem

- Managing the membership of the core team

The core team aims to form and empower a community of contributors by further delegating tasks to individuals who demonstrate commitment, know-how, and skills. In particular, we hope to see greater community involvement in the following areas:

- Support, troubleshooting, and bug fixes of reported issues

- Triage of contributions/pull requests

Decision making

- Normal decisions will be made by core team members based on a lazy consensus approach: each member may vote +1 (positive) or -1 (negative). A negative vote must include thorough reasoning and better yet, an alternative proposal. The core team will always attempt to reach a full consensus rather than a majority. Examples of normal decisions:

- Day-to-day approval of pull requests and closing issues

- Opening new issues for discussion

- Major decisions that have a significant impact on the Redis architecture, design, or philosophy as well as core-team structure or membership changes should preferably be determined by full consensus. If the team is not able to achieve a full consensus, a majority vote is required. Examples of major decisions:

- Fundamental changes to the Redis core

- Adding a new data structure

- Creating a new version of RESP (Redis Serialization Protocol)

- Changes that affect backward compatibility

- Adding or changing core team members

- Project leads have a right to veto major decisions

Core team membership

- The core team is not expected to serve for life; however, long-term participation is desired to provide stability and consistency in the Redis programming style and the community.

- If a core-team member whose work is funded by Redis Ltd. must be replaced, the replacement will be designated by Redis Ltd. after consultation with the remaining core-team members.

- If a core-team member not funded by Redis Ltd. will no longer participate, for whatever reason, the other team members will select a replacement.

Community forums and communications

We want the Redis community to be as welcoming and inclusive as possible. To that end, we have adopted a Code of Conduct that we ask all community members to read and observe.

We encourage all significant communications to be public, asynchronous, archived, and open for the community to actively participate in, using the channels described here. The exception is sensitive security issues that require resolution prior to public disclosure.

To contact the core team about sensitive matters, such as misconduct or security issues, please email redis@redis.io.

New Redis repository and commits approval process

The Redis core source repository is hosted under https://github.com/redis/redis. Our target is to eventually host everything (the Redis core source and other ecosystem projects) under the Redis GitHub organization (https://github.com/redis). Commits to the Redis source repository will require code review, approval of at least one core-team member who is not the author of the commit, and no objections.

Project and development updates

Stay connected to the project and the community! For project and community updates, follow the project channels. Development announcements will be made via the Redis mailing list.

Updates to these governance rules

Any substantial changes to these rules will be treated as a major decision. Minor changes or ministerial corrections will be treated as normal decisions.

1.3 - Redis release cycle

Redis is system software, and specifically system software that holds user data, so it is among the most critical pieces of a software stack.

For this reason, the Redis release cycle favors highly stable releases, even at the cost of slower cycles.

New releases are published in the Redis GitHub repository and are also available for download. Announcements are sent to the Redis mailing list and by @redisfeed on Twitter.

Release cycle

A given version of Redis can be at three different levels of stability:

- Unstable

- Release Candidate

- Stable

Unstable tree

The unstable version of Redis is located in the unstable branch in the Redis GitHub repository.

This branch is the source tree where most of the new features are under development. unstable is not considered production-ready: it may contain critical bugs, incomplete features, and is potentially unstable.

However, we try hard to make sure that even the unstable branch is usable most of the time in a development environment without significant issues.

Release candidate

New minor and major versions of Redis begin as forks of the unstable branch. The forked branch's name is the target release.

For example, when Redis 6.0 was released as a release candidate, the unstable branch was forked into the 6.0 branch. The new branch is the release candidate (RC) for that version.

Bug fixes and new features that can be stabilized during the release's time frame are committed to the unstable branch and backported to the release candidate branch. The unstable branch may include additional work that is not a part of the release candidate and is scheduled for future releases.

The first release candidate, or RC1, is released once it can be used for development purposes and for testing the new version. At this stage, most of the new features and changes the new version brings are ready for review, and the release's purpose is collecting the public's feedback.

Subsequent release candidates are released every three weeks or so, primarily for fixing bugs. These may also add new features and introduce changes, but at a decreasing rate and decreasing potential risk towards the final release candidate.

Stable tree

Once development has ended and the frequency of critical bug reports for the release candidate wanes, it is ready for the final release. At this point, the release is marked as stable and is released with "0" as its patch-level version.

Versioning

Stable releases liberally follow the usual major.minor.patch semantic versioning schema. The primary goal is to provide explicit guarantees regarding backward compatibility.

Patch-Level versions

Patches primarily consist of bug fixes and very rarely introduce any compatibility issues.

Upgrading from a previous patch-level version is almost always safe and seamless.

New features and configuration directives may be added, or default values changed, as long as these don’t carry significant impacts or introduce operations-related issues.

Minor versions

Minor versions usually deliver maturity and extended functionality.

Upgrading between minor versions does not introduce any application-level compatibility issues.

Minor releases may include new commands and data types that introduce operations-related incompatibilities, including changes in data persistence format and replication protocol.

Major versions

Major versions introduce new capabilities and significant changes.

Ideally, these don't introduce application-level compatibility issues.
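As a rough illustration of the major.minor.patch scheme described above, the following Python sketch parses a version string and reports which level changes between two versions. The helper names (`parse_version`, `upgrade_kind`) and the version numbers are hypothetical and chosen only for this example; they are not part of Redis.

```python
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int


def parse_version(text: str) -> Version:
    """Parse a 'major.minor.patch' string such as '6.0.0' (illustrative helper)."""
    major, minor, patch = (int(part) for part in text.split("."))
    return Version(major, minor, patch)


def upgrade_kind(current: str, target: str) -> str:
    """Return the highest level that differs: 'major', 'minor', 'patch', or 'none'."""
    old, new = parse_version(current), parse_version(target)
    if new.major != old.major:
        return "major"
    if new.minor != old.minor:
        return "minor"
    if new.patch != old.patch:
        return "patch"
    return "none"


# Hypothetical version numbers, used only to exercise the helper.
print(upgrade_kind("6.0.9", "6.0.16"))  # patch
print(upgrade_kind("6.0.16", "6.2.0"))  # minor
print(upgrade_kind("6.2.0", "7.0.0"))   # major
```

A "patch" result corresponds to the upgrades described above as almost always safe and seamless, while "minor" and "major" results are the ones where operations-related changes, such as persistence format or replication protocol, are worth reviewing before upgrading.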

Release schedule

A new major version is planned for release once a year.

Generally, every major release is followed by a minor version after six months.

Patches are released as needed to fix high-urgency issues, or once a stable version accumulates enough fixes to justify it.

For contacting the core team on sensitive matters and security issues, please email redis@redis.io.

Support

As a rule, older versions are not supported as we try very hard to make the Redis API mostly backward compatible.

Upgrading to newer versions is the recommended approach and is usually trivial.

The latest stable release is always fully supported and maintained.

Two additional versions receive maintenance only, meaning that only fixes for critical bugs and major security issues are committed and released as patches:

- The previous minor version of the latest stable release.

- The previous stable major release.

For example, consider the following hypothetical versions: 1.2, 2.0, 2.2, 3.0, 3.2.

When version 2.2 is the latest stable release, both 2.0 and 1.2 are maintained.

Once version 3.0.0 replaces 2.2 as the latest stable, versions 2.0 and 2.2 are maintained, whereas version 1.x reaches its end of life.

This process repeats with version 3.2.0, after which only versions 2.2 and 3.0 are maintained.

The above are guidelines rather than rules set in stone and will not replace common sense.
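The hypothetical example above can be sketched in Python. This sketch assumes one reading that is consistent with all three cases in the example: the latest stable release is fully supported, and the two releases immediately preceding it receive maintenance. The function name `maintained_versions` and the returned labels are illustrative, not an official policy implementation.

```python
from typing import Dict, List


def maintained_versions(stable_releases: List[str]) -> Dict[str, List[str]]:
    """Split major.minor releases into support tiers.

    Assumption (not stated verbatim in the policy): the two releases that
    immediately precede the latest stable release are the maintained ones.
    """
    # Sort numerically so that, for example, "2.10" would come after "2.2".
    ordered = sorted(
        stable_releases,
        key=lambda v: tuple(int(part) for part in v.split(".")),
    )
    return {
        "supported": ordered[-1:],       # latest stable release
        "maintenance": ordered[-3:-1],   # two releases before the latest
        "end_of_life": ordered[:-3],     # everything older
    }


print(maintained_versions(["1.2", "2.0", "2.2"]))
# {'supported': ['2.2'], 'maintenance': ['1.2', '2.0'], 'end_of_life': []}
print(maintained_versions(["1.2", "2.0", "2.2", "3.0"]))
# {'supported': ['3.0'], 'maintenance': ['2.0', '2.2'], 'end_of_life': ['1.2']}
```

Because the guidelines are explicitly not set in stone, a sketch like this is only useful as a first approximation of what to expect for a given release history.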

1.4 - Redis sponsors

From 2015 to 2020, Salvatore Sanfilippo's work on Redis was sponsored by Redis Ltd. Since June 2020, Redis Ltd. has sponsored the governance of Redis. Redis Ltd. also sponsors the hosting and maintenance of redis.io.

Past sponsorships:

- The Shuttleworth Foundation donated 5000 USD to the Redis project in the form of a flash grant.

- From May 2013 to June 2015, the work Salvatore Sanfilippo did to develop Redis was sponsored by Pivotal.

- Before May 2013, the project was sponsored by VMware with the work of Salvatore Sanfilippo and Pieter Noordhuis.

- VMware and later Pivotal provided a 24 GB RAM workstation for Salvatore to run the Redis CI test and other long running tests. Later, Salvatore equipped the server with an SSD drive in order to test in the same hardware with rotating and flash drives.

- Linode, in January 2010, provided virtual machines for Redis testing in a virtualized environment.

- Slicehost, in January 2010, provided virtual machines for Redis testing in a virtualized environment.

- Citrusbyte, in December 2009, contributed part of the Virtual Memory implementation.

- Hitmeister, in December 2009, contributed part of Redis Cluster.

- Engine Yard, in December 2009, contributed blocking POP (BLPOP) and part of the Virtual Memory implementation.

Also thanks to the following people or organizations that donated to the Project:

- Emil Vladev

- Brad Jasper

- Mrkris

The Redis community is grateful to Redis Ltd., Pivotal, VMware and to the other companies and people who have donated to the Redis project. Thank you.

redis.io

Citrusbyte sponsored the creation of the official Redis logo (designed by Carlos Prioglio) and transferred its copyright to Salvatore Sanfilippo.

They also sponsored the initial implementation of this site by Damian Janowski and Michel Martens.

The redis.io domain was donated for a few years to the project by I Want My Name.

1.5 - Redis license

Redis is open source software released under the terms of the three clause BSD license. Most of the Redis source code was written and is copyrighted by Salvatore Sanfilippo and Pieter Noordhuis. A list of other contributors can be found in the git history.

The Redis trademark and logo are owned by Redis Ltd. and can be used in accordance with the Redis Trademark Guidelines.

Three clause BSD license

Every file in the Redis distribution, with the exception of the third-party files specified in the list below, contains the following license:

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

Neither the name of Redis nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

Third-party files and licenses

Redis uses source code from third parties. All this code contains a BSD or BSD-compatible license. The following is a list of third-party files and information about their copyright.

Redis uses the LZF compression library. LibLZF is copyright Marc Alexander Lehmann and is released under the terms of the two-clause BSD license.

Redis uses the sha1.c file that is copyright by Steve Reid and released under the public domain. This file is extremely popular and used among open source and proprietary code.

When compiled on Linux, Redis uses the Jemalloc allocator, which is copyright by Jason Evans, Mozilla Foundation and Facebook, Inc and is released under the two-clause BSD license.

Inside Jemalloc the file pprof is copyright Google Inc and released under the three-clause BSD license.

Inside Jemalloc the files inttypes.h, stdbool.h, stdint.h, strings.h under the msvc_compat directory are copyright Alexander Chemeris and released under the three-clause BSD license.

The libraries hiredis and linenoise, also included inside the Redis distribution, are copyright Salvatore Sanfilippo and Pieter Noordhuis and released under the terms respectively of the three-clause BSD license and two-clause BSD license.

1.6 - Redis trademark guidelines

OPEN SOURCE LICENSE VS. TRADEMARKS. The three-clause BSD license gives you the right to redistribute and use the software in source and binary forms, with or without modification, under certain conditions. However, open source licenses like the three-clause BSD license do not address trademarks. Redis trademarks and brands need to be used in a way consistent with trademark law, and that is why we have prepared this policy – to help you understand what branding is allowed or required when using our software.

PURPOSE. To outline the policy and guidelines for using the Redis trademark (“Mark”) and logo (“Logo”).

WHY IS THIS IMPORTANT? The Mark and Logo are symbols of the quality and community support associated with the open source Redis. Trademarks protect not only its owners, but its users and the entire open source community. Our community members need to know that they can rely on the quality represented by the brand. No one should use the Mark or Logo in any way that misleads anyone, either directly or by omission, or in any way that is likely to confuse or take advantage of the community, or constitutes unfair competition. For example, you cannot say you are distributing Redis software when you are distributing a modified version of Redis open source, because people will be confused when they are not getting the same features and functionality they would get if they downloaded the software directly from Redis, or will think that the modified software is endorsed or sponsored by us or the community. You also cannot use the Mark or Logo on your website or in connection with any services in a way that suggests that your website is an official Redis website or service, or that suggests that we endorse your website or services.

PROPER USE OF THE REDIS TRADEMARKS AND LOGO. You may do any of the following:

- a. When you use an unaltered, unmodified copy of open source Redis downloaded from https://redis.io (the “Software”) as a data source for your application, you may use the Mark and Logo to identify your use. The open source Redis software combined with, or integrated into, any other software program, including but not limited to automation software for offering Redis as a cloud service or orchestration software for offering Redis in containers is considered “modified” Redis software and does not entitle you to use the Mark or the Logo, except in a case of nominative use, as described below. Integrating the Software with other software or service can introduce performance or quality control problems that can devalue the goodwill in the Redis brand and we want to be sure that such problems do not confuse users as to the quality of the product.

- b. The Software is developed by and for the Redis community. If you are engaged in community advocacy, you can use the Mark but not the Logo in the context of showing support for the open source Redis project, provided that:

- i. The Mark is used in a manner consistent with this policy;

- ii. There is no commercial purpose behind the use and you are not offering Redis commercially under the same domain name;

- iii. There is no suggestion that you are the creator or source of Redis, or that your project is approved, sponsored, or affiliated with us or the community; and

- iv. You must include attribution according to section 6.a. below.

- c. Nominative Use: Trademark law permits third parties the use of a mark to identify the trademark holder’s product or service so long as such use is not likely to cause unnecessary consumer or public confusion. This is referred to as a nominative or fair use. When you distribute, or offer an altered, modified or combined copy of the Software, such as in the case of a cloud service or a container service, you may engage in “nominative use” of the Mark, but this does not allow you to use the Logo.

- d. Examples of Nominative Use:

- i. Offering an XYZ software, which is an altered, modified or combined copy of the open source Redis software, including but not limited to offering Redis as a cloud service or as a container service, and while fully complying with the open source Redis API - you may only name it "XYZ for Redis®" or state that "XYZ software is compatible with the Redis® API". No other term or description of your software is allowed.

- ii. Offering an ABC application, which uses an altered, modified or combined copy of the open source Redis software as a data source, including but not limited to using Redis as a cloud service or a container service, and while the modified Redis fully complies with the open source Redis API - you may only state that "ABC application is using XYZ for Redis®", or "ABC application is using a software which is compatible with the Redis® API". No other term or description of your application is allowed.

- iii. If, however, the offered XYZ software, or service based thereof, or application ABC uses an altered, modified or combined copy of the open source Redis software that does not fully comply with the open source Redis API - you may not use the Mark and Logo at all.

- e. In any use (or nominative use) of the Mark or the Logo as per the above, you should comply with all the provisions of Section 6 (General Use).

IMPROPER USE OF THE REDIS TRADEMARKS AND LOGOS. Any use of the Mark or Logo other than as expressly described as permitted herein is not permitted because we believe that it would likely cause impermissible public confusion. Uses of the Mark that we will likely consider infringing without permission include:

- a. Entity Names. You may not form a company, use a company name, or create a software product or service name that includes the Mark or implies that any such company is the source or sponsor of Redis. If you wish to form an entity for a user or developer group, please contact us and we will be glad to discuss a license for a suitable name;

- b. Class or Quality. You may not imply that you are providing a class or quality of Redis (e.g., "enterprise-class" or "commercial quality" or “fully managed”) in a way that implies Redis is not of that class, grade or quality, nor that other parties are not of that class, grade, or quality;

- c. False or Misleading Statements. You may not make false or misleading statements regarding your use of Redis (e.g., "we wrote the majority of the code" or "we are major contributors" or "we are committers");

- d. Domain Names and Subdomains. You must not use Redis or any confusingly similar phrase in a domain name or subdomain. For instance “www.Redishost.com” is not allowed. If you wish to use such a domain name for a user or developer group, please contact us and we will be glad to discuss a license for a suitable domain name. Because of the many persons who, unfortunately, seek to spoof, swindle or deceive the community by using confusing domain names, we must be very strict about this rule;

- e. Websites. You must not use our Mark or Logo on your website in a way that suggests that your website is an official website or that we endorse your website;

- f. Merchandise. You must not manufacture, sell or give away merchandise items, such as T-shirts and mugs, bearing the Mark or Logo, or create any mascot for Redis. If you wish to use the Mark or Logo for a user or developer group, please contact us and we will be glad to discuss a license to do this;

- g. Variations, takeoffs or abbreviations. You may not use a variation of the Mark for any purpose. For example, the following are not acceptable:

- i. Red;

- ii. MyRedis; and

- iii. RedisHost.

- h. Rebranding. You may not change the Mark or Logo on a redistributed (unmodified) Software to your own brand or logo. You may not hold yourself out as the source of the Redis software, except to the extent you have modified it as allowed under the three-clause BSD license, and you make it clear that you are the source only of the modification;

- i. Combination Marks. Do not use our Mark or Logo in combination with any other marks or logos. For example Foobar Redis, or the name of your company or product typeset to look like the Redis logo; and

- j. Web Tags. Do not use the Mark in a title or metatag of a web page to influence search engine rankings or result listings, rather than for discussion or advocacy of the Redis project.

GENERAL USE INFORMATION.

- a. Attribution. Any permitted use of the Mark or Logo, as indicated above, should comply with the following provisions:

- i. You should add the R mark (®) and an asterisk (*) to the first mention of the word "Redis" as part of or in connection with a product name;

- ii. Whenever "Redis®*" is shown - add the following legend (with an asterisk) in a noticeable and readable format: "*Redis is a trademark of Redis Ltd. Any rights therein are reserved to Redis Ltd. Any use by <company XYZ> is for referential purposes only and does not indicate any sponsorship, endorsement or affiliation between Redis and <company XYZ>"; and

- iii. Sections i. and ii. above apply to any appearance of the word "Redis" in: (a) any web page, gated or un-gated; (b) any marketing collateral, white paper, or other promotional material, whether printed or electronic; and (c) any advertisement, in any format.

- b. Capitalization. Always distinguish the Mark from surrounding text with at least initial capital letters or in all capital letters, (e.g., as Redis or REDIS).

- c. Adjective. Always use the Mark as an adjective modifying a noun, such as “the Redis software.”

- d. Do not make any changes to the Logo. This means you may not add decorative elements, change the colors, change the proportions, distort it, add elements or combine it with other logos.

NOTIFY US OF ABUSE. Do not make any changes to the Logo. This means you may not add decorative elements, change the colors, change the proportions, distort it, add elements or combine it with other logos.

MORE QUESTIONS? If you have questions about this policy, or wish to request a license for any uses that are not specifically authorized in this policy, please contact us at legal@redis.com.

2 - Getting started with Redis

This is a guide to getting started with Redis. You'll learn how to install, run, and experiment with the Redis server process.

Install Redis

How you install Redis depends on your operating system. See the guide below that best fits your needs:

Once you have Redis up and running, and can connect using redis-cli, you can continue with the steps below.

Exploring Redis with the CLI

External programs talk to Redis using a TCP socket and a Redis-specific protocol. This protocol is implemented in the Redis client libraries for the different programming languages. However, to make hacking with Redis simpler, Redis provides a command line utility that can be used to send commands to Redis. This program is called redis-cli.
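To give a feel for what a client library does under the hood, here is a minimal Python sketch of how a command can be serialized before it is written to the TCP socket. It assumes the standard RESP request format (an array of bulk strings); the helper name `encode_command` is hypothetical and not part of any Redis client.

```python
def encode_command(*args: str) -> bytes:
    """Encode a command as a RESP array of bulk strings (illustrative helper)."""
    # Array header: '*' followed by the number of arguments.
    parts = [b"*%d\r\n" % len(args)]
    for arg in args:
        data = arg.encode("utf-8")
        # Each argument is a bulk string: '$<byte length>', then the bytes.
        parts.append(b"$%d\r\n" % len(data))
        parts.append(data + b"\r\n")
    return b"".join(parts)


if __name__ == "__main__":
    print(encode_command("PING"))
    print(encode_command("SET", "mykey", "somevalue"))
```

In practice you would rarely write this yourself: redis-cli and the client libraries handle both the encoding of requests and the parsing of replies.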

The first thing to do in order to check if Redis is working properly is to send a PING command using redis-cli:

$ redis-cli ping

PONG

Running redis-cli followed by a command name and its arguments will send this command to the Redis instance running on localhost at port 6379. You can change the host and port used by redis-cli - just try the --help option to check the usage information.

Another interesting way to run redis-cli is without arguments: the program will start in interactive mode. You can type different commands and see their replies.

$ redis-cli

redis 127.0.0.1:6379> ping

PONG

redis 127.0.0.1:6379> set mykey somevalue

OK

redis 127.0.0.1:6379> get mykey

"somevalue"

At this point you are able to talk with Redis. It is the right time to pause a bit with this tutorial and start the fifteen-minute introduction to Redis data types in order to learn a few Redis commands. Otherwise, if you already know a few basic Redis commands, you can keep reading.

Securing Redis

By default Redis binds to all the interfaces and has no authentication at all. If you use Redis in a very controlled environment, separated from the external internet and in general from attackers, that's fine. However, if an unhardened Redis is exposed to the internet, it is a big security concern. If you are not 100% sure your environment is secured properly, please check the following steps in order to make Redis more secure, which are listed in order of increasing security.

- Make sure the port Redis uses to listen for connections (by default 6379 and additionally 16379 if you run Redis in cluster mode, plus 26379 for Sentinel) is firewalled, so that it is not possible to contact Redis from the outside world.

- Use a configuration file where the bind directive is set in order to guarantee that Redis listens on only the network interfaces you are using. For example only the loopback interface (127.0.0.1) if you are accessing Redis just locally from the same computer, and so forth.

- Use the requirepass option in order to add an additional layer of security so that clients will be required to authenticate using the AUTH command.

- Use spiped or another SSL tunneling software in order to encrypt traffic between Redis servers and Redis clients if your environment requires encryption.

Note that a Redis instance exposed to the internet without any security is very simple to exploit, so make sure you understand the above and apply at least a firewall layer. After the firewall is in place, try to connect with redis-cli from an external host in order to prove to yourself that the instance is actually not reachable.

Using Redis from your application

Of course, using Redis just from the command line interface is not enough, as the goal is to use it from your application. In order to do so you need to download and install a Redis client library for your programming language. You'll find a full list of clients for different languages on this page.

For instance if you happen to use the Ruby programming language our best advice is to use the Redis-rb client. You can install it using the command gem install redis.

These instructions are Ruby specific but actually many library clients for popular languages look quite similar: you create a Redis object and execute commands calling methods. A short interactive example using Ruby:

>> require 'rubygems'

=> false

>> require 'redis'

=> true

>> r = Redis.new

=> #<Redis client v4.5.1 for redis://127.0.0.1:6379/0>

>> r.ping

=> "PONG"

>> r.set('foo','bar')

=> "OK"

>> r.get('foo')

=> "bar"

Redis persistence

You can learn how Redis persistence works on this page. What is important to understand for a quick start is that, if you start Redis with the default configuration, Redis will spontaneously save the dataset only from time to time (for instance after at least five minutes if you have at least 100 changes in your data). So if you want your database to persist and be reloaded after a restart, make sure to call the SAVE command manually every time you want to force a data set snapshot. Otherwise, make sure to shut down the database using the SHUTDOWN command:

$ redis-cli shutdown

This way Redis will make sure to save the data on disk before quitting. Reading the persistence page is strongly suggested in order to better understand how Redis persistence works.
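The "save from time to time" rule described above can be pictured with a toy model. The Python sketch below is not Redis code; it only illustrates the idea of a save point that requires both a minimum elapsed time and a minimum number of changes. The function name and the single `(300, 100)` save point are assumptions that mirror the example in the text, and your configured save points may differ.

```python
def should_snapshot(seconds_since_save: float, changes: int,
                    save_points=((300, 100),)) -> bool:
    """Return True if any (seconds, min_changes) save point is satisfied.

    Toy model: a snapshot is due only when BOTH enough time has passed
    and enough changes have accumulated for at least one save point.
    """
    return any(seconds_since_save >= seconds and changes >= min_changes
               for seconds, min_changes in save_points)


if __name__ == "__main__":
    print(should_snapshot(300, 100))  # five minutes and 100 changes
    print(should_snapshot(299, 100))  # not enough time yet
    print(should_snapshot(600, 99))   # not enough changes yet
```

The model makes the practical consequence visible: a handful of writes followed by an abrupt kill of the server may never satisfy a save point, which is why an explicit SAVE or a clean SHUTDOWN matters.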

Installing Redis more properly

Running Redis from the command line is fine just to hack a bit or for development. However, at some point you'll have some actual application to run on a real server. For this kind of usage you have two different choices:

- Run Redis using screen.

- Install Redis in your Linux box in a proper way using an init script, so that after a restart everything will start again properly.

A proper install using an init script is strongly suggested. The following instructions can be used to perform a proper installation using the init script shipped with Redis version 2.4 or higher in a Debian or Ubuntu based distribution.

We assume you already copied redis-server and redis-cli executables under /usr/local/bin.

Create a directory in which to store your Redis config files and your data:

sudo mkdir /etc/redis

sudo mkdir /var/redis

- Copy the init script that you'll find in the Redis distribution under the utils directory into /etc/init.d. We suggest calling it with the name of the port where you are running this instance of Redis. For example:

sudo cp utils/redis_init_script /etc/init.d/redis_6379

- Edit the init script.

sudo vi /etc/init.d/redis_6379

Make sure to modify REDISPORT according to the port you are using. Both the pid file path and the configuration file name depend on the port number.

- Copy the template configuration file you'll find in the root directory of the Redis distribution into /etc/redis/ using the port number as name, for instance:

sudo cp redis.conf /etc/redis/6379.conf

- Create a directory inside /var/redis that will work as data and working directory for this Redis instance:

sudo mkdir /var/redis/6379

- Edit the configuration file, making sure to perform the following changes:

- Set daemonize to yes (by default it is set to no).

- Set the pidfile to /var/run/redis_6379.pid (modify the port if needed).

- Change the port accordingly. In our example it is not needed as the default port is already 6379.

- Set your preferred loglevel.

- Set the logfile to /var/log/redis_6379.log

- Set the dir to /var/redis/6379 (very important step!)

Finally add the new Redis init script to all the default runlevels using the following command:

sudo update-rc.d redis_6379 defaults

You are done! Now you can try running your instance with:

sudo /etc/init.d/redis_6379 start

Make sure that everything is working as expected:

- Try pinging your instance with redis-cli.

- Do a test save with redis-cli save and check that the dump file is correctly stored into /var/redis/6379/ (you should find a file called dump.rdb).

- Check that your Redis instance is correctly logging in the log file.

- If it's a new machine where you can try it without problems make sure that after a reboot everything is still working.

Note: In the above instructions we skipped many Redis configuration parameters that you may want to change, for instance in order to use AOF persistence instead of RDB persistence, or to set up replication, and so forth.

Make sure to read the example redis.conf file (that is heavily commented) and the other documentation you can find on this website for more information.

2.1 - Installing Redis

2.1.1 - Install Redis on Linux

Most major Linux distributions provide packages for Redis.

Install on Ubuntu

You can install recent stable versions of Redis from the official packages.redis.io APT repository. Add the repository to the apt index, update it, and then install:

curl -fsSL https://packages.redis.io/gpg | sudo gpg --dearmor -o /usr/share/keyrings/redis-archive-keyring.gpg

echo "deb [signed-by=/usr/share/keyrings/redis-archive-keyring.gpg] https://packages.redis.io/deb $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/redis.list

sudo apt-get update

sudo apt-get install redis

Install from Snapcraft

The Snapcraft store provides Redis installation packages for dozens of Linux distributions. For example, here's how to install Redis on CentOS using Snapcraft:

sudo yum install epel-release

sudo yum install snapd

sudo systemctl enable --now snapd.socket

sudo ln -s /var/lib/snapd/snap /snap

sudo snap install redis

2.1.2 - Install Redis on macOS

This guide shows you how to install Redis on macOS using Homebrew. Homebrew is the easiest way to install Redis on macOS. If you'd prefer to build Redis from the source files on macOS, see [Installing Redis from Source].

Prerequisites

First, make sure you have Homebrew installed. From the terminal, run:

$ brew --version

If this command fails, you'll need to follow the Homebrew installation instructions.

Installation

From the terminal, run:

brew install redis

This will install Redis on your system.

Starting and stopping Redis in the foreground

To test your Redis installation, you can run the redis-server executable from the command line:

redis-server

If successful, you'll see the startup logs for Redis, and Redis will be running in the foreground.

To stop Redis, enter Ctrl-C.

Starting and stopping Redis using launchd

As an alternative to running Redis in the foreground, you can also use launchd to start the process in the background:

brew services start redis

This launches Redis and restarts it at login. You can check the status of a launchd managed Redis by running the following:

brew services info redis

If the service is running, you'll see output like the following:

redis (homebrew.mxcl.redis)

Running: ✔

Loaded: ✔

User: miranda

PID: 67975

To stop the service, run:

brew services stop redis

Connect to Redis

Once Redis is running, you can test it by running redis-cli:

redis-cli

This will open the Redis REPL. Try running some commands:

127.0.0.1:6379> lpush demos redis-macOS-demo

(integer) 1

127.0.0.1:6379> rpop demos

"redis-macOS-demo"

Next steps

Once you have a running Redis instance, you may want to:

- Try the Redis CLI tutorial

- Connect using one of the Redis clients

2.1.3 - Install Redis on Windows

Redis is not officially supported on Windows. However, you can install Redis on Windows for development by following the instructions below.

To install Redis on Windows, you'll first need to enable WSL2 (Windows Subsystem for Linux). WSL2 lets you run Linux binaries natively on Windows. For this method to work, you'll need to be running Windows 10 version 2004 and higher or Windows 11.

Install or enable WSL2

Microsoft provides detailed instructions for installing WSL. Follow these instructions, and take note of the default Linux distribution it installs. This guide assumes Ubuntu.

Install Redis

Once you're running Ubuntu on Windows, you can install Redis using apt-get:

sudo apt-add-repository ppa:redislabs/redis

sudo apt-get update

sudo apt-get upgrade

sudo apt-get install redis-server

Then start the Redis server like so:

sudo service redis-server start

Connect to Redis

You can test that your Redis server is running by connecting with the Redis CLI:

redis-cli

127.0.0.1:6379> ping

PONG

2.1.4 - Install Redis from Source

You can compile and install Redis from source on a variety of platforms and operating systems including Linux and macOS. Redis has no dependencies other than a C compiler and libc.

Downloading the source files

The Redis source files are available on [this site's Download page]. You can verify the integrity of these downloads by checking them against the digests in the redis-hashes git repository.

To obtain the source files for the latest stable version of Redis from the Redis downloads site, run:

wget https://download.redis.io/redis-stable.tar.gz

Compiling Redis

To compile Redis, first extract the tarball, change to the root directory, and then run make:

tar -xzvf redis-stable.tar.gz

cd redis-stable

make

If the compile succeeds, you'll find several Redis binaries in the src directory, including:

- redis-server: the Redis Server itself

- redis-cli: the command line interface utility to talk with Redis

To install these binaries in /usr/local/bin, run:

make install

Starting and stopping Redis in the foreground

Once installed, you can start Redis by running:

redis-server

If successful, you'll see the startup logs for Redis, and Redis will be running in the foreground.

To stop Redis, enter Ctrl-C.

2.2 - Redis FAQ

How is Redis different from other key-value stores?

- Redis has a different evolution path in the key-value DBs where values can contain more complex data types, with atomic operations defined on those data types. Redis data types are closely related to fundamental data structures and are exposed to the programmer as such, without additional abstraction layers.

- Redis is an in-memory but persistent on disk database, so it represents a different trade-off where very high write and read speed is achieved with the limitation of data sets that can't be larger than memory. Another advantage of in-memory databases is that the memory representation of complex data structures is much simpler to manipulate compared to the same data structures on disk, so Redis can do a lot with little internal complexity. At the same time the two on-disk storage formats (RDB and AOF) don't need to be suitable for random access, so they are compact and always generated in an append-only fashion (even the AOF log rotation is an append-only operation, since the new version is generated from the copy of data in memory). However, this design also involves different challenges compared to traditional on-disk stores. Because the main data representation is in memory, Redis operations must be carefully handled to make sure there is always an updated version of the data set on disk.

What's the Redis memory footprint?

To give you a few examples (all obtained using 64-bit instances):

- An empty instance uses ~ 3MB of memory.

- 1 Million small Keys -> String Value pairs use ~ 85MB of memory.

- 1 Million Keys -> Hash value, representing an object with 5 fields, use ~ 160 MB of memory.

Testing your use case is trivial. Use the redis-benchmark utility to generate random data sets then check the space used with the INFO memory command.

64-bit systems will use considerably more memory than 32-bit systems to store the same keys, especially if the keys and values are small. This is because pointers take 8 bytes in 64-bit systems. But of course the advantage is that you can have a lot of memory in 64-bit systems, so in order to run large Redis servers a 64-bit system is more or less required. The alternative is sharding.
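The figures above lend themselves to a quick back-of-the-envelope estimate. The Python sketch below simply divides the quoted totals by the number of keys and scales linearly. It assumes 1 MB = 1,000,000 bytes and that memory grows linearly with the key count, both of which are simplifications; the function names are hypothetical, and a real measurement with redis-benchmark and INFO memory remains the reliable approach.

```python
MB = 1_000_000  # assumption: decimal megabytes, for round numbers


def bytes_per_key(total_mb: float, keys: int) -> float:
    """Average memory per key implied by a measured total."""
    return total_mb * MB / keys


def estimate_mb(keys: int, per_key_bytes: float) -> float:
    """Naive linear extrapolation of memory usage, in MB."""
    return keys * per_key_bytes / MB


if __name__ == "__main__":
    string_cost = bytes_per_key(85, 1_000_000)   # small string pairs
    hash_cost = bytes_per_key(160, 1_000_000)    # 5-field hashes
    print(string_cost, hash_cost)
    # Rough estimate for 10 million small string pairs.
    print(estimate_mb(10_000_000, string_cost))
```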

Why does Redis keep its entire dataset in memory?

In the past the Redis developers experimented with Virtual Memory and other systems in order to allow larger than RAM datasets, but after all we are very happy if we can do one thing well: data served from memory, disk used for storage. So for now there are no plans to create an on disk backend for Redis. Most of what Redis is, after all, is a direct result of its current design.

If your real problem is not the total RAM needed, but the fact that you need to split your data set into multiple Redis instances, please read the partitioning page in this documentation for more info.

Redis Ltd., the company sponsoring Redis development, has developed a "Redis on Flash" solution that uses a mixed RAM/flash approach for larger data sets with a biased access pattern. You may check their offering for more information, however this feature is not part of the open source Redis code base.

Can you use Redis with a disk-based database?

Yes, a common design pattern involves keeping very write-heavy small data in Redis (along with data that the Redis data structures let you model efficiently), and big blobs of data in an SQL or eventually consistent on-disk database. Similarly, Redis is sometimes used to keep in memory another copy of a subset of the same data stored in the on-disk database. This may look similar to caching, but it is actually a more advanced model, since the Redis dataset is normally updated together with the on-disk DB dataset, and not refreshed on cache misses.

How can I reduce Redis' overall memory usage?

If you can, use 32-bit Redis instances. Also make good use of small hashes, lists, sorted sets, and sets of integers, since Redis is able to represent those data types in a much more compact way in the special case of a few elements. There is more info in the Memory Optimization page.

What happens if Redis runs out of memory?

Redis has built-in protections allowing the users to set a max limit on memory usage, using the maxmemory option in the configuration file to put a limit to the memory Redis can use. If this limit is reached, Redis will start to reply with an error to write commands (but will continue to accept read-only commands).

You can also configure Redis to evict keys when the max memory limit is reached. See the [eviction policy docs] for more information on this.

Background saving fails with a fork() error on Linux?

Short answer: echo 1 > /proc/sys/vm/overcommit_memory :)

And now the long one:

The Redis background saving schema relies on the copy-on-write semantic of the fork system call in modern operating systems: Redis forks (creates a child process) that is an exact copy of the parent. The child process dumps the DB on disk and finally exits. In theory the child should use as much memory as the parent being a copy, but actually thanks to the copy-on-write semantic implemented by most modern operating systems the parent and child process will share the common memory pages. A page will be duplicated only when it changes in the child or in the parent. Since in theory all the pages may change while the child process is saving, Linux can't tell in advance how much memory the child will take, so if the overcommit_memory setting is set to zero the fork will fail unless there is as much free RAM as required to really duplicate all the parent memory pages. If you have a Redis dataset of 3 GB and just 2 GB of free memory it will fail.

Setting overcommit_memory to 1 tells Linux to relax and perform the fork in a more optimistic allocation fashion, and this is indeed what you want for Redis.

A good source to understand how Linux Virtual Memory works and other alternatives for overcommit_memory and overcommit_ratio is this classic article from Red Hat Magazine, "Understanding Virtual Memory".

You can also refer to the proc(5) man page for explanations of the available values.

Are Redis on-disk snapshots atomic?

Yes, the Redis background saving process is always forked when the server is outside of the execution of a command, so every command reported to be atomic in RAM is also atomic from the point of view of the disk snapshot.

How can Redis use multiple CPUs or cores?

It's not very frequent that CPU becomes your bottleneck with Redis, as usually Redis is either memory or network bound. For instance, when using pipelining a Redis instance running on an average Linux system can deliver 1 million requests per second, so if your application mainly uses O(N) or O(log(N)) commands, it is hardly going to use too much CPU.

However, to maximize CPU usage you can start multiple instances of Redis in the same box and treat them as different servers. At some point a single box may not be enough anyway, so if you want to use multiple CPUs you can start thinking of some way to shard earlier.

You can find more information about using multiple Redis instances in the Partitioning page.

As of version 4.0, Redis has started implementing threaded actions. For now this is limited to deleting objects in the background and blocking commands implemented via Redis modules. For subsequent releases, the plan is to make Redis more and more threaded.

What is the maximum number of keys a single Redis instance can hold? What is the maximum number of elements in a Hash, List, Set, and Sorted Set?

Redis can handle up to 2^32 keys, and was tested in practice to handle at least 250 million keys per instance.

Every hash, list, set, and sorted set, can hold 2^32 elements.

In other words your limit is likely the available memory in your system.

Why does my replica have a different number of keys than its master instance?

If you use keys with limited time to live (Redis expires) this is normal behavior. This is what happens:

- The primary generates an RDB file on the first synchronization with the replica.

- The RDB file will not include keys already expired in the primary but which are still in memory.

- These keys are still in the memory of the Redis primary, even if logically expired. They'll be considered non-existent, and their memory will be reclaimed later, either incrementally or explicitly on access. While these keys are not logically part of the dataset, they are accounted for in the INFO output and in the DBSIZE command.

- When the replica reads the RDB file generated by the primary, this set of keys will not be loaded.

Because of this, it's common for users with many expired keys to see fewer keys in the replicas. However, logically, the primary and replica will have the same content.
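The sequence above can be mimicked with a small toy model. The Python sketch below is not how Redis is implemented; it is a hypothetical `ToyPrimary` class that keeps logically expired keys in memory, counts them in its key total, and skips them when producing a snapshot for a replica. Times are passed in explicitly so the behavior is deterministic.

```python
class ToyPrimary:
    """Toy model of a primary that reclaims expired keys lazily."""

    def __init__(self):
        # key -> (value, expire_at); expire_at is None for persistent keys
        self._data = {}

    def set(self, key, value, expire_at=None):
        self._data[key] = (value, expire_at)

    def dbsize(self):
        # Counts everything still held in memory, including keys that
        # are logically expired but not yet reclaimed.
        return len(self._data)

    def rdb_snapshot(self, now):
        # The snapshot sent to a replica leaves out already expired keys.
        return {key: value
                for key, (value, expire_at) in self._data.items()
                if expire_at is None or expire_at > now}


if __name__ == "__main__":
    primary = ToyPrimary()
    primary.set("a", "1")
    primary.set("b", "2", expire_at=10)   # expired by time 50
    primary.set("c", "3", expire_at=100)  # still valid at time 50
    replica = primary.rdb_snapshot(now=50)
    print(primary.dbsize(), len(replica))
```

In this model the primary reports three keys while the replica loads two, yet both agree on which keys logically exist.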

Where does the name "Redis" come from?

Redis is an acronym that stands for REmote DIctionary Server.

Why did Salvatore Sanfilippo start the Redis project?

Salvatore originally created Redis to scale LLOOGG, a real-time log analysis tool. But after getting the basic Redis server working, he decided to share the work with other people and turn Redis into an open source project.

How is Redis pronounced?

"Redis" (/ˈrɛd-ɪs/) is pronounced like the word "red" plus the word "kiss" without the "k".

3 - Clients

4 - Libraries

5 - Tools

6 - Modules

7 - The Redis manual

7.1 - Redis administration

Redis setup hints

We suggest deploying Redis using the Linux operating system. Redis is also tested heavily on OS X, and tested from time to time on FreeBSD and OpenBSD systems. However Linux is where we do all the major stress testing, and where most production deployments are running.

- Make sure to set the Linux kernel overcommit memory setting to 1. Add vm.overcommit_memory = 1 to /etc/sysctl.conf. Then reboot or run the command sysctl vm.overcommit_memory=1 for this to take effect immediately.

- Make sure Redis won't be affected by the Linux kernel feature transparent huge pages, otherwise it will greatly and negatively impact both memory usage and latency. This is accomplished with the following command: echo madvise > /sys/kernel/mm/transparent_hugepage/enabled.

- Make sure to set up swap in your system (we suggest as much swap as memory). If Linux does not have swap and your Redis instance accidentally consumes too much memory, either Redis will crash when it is out of memory, or the Linux kernel OOM killer will kill the Redis process. When swapping is enabled Redis will perform poorly, but you'll likely notice the latency spikes and do something before it's too late.

- Set an explicit maxmemory option limit in your instance to make sure that it will report errors instead of failing when the system memory limit is nearly reached. Note that maxmemory should be set by calculating the overhead for Redis, other than data, and the fragmentation overhead. So if you think you have 10 GB of free memory, set it to 8 or 9.

- If you are using Redis in a very write-heavy application, while saving an RDB file on disk or rewriting the AOF log, Redis may use up to 2 times the memory normally used. The additional memory used is proportional to the number of memory pages modified by writes during the saving process, so it is often proportional to the number of keys (or aggregate types items) touched during this time. Make sure to size your memory accordingly.

- Use daemonize no when running under daemontools.

- Make sure to set up some non-trivial replication backlog, which must be set in proportion to the amount of memory Redis is using. In a 20 GB instance it does not make sense to have just 1 MB of backlog. The backlog will allow replicas to sync with the master instance much more easily.

- If you use replication, Redis will need to perform RDB saves even if you have persistence disabled (this doesn't apply to diskless replication). If you don't have disk usage on the master, make sure to enable diskless replication.

- If you are using replication, make sure that either your master has persistence enabled, or that it does not automatically restart on crashes. Replicas will try to maintain an exact copy of the master, so if a master restarts with an empty data set, replicas will be wiped as well.

- By default, Redis does not require any authentication and listens to all the network interfaces. This is a big security issue if you leave Redis exposed on the internet or other places where attackers can reach it. See for example this attack to see how dangerous it can be. Please check our security page and the quick start for information about how to secure Redis.

- See the LATENCY DOCTOR and MEMORY DOCTOR commands to assist in troubleshooting.

Running Redis on EC2

- Use HVM based instances, not PV based instances.

- Don't use old instance families, for example: use m3.medium with HVM instead of m1.medium with PV.

- The use of Redis persistence with EC2 EBS volumes needs to be handled with care since sometimes EBS volumes have high latency characteristics.

- You may want to try the new diskless replication if you have issues when replicas are synchronizing with the master.

Upgrading or restarting a Redis instance without downtime

Redis is designed to be a very long running process in your server.

Many configuration options can be modified without any kind of restart using the CONFIG SET command.

You can also switch from AOF to RDB snapshots persistence, or the other way around, without restarting Redis. Check the output of the CONFIG GET * command for more information.

However from time to time, a restart is mandatory. For example, in order to upgrade the Redis process to a newer version, or when you need to modify some configuration parameter that is currently not supported by the CONFIG command.

The following steps provide a way that is commonly used to avoid any downtime.

- Set up your new Redis instance as a replica for your current Redis instance. In order to do so, you need a different server, or a server that has enough RAM to keep two instances of Redis running at the same time.

- If you use a single server, make sure that the replica is started on a different port than the master instance, otherwise the replica will not be able to start at all.

- Wait for the replication initial synchronization to complete (check the replica's log file).

- Using INFO, make sure the master and replica have the same number of keys. Use redis-cli to make sure the replica is working as you wish and is replying to your commands.

- Allow writes to the replica using CONFIG SET slave-read-only no.

- Configure all your clients to use the new instance (the replica). Note that you may want to use the CLIENT PAUSE command to make sure that no client can write to the old master during the switch.

- Once you are sure that the master is no longer receiving any query (you can check this with the MONITOR command), elect the replica to master using the REPLICAOF NO ONE command, and then shut down your master.

If you are using Redis Sentinel or Redis Cluster, the simplest way to upgrade to newer versions is to upgrade one replica after the other. Then you can perform a manual failover to promote one of the upgraded replicas to master, and finally promote the last replica.

Note that Redis Cluster 4.0 is not compatible with Redis Cluster 3.2 at cluster bus protocol level, so a mass restart is needed in this case. However Redis 5 cluster bus is backward compatible with Redis 4.

7.2 - Redis CLI

The redis-cli (Redis command line interface) is a terminal program used to send commands to and read replies from the Redis server. It has two main modes: an interactive REPL (Read Eval Print Loop) mode where the user types Redis commands and receives replies, and a command mode where redis-cli is executed with additional arguments and the reply is printed to the standard output.

In interactive mode, redis-cli has basic line editing capabilities to provide a familiar typing experience.

There are several options you can use to launch the program in special modes. You can simulate a replica and print the replication stream it receives from the primary, check the latency of a Redis server and display statistics, or request an ASCII-art spectrogram of latency samples and frequencies, among many other things.

This guide will cover the different aspects of redis-cli, starting from the simplest and ending with the more advanced features.

Command line usage

To run a Redis command and receive its reply as standard output to the terminal, include the command to execute as separate arguments of redis-cli:

$ redis-cli INCR mycounter

(integer) 7

The reply of the command is "7". Since Redis replies are typed (strings, arrays, integers, nil, errors, etc.), you see the type of the reply between parentheses. This additional information may not be ideal when the output of redis-cli must be used as input of another command or redirected into a file.

redis-cli only shows additional information for human readability when it detects the standard output is a tty, or terminal. For all other outputs it will auto-enable the raw output mode, as in the following example:

$ redis-cli INCR mycounter > /tmp/output.txt

$ cat /tmp/output.txt

8

Notice that (integer) was omitted from the output since redis-cli detected the output was no longer written to the terminal. You can force raw output even on the terminal with the --raw option:

$ redis-cli --raw INCR mycounter

9

You can force human readable output when writing to a file or piping to other commands by using --no-raw.
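The same tty-detection idea is easy to reproduce in your own scripts. The Python sketch below is only an imitation of the two output styles shown above, not redis-cli's actual implementation; `format_reply` is a hypothetical helper that covers just integer and string replies.

```python
import sys


def format_reply(value, is_tty: bool) -> str:
    """Format a reply for humans on a terminal, or raw otherwise."""
    if not is_tty:
        # Raw mode: just the value, convenient for files and pipes.
        return str(value)
    if isinstance(value, int):
        # Human-readable mode annotates the reply type.
        return f"(integer) {value}"
    return f'"{value}"'


if __name__ == "__main__":
    # sys.stdout.isatty() is False when output is redirected or piped.
    print(format_reply(7, sys.stdout.isatty()))
```

Running the script directly in a terminal and then redirecting its output to a file shows the two styles side by side.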

Host, port, password and database

By default redis-cli connects to the server at the address 127.0.0.1 with port 6379.

You can change this using several command line options. To specify a different host name or an IP address, use the -h option. In order to set a different port, use -p.

$ redis-cli -h redis15.localnet.org -p 6390 PING

PONG

If your instance is password protected, the -a <password> option will perform authentication, saving the need to explicitly use the AUTH command:

$ redis-cli -a myUnguessablePazzzzzword123 PING

PONG

For safety, it is strongly advised to provide the password to redis-cli automatically via the REDISCLI_AUTH environment variable rather than on the command line, where it can end up in the shell history and the process list.
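When redis-cli is driven from a script, the same advice applies. The following Python sketch passes the password through the environment of the child process instead of its argument list. The helper names `cli_invocation` and `run_with_password` are hypothetical, and the sketch assumes redis-cli is on the PATH.

```python
import os
import subprocess


def cli_invocation(command, password, base_env=None):
    """Return (argv, env) for redis-cli with the password kept out of argv."""
    env = dict(os.environ if base_env is None else base_env)
    env["REDISCLI_AUTH"] = password  # read by redis-cli at startup
    return ["redis-cli", *command], env


def run_with_password(command, password):
    """Run a single command and return what redis-cli printed."""
    argv, env = cli_invocation(command, password)
    result = subprocess.run(argv, env=env, capture_output=True, text=True, check=True)
    return result.stdout
```

A call such as `run_with_password(["PING"], secret)` needs a reachable server; `cli_invocation` can be inspected without one.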

Finally, it's possible to send a command that operates on a database number other than the default number zero by using the -n <dbnum> option:

$ redis-cli FLUSHALL

OK

$ redis-cli -n 1 INCR a

(integer) 1

$ redis-cli -n 1 INCR a

(integer) 2

$ redis-cli -n 2 INCR a

(integer) 1

Some or all of this information can also be provided by using the -u <uri> option and the URI pattern redis://user:password@host:port/dbnum:

$ redis-cli -u redis://LJenkins:p%40ssw0rd@redis-16379.hosted.com:16379/0 PING

PONG
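Note that the password in the URI above is percent-encoded (`%40` stands for `@`). A minimal Python sketch for assembling such a URI follows; the helper name `build_redis_uri` is hypothetical and only the pattern shown above is covered.

```python
from urllib.parse import quote


def build_redis_uri(host, port=6379, user="", password="", db=0):
    """Build a redis://user:password@host:port/dbnum URI for the -u option."""
    auth = ""
    if user or password:
        # safe="" makes quote() escape reserved characters such as "@" and ":".
        auth = f"{quote(user, safe='')}:{quote(password, safe='')}@"
    return f"redis://{auth}{host}:{port}/{db}"


if __name__ == "__main__":
    print(build_redis_uri("redis-16379.hosted.com", 16379, "LJenkins", "p@ssw0rd"))
```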

SSL/TLS

By default, redis-cli uses a plain TCP connection to connect to Redis. You may enable SSL/TLS using the --tls option, along with --cacert or --cacertdir to configure a trusted root certificate bundle or directory.

If the target server requires authentication using a client side certificate, you can specify a certificate and a corresponding private key using --cert and --key.

Getting input from other programs

There are two ways you can use redis-cli to receive input from other commands via the standard input. One is to read the last argument of the command from stdin. For example, to set the Redis key net_services to the content of the file /etc/services on the local file system, use the -x option:

$ redis-cli -x SET net_services < /etc/services

OK

$ redis-cli GETRANGE net_services 0 50

"#\n# Network services, Internet style\n#\n# Note that "

In the first line of the above session, redis-cli was executed with the -x option and a file was redirected to the CLI's standard input. The content of that file became the value argument of the SET net_services command. This is useful for scripting.

A different approach is to feed redis-cli a sequence of commands written in a text file:

$ cat /tmp/commands.txt

SET item:3374 100

INCR item:3374

APPEND item:3374 xxx

GET item:3374

$ cat /tmp/commands.txt | redis-cli

OK

(integer) 101

(integer) 6

"101xxx"

All the commands in commands.txt are executed consecutively by redis-cli as if they were typed by the user in interactive mode. Strings can be quoted inside the file if needed, so that it's possible to have single arguments with spaces, newlines, or other special characters:

$ cat /tmp/commands.txt

SET arg_example "This is a single argument"

STRLEN arg_example

$ cat /tmp/commands.txt | redis-cli

OK

(integer) 25
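When such a command file is generated by a program, each argument can be quoted mechanically. The Python sketch below is one way to do that, assuming the double-quoted escape sequences `\"`, `\\`, `\n`, `\r`, `\t` and `\xHH` accepted by redis-cli; the helper names `quote_arg` and `command_line` are hypothetical.

```python
# Bytes that have a short escape sequence inside double quotes.
_ESCAPES = {
    ord('"'): '\\"',
    ord("\\"): "\\\\",
    ord("\n"): "\\n",
    ord("\r"): "\\r",
    ord("\t"): "\\t",
}


def quote_arg(text):
    """Return text as one double-quoted argument for a command file."""
    parts = []
    for byte in text.encode("utf-8"):
        if byte in _ESCAPES:
            parts.append(_ESCAPES[byte])
        elif 0x20 <= byte < 0x7F:
            parts.append(chr(byte))  # printable ASCII is kept as-is
        else:
            parts.append(f"\\x{byte:02x}")  # everything else as a hex escape
    return '"' + "".join(parts) + '"'


def command_line(*args):
    """Join quoted arguments into one line of a command file."""
    return " ".join(quote_arg(arg) for arg in args)


if __name__ == "__main__":
    print(command_line("SET", "arg_example", "This is a single argument"))
```

Quoting every argument, including the command name, keeps the helper simple; arguments without special characters could equally be written unquoted.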

Continuously run the same command

It is possible to execute a single command a specified number of times with a user-selected pause between executions. This is useful in different contexts, for example when we want to continuously monitor some key content or INFO field output, or when we want to simulate some recurring write event, such as pushing a new item into a list every 5 seconds.

This feature is controlled by two options: -r <count> and -i <delay>. The -r option states how many times to run a command and -i sets the delay between the different command calls in seconds (with the ability to specify values such as 0.1 to represent 100 milliseconds).

By default the interval (or delay) is set to 0, so commands are executed as soon as possible:

$ redis-cli -r 5 INCR counter_value

(integer) 1

(integer) 2

(integer) 3

(integer) 4

(integer) 5

To run the same command indefinitely, use -1 as the count value.

To monitor the RSS memory size over time, use the following command:

$ redis-cli -r -1 -i 1 INFO | grep rss_human

used_memory_rss_human:2.71M

used_memory_rss_human:2.73M

used_memory_rss_human:2.73M

used_memory_rss_human:2.73M

... a new line will be printed each second ...
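If the INFO output is consumed by a program rather than by grep, it can be parsed into fields first. The following Python sketch assumes the usual INFO layout of `key:value` lines grouped under `# Section` headers; the helper name `parse_info` and the sample text are illustrative only.

```python
def parse_info(text):
    """Parse INFO output into a dict, skipping section headers and blanks."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition(":")
        if sep:  # ignore lines that are not key:value pairs
            fields[key] = value
    return fields


if __name__ == "__main__":
    sample = "# Memory\r\nused_memory_rss_human:2.71M\r\n"
    print(parse_info(sample)["used_memory_rss_human"])
```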

Mass insertion of data using redis-cli

Mass insertion using redis-cli is covered in a separate page as it is a worthwhile topic itself. Please refer to our mass insertion guide.

CSV output

A CSV (Comma Separated Values) output feature exists within redis-cli to export data from Redis to an external program.

$ redis-cli LPUSH mylist a b c d

(integer) 4

$ redis-cli --csv LRANGE mylist 0 -1

"d","c","b","a"

Note that the --csv flag will only work on a single command, not the entirety of a DB as an export.
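On the receiving side, the CSV line can be read with any standard CSV parser. A minimal Python sketch, using the output of the LRANGE example above as input (the helper name `parse_csv_reply` is hypothetical):

```python
import csv
import io


def parse_csv_reply(output):
    """Parse redis-cli --csv output into a list of rows, dropping empty lines."""
    return [row for row in csv.reader(io.StringIO(output)) if row]


if __name__ == "__main__":
    print(parse_csv_reply('"d","c","b","a"\n'))
```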

Running Lua scripts

The redis-cli has extensive support for using the debugging facility of Lua scripting, available with Redis 3.2 onwards. For this feature, refer to the Redis Lua debugger documentation.

Even without using the debugger, redis-cli can be used to run scripts from a file as an argument:

$ cat /tmp/script.lua

return redis.call('SET',KEYS[1],ARGV[1])

$ redis-cli --eval /tmp/script.lua location:hastings:temp , 23

OK

The Redis EVAL command takes the list of keys the script uses, and the other non-key arguments, as different arrays. When calling EVAL you provide the number of keys as a number.

When calling redis-cli with the --eval option above, there is no need to specify the number of keys explicitly. Instead it uses the convention of separating keys and arguments with a comma, which must be passed as a separate argument with spaces around it. This is why in the above call you see location:hastings:temp , 23 as arguments.

So location:hastings:temp will populate the KEYS array, and 23 the ARGV array.
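The translation from the comma convention to a plain EVAL call can be sketched in Python as follows. This is an illustration rather than the redis-cli source: the helper name `build_eval_command` is hypothetical, and treating every argument as a key when no comma is present is an assumption about the behavior.

```python
def build_eval_command(script, cli_args):
    """Turn --eval style arguments into the argument list of an EVAL call.

    Arguments before a standalone "," are keys; the rest are ARGV values.
    """
    if "," in cli_args:
        split = cli_args.index(",")
        keys, argv = cli_args[:split], cli_args[split + 1:]
    else:
        keys, argv = list(cli_args), []  # assumption: no comma means keys only
    return ["EVAL", script, str(len(keys)), *keys, *argv]


if __name__ == "__main__":
    script = "return redis.call('SET',KEYS[1],ARGV[1])"
    print(build_eval_command(script, ["location:hastings:temp", ",", "23"]))
```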

The --eval option is useful when writing simple scripts. For more complex work, the Lua debugger is recommended. It is possible to mix the two approaches, since the debugger can also execute scripts from an external file.

Interactive mode

We have explored how to use the Redis CLI as a command line program. This is useful for scripts and certain types of testing; however, most people will spend the majority of time in redis-cli using its interactive mode.

In interactive mode the user types Redis commands at the prompt. The command is sent to the server, processed, and the reply is parsed back and rendered in a form that is simpler to read.

Nothing special is needed for running redis-cli in interactive mode - just execute it without any arguments:

$ redis-cli

127.0.0.1:6379> PING

PONG

The string 127.0.0.1:6379> is the prompt. It displays the connected Redis server instance's hostname and port.

The prompt updates as the connected server changes or when operating on a database different from the database number zero:

127.0.0.1:6379> SELECT 2

OK

127.0.0.1:6379[2]> DBSIZE

(integer) 1

127.0.0.1:6379[2]> SELECT 0

OK

127.0.0.1:6379> DBSIZE

(integer) 503
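The prompt format seen in the session above can be summarized with a small Python sketch. The function name `prompt` is hypothetical and the code only reproduces the strings shown in this guide.

```python
def prompt(host, port, db=0):
    """Return the prompt text: host:port, plus [db] for a non-zero database."""
    suffix = f"[{db}]" if db != 0 else ""
    return f"{host}:{port}{suffix}> "


if __name__ == "__main__":
    print(prompt("127.0.0.1", 6379))
    print(prompt("127.0.0.1", 6379, db=2))
```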

Handling connections and reconnections

Using the CONNECT command in interactive mode makes it possible to connect to a different instance, by specifying the hostname and port we want to connect to:

127.0.0.1:6379> CONNECT metal 6379

metal:6379> PING

PONG

As you can see the prompt changes accordingly when connecting to a different server instance.

If a connection is attempted to an instance that is unreachable, redis-cli goes into disconnected mode and attempts to reconnect with each new command:

127.0.0.1:6379> CONNECT 127.0.0.1 9999

Could not connect to Redis at 127.0.0.1:9999: Connection refused

not connected> PING

Could not connect to Redis at 127.0.0.1:9999: Connection refused

not connected> PING

Could not connect to Redis at 127.0.0.1:9999: Connection refused

Generally after a disconnection is detected, redis-cli always attempts to reconnect transparently; if the attempt fails, it shows the error and enters the disconnected state. The following is an example of disconnection and reconnection:

127.0.0.1:6379> INFO SERVER

Could not connect to Redis at 127.0.0.1:6379: Connection refused

not connected> PING

PONG

127.0.0.1:6379>

(now we are connected again)

When a reconnection is performed, redis-cli automatically re-selects the last database number selected. However, all other state about the connection is lost, such as being within a MULTI/EXEC transaction:

$ redis-cli

127.0.0.1:6379> MULTI

OK

127.0.0.1:6379> PING

QUEUED

( here the server is manually restarted )

127.0.0.1:6379> EXEC

(error) ERR EXEC without MULTI

This is usually not an issue when using redis-cli in interactive mode for testing, but you should be aware of this limitation.

Editing, history, completion and hints

Because redis-cli uses the linenoise line editing library, it always has line editing capabilities, without depending on libreadline or other optional libraries.

Command execution history can be accessed in order to avoid retyping commands by pressing the arrow keys (up and down).

The history is preserved between restarts of the CLI, in a file named .rediscli_history inside the user home directory, as specified by the HOME environment variable. It is possible to use a different history filename by setting the REDISCLI_HISTFILE environment variable, and disable it by setting it to /dev/null.
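The lookup order for the history file can be sketched in Python as follows. This follows the description above and is not taken from the redis-cli source; the helper name `history_file` is hypothetical, and returning `None` to mean "no history" is a choice made for this sketch.

```python
import posixpath


def history_file(env):
    """Resolve the history file path from a mapping of environment variables.

    Returns None when history is disabled or no location can be determined.
    """
    override = env.get("REDISCLI_HISTFILE")
    if override is not None:
        return None if override == "/dev/null" else override
    home = env.get("HOME")
    if not home:
        return None
    return posixpath.join(home, ".rediscli_history")


if __name__ == "__main__":
    import os
    print(history_file(os.environ))
```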

The redis-cli is also able to perform command-name completion by pressing the TAB key, as in the following example:

127.0.0.1:6379> Z<TAB>

127.0.0.1:6379> ZADD<TAB>

127.0.0.1:6379> ZCARD<TAB>

Once a Redis command name has been entered at the prompt, redis-cli will display syntax hints. Like command history, this behavior can be turned on and off via the redis-cli preferences.

Preferences

There are two ways to customize redis-cli behavior. The file .redisclirc in the home directory is loaded by the CLI on startup. You can override the file's default location by setting the REDISCLI_RCFILE environment variable to an alternative path. Preferences can also be set during a CLI session, in which case they will last only the duration of the session.

To set preferences, use the special :set command. The following preferences can be set, either by typing the command in the CLI or adding it to the .redisclirc file:

- :set hints - enables syntax hints

- :set nohints - disables syntax hints

Running the same command N times

It is possible to run the same command multiple times in interactive mode by prefixing the command name with a number:

127.0.0.1:6379> 5 INCR mycounter

(integer) 1

(integer) 2

(integer) 3

(integer) 4

(integer) 5

Showing help about Redis commands

redis-cli provides online help for most Redis commands, using the HELP command. The command can be used in two forms:

- HELP @<category> shows all the commands about a given category. The categories are: @generic, @string, @list, @set, @sorted_set, @hash, @pubsub, @transactions, @connection, @server, @scripting, @hyperloglog, @cluster, @geo, @stream

- HELP <commandname> shows specific help for the command given as argument.

For example in order to show help for the PFADD command, use:

127.0.0.1:6379> HELP PFADD

PFADD key element [element ...]

summary: Adds the specified elements to the specified HyperLogLog.

since: 2.8.9

Note that HELP supports TAB completion as well.

Clearing the terminal screen

Using the CLEAR command in interactive mode clears the terminal's screen.

Special modes of operation

So far we have seen two main modes of redis-cli:

- Command line execution of Redis commands.

- Interactive "REPL" usage.

The CLI performs other auxiliary tasks related to Redis that are explained in the next sections: