Redis Stack

Extend Redis with modern data models and processing engines.

Redis Stack is an extension of Redis that adds modern data models and processing engines to provide a complete developer experience.

In addition to all of the features of OSS Redis, Redis Stack supports:

- Queryable JSON documents

- Full-text search

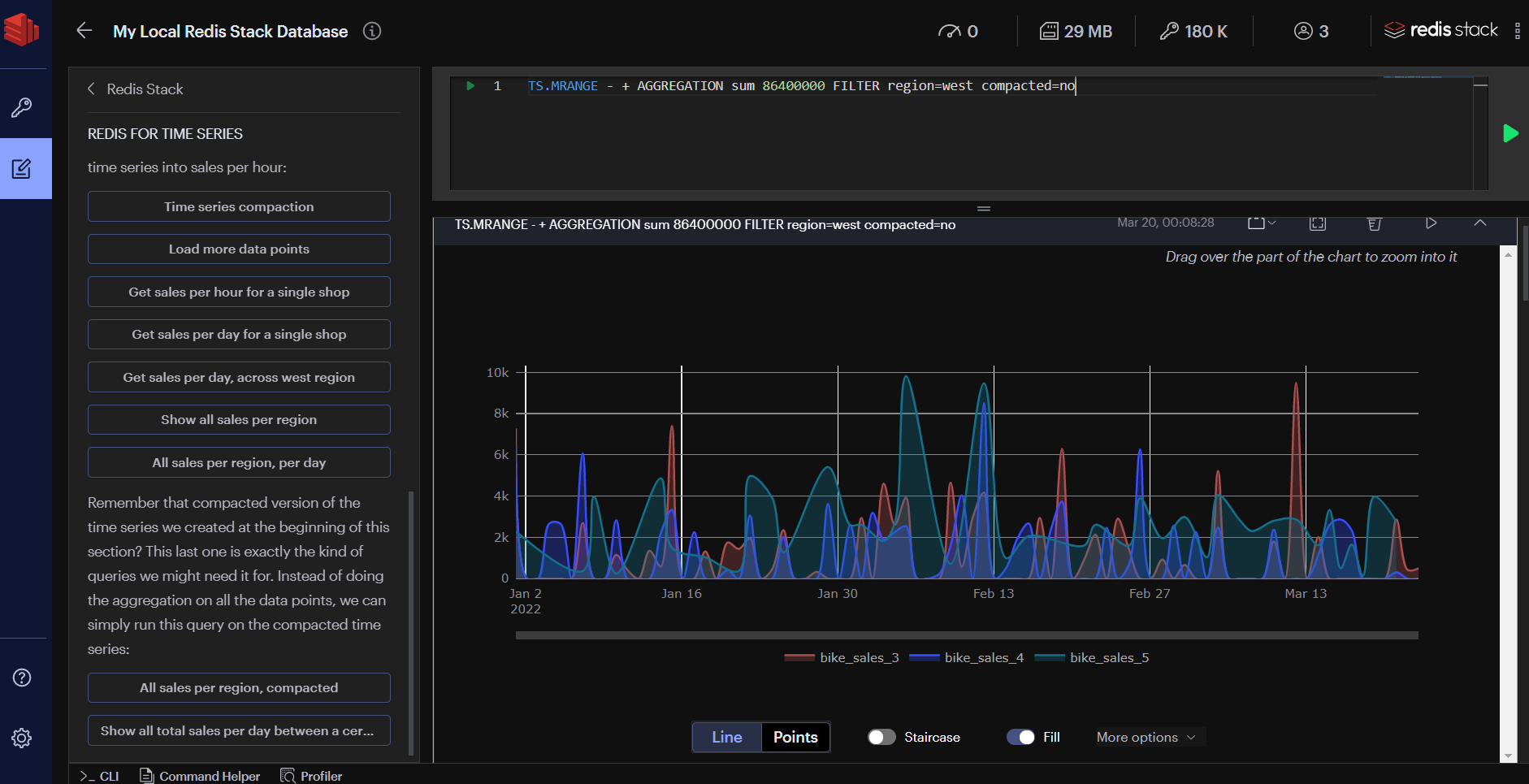

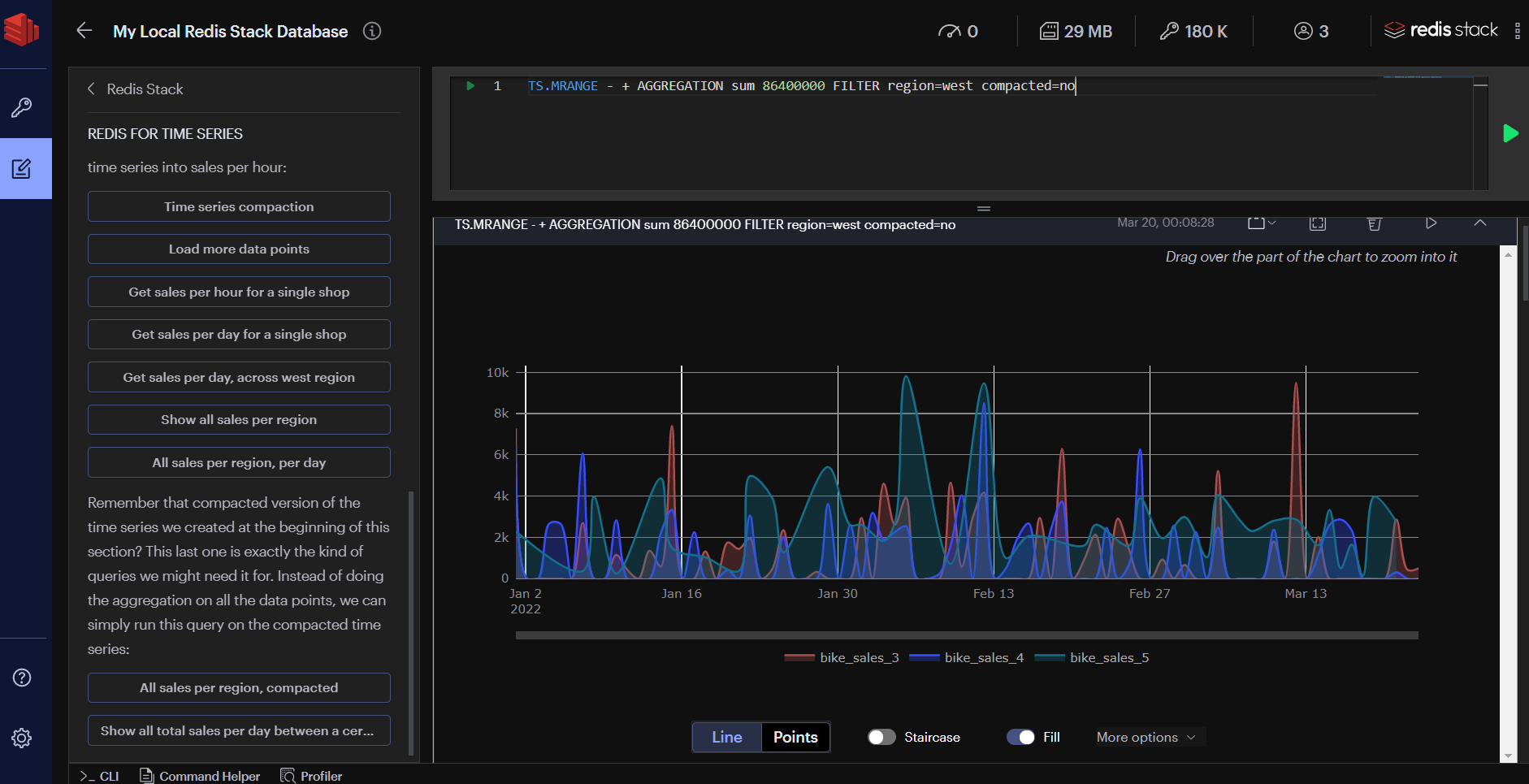

- Time series data (ingestion & querying)

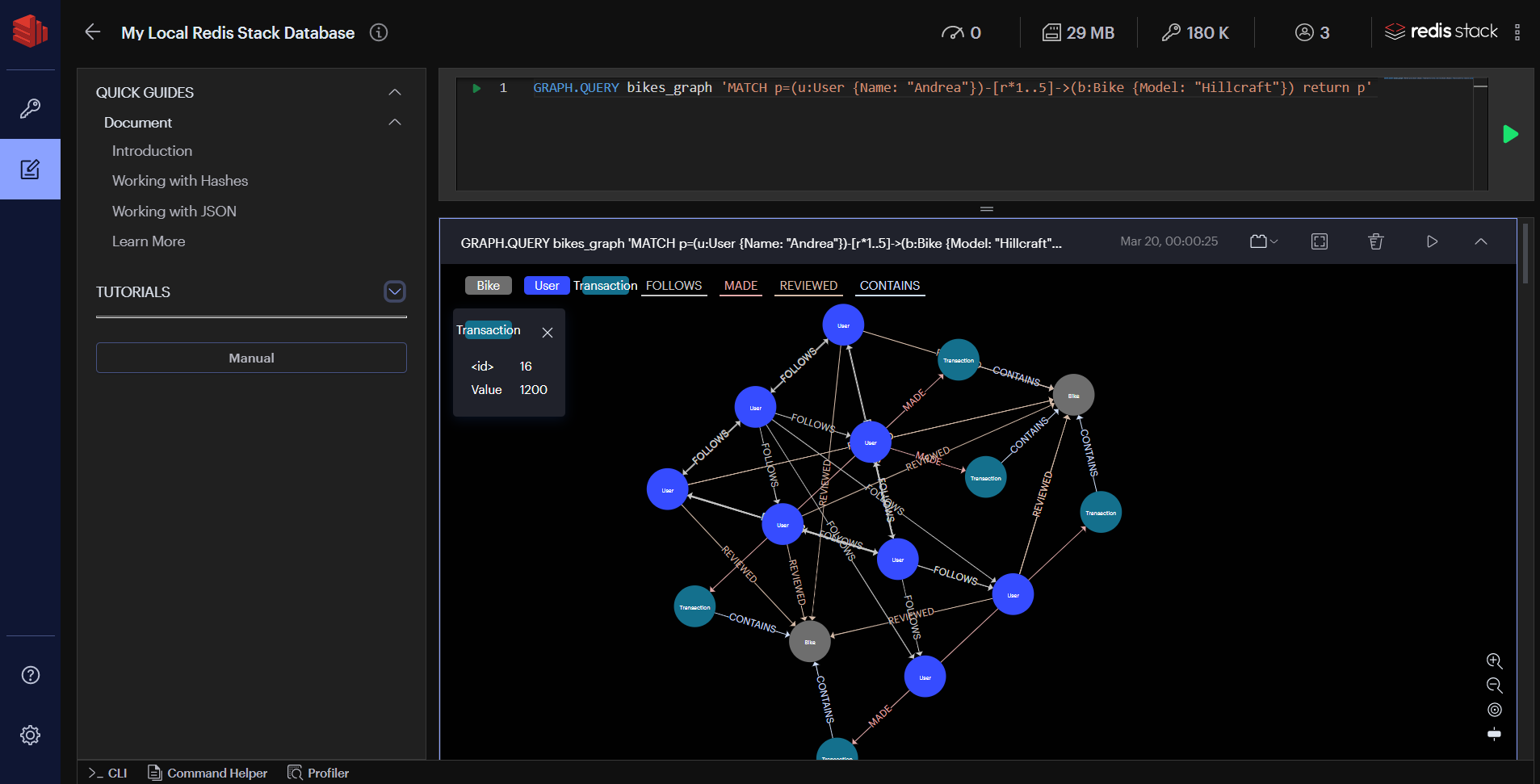

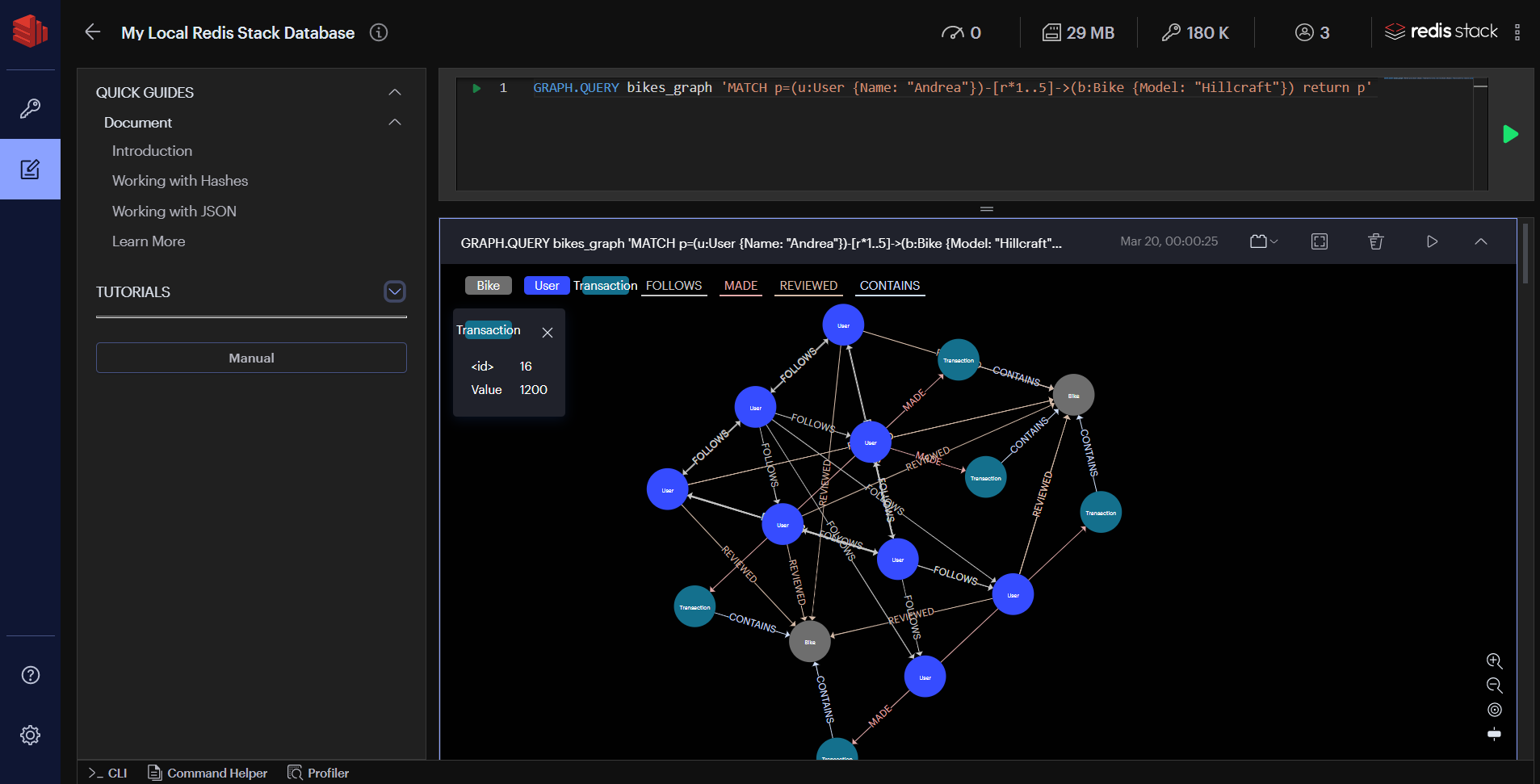

- Graph data models with the Cypher query language

- Probabilistic data structures

Getting started

To get started with Redis Stack, see the Getting Started guide. You may also want to:

If you want to learn more about the vision for Redis Stack, read on.

Why Redis Stack?

Redis Stack was created to allow developers to build real-time applications with a back-end data platform that can reliably process requests in under a millisecond. Redis Stack does this by extending Redis with modern data models and data processing tools (Document, Graph, Search, and Time Series).

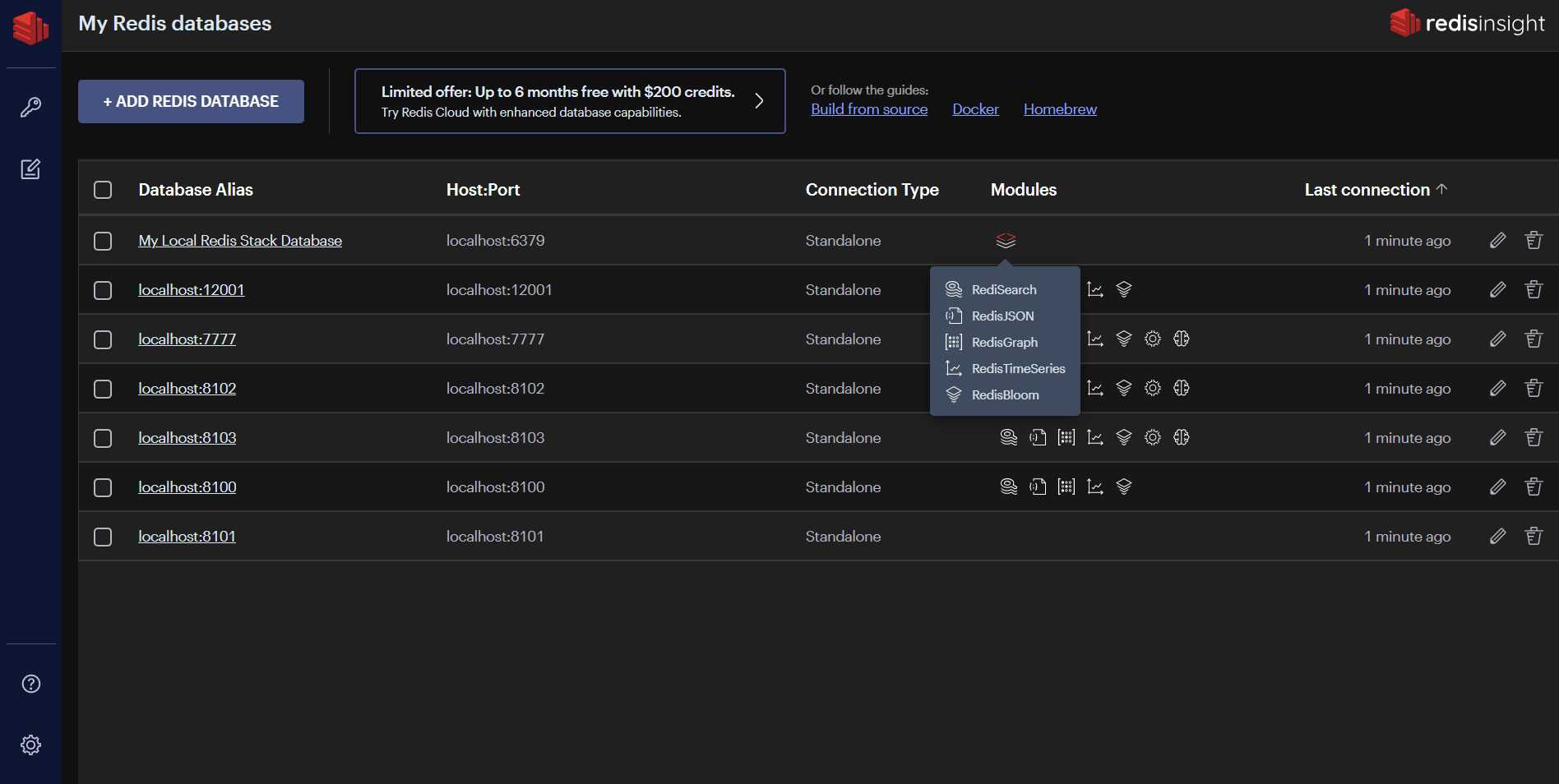

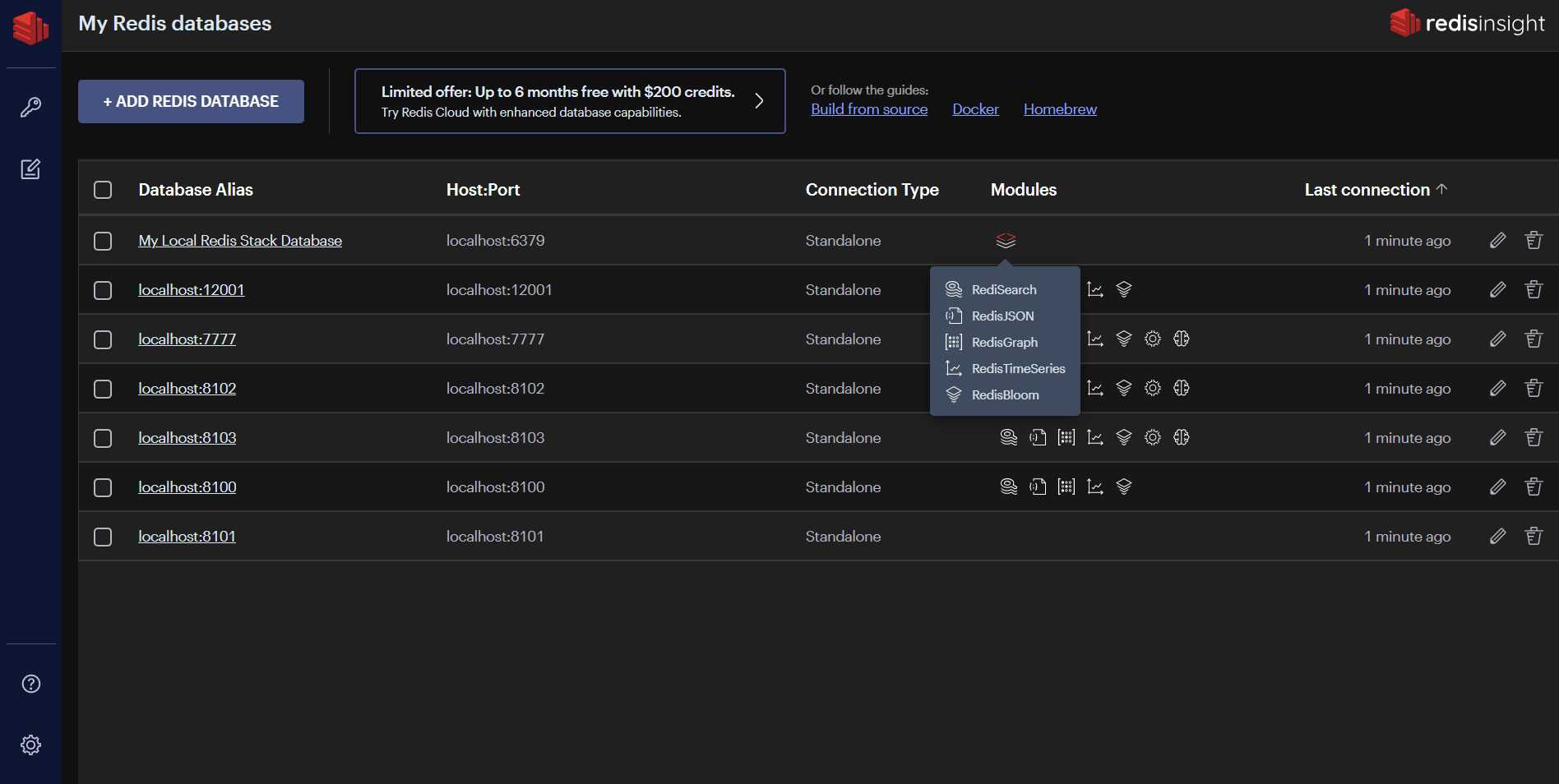

Redis Stack unifies and simplifies the developer experience of the leading Redis modules and the capabilities they provide. Redis Stack bundles five Redis modules: RedisJSON, RediSearch, RedisGraph, RedisTimeSeries, and RedisBloom.

Clients

Several Redis client libraries support Redis Stack. These include redis-py, node_redis, and Jedis. In addition, four higher-level object mapping libraries also support Redis Stack: Redis OM .NET, Redis OM Node, Redis OM Python, and Redis OM Spring.

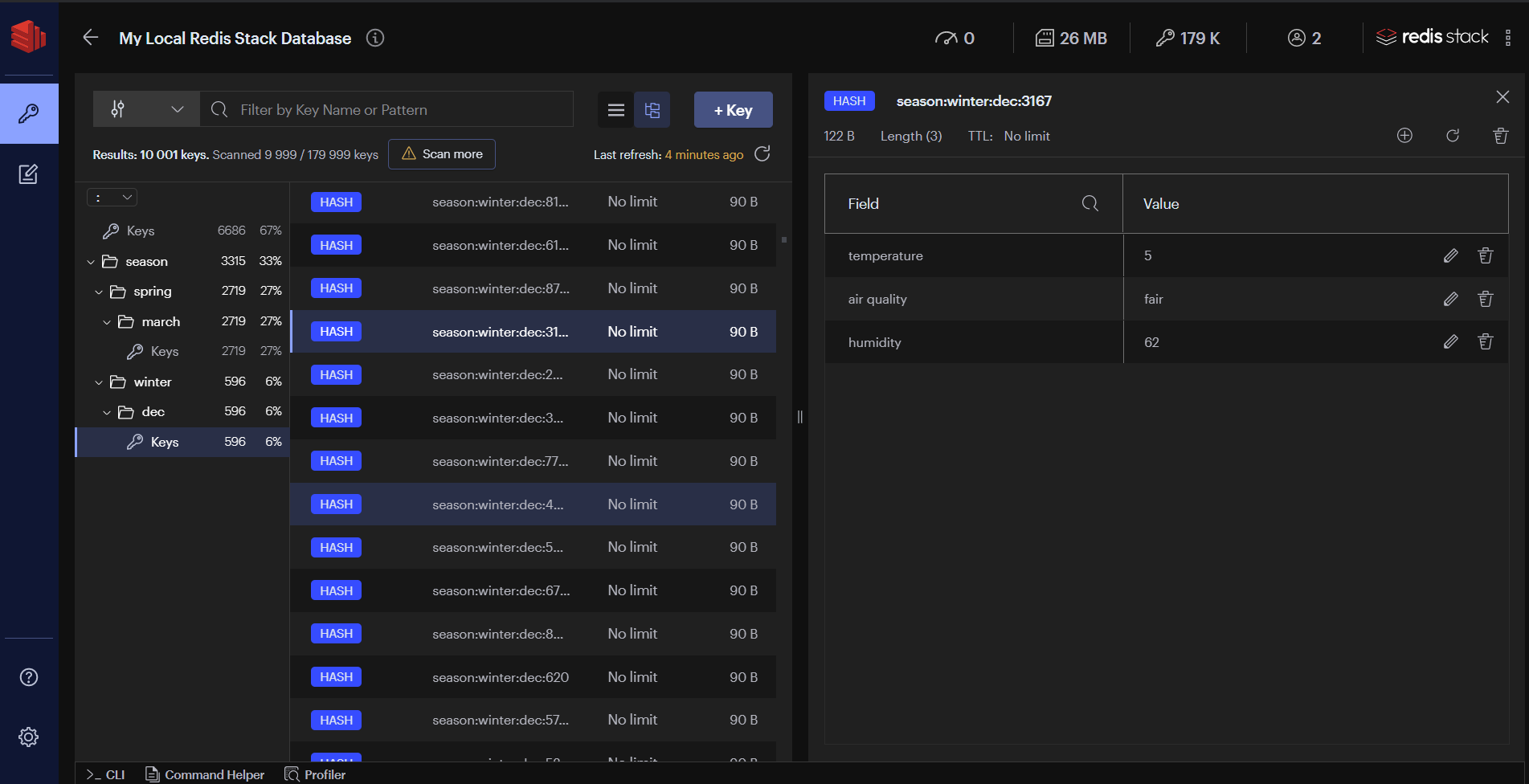

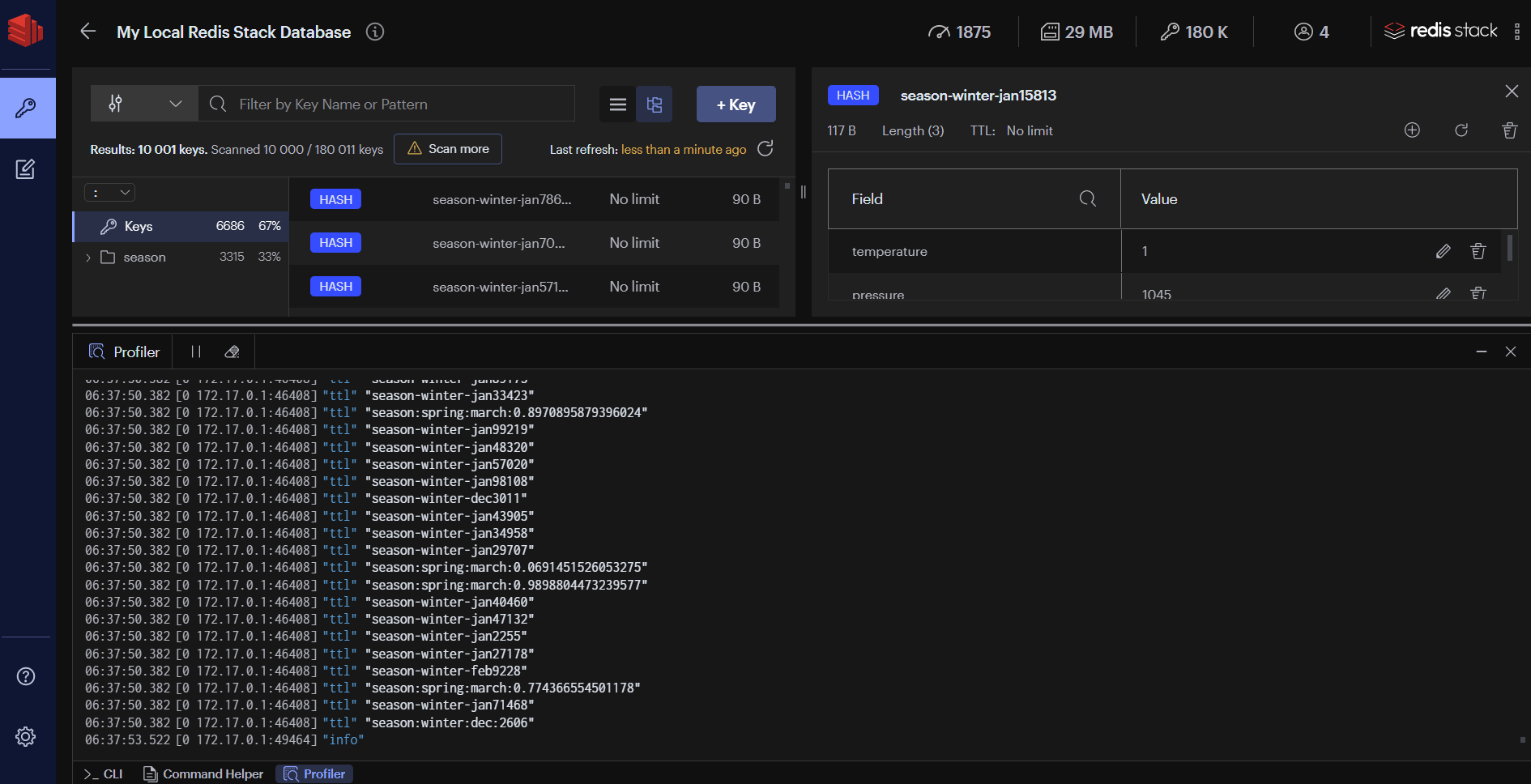

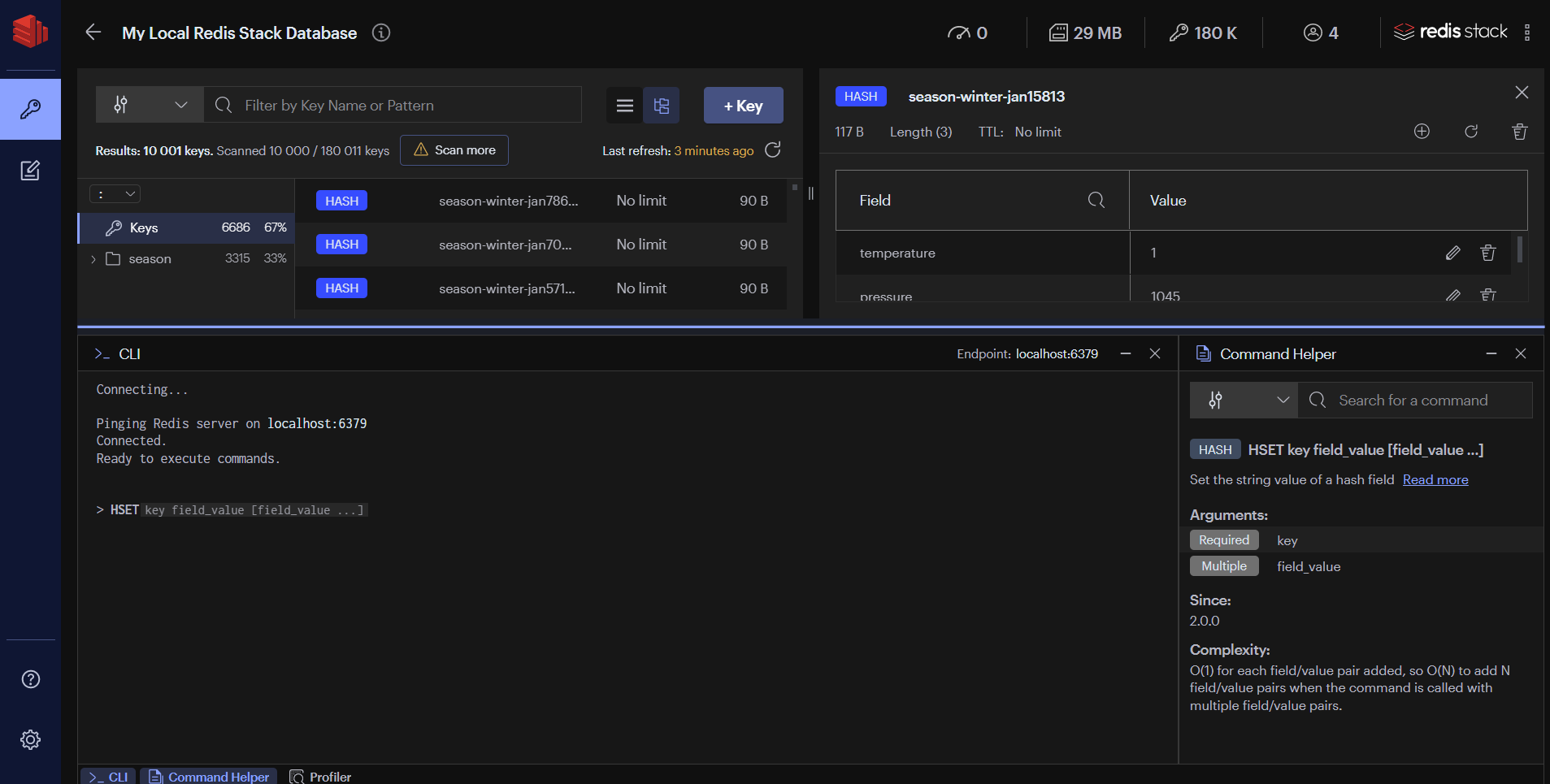

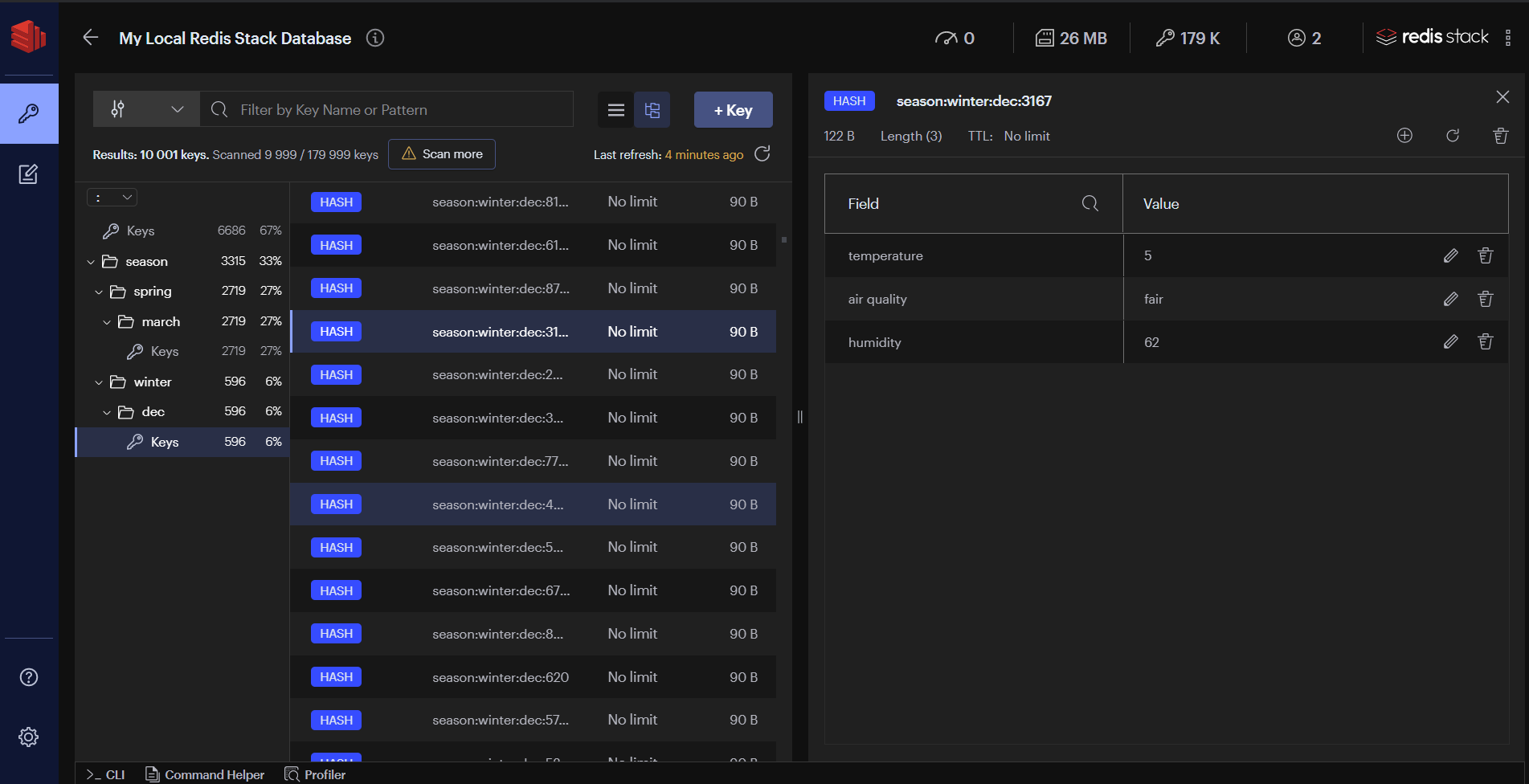

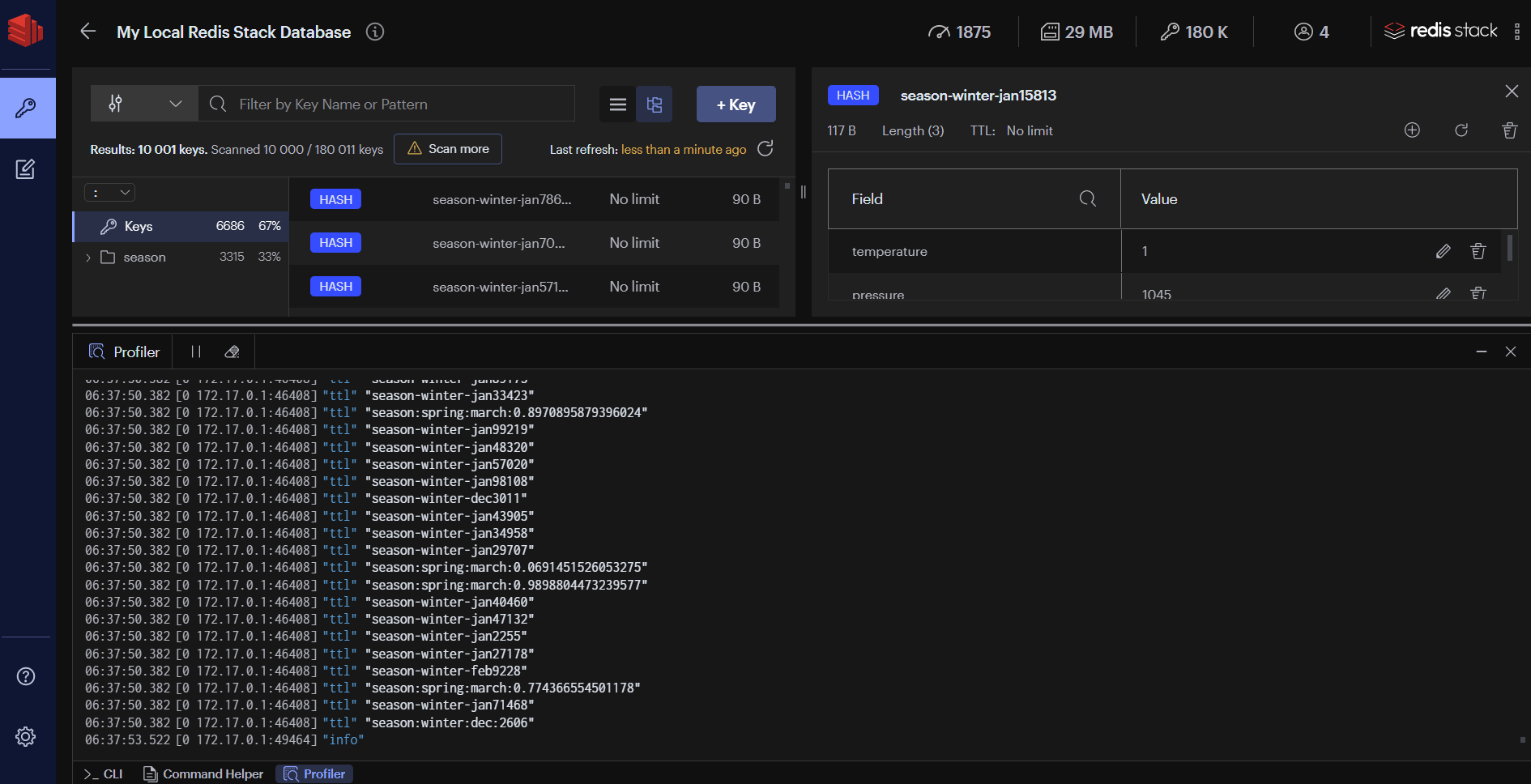

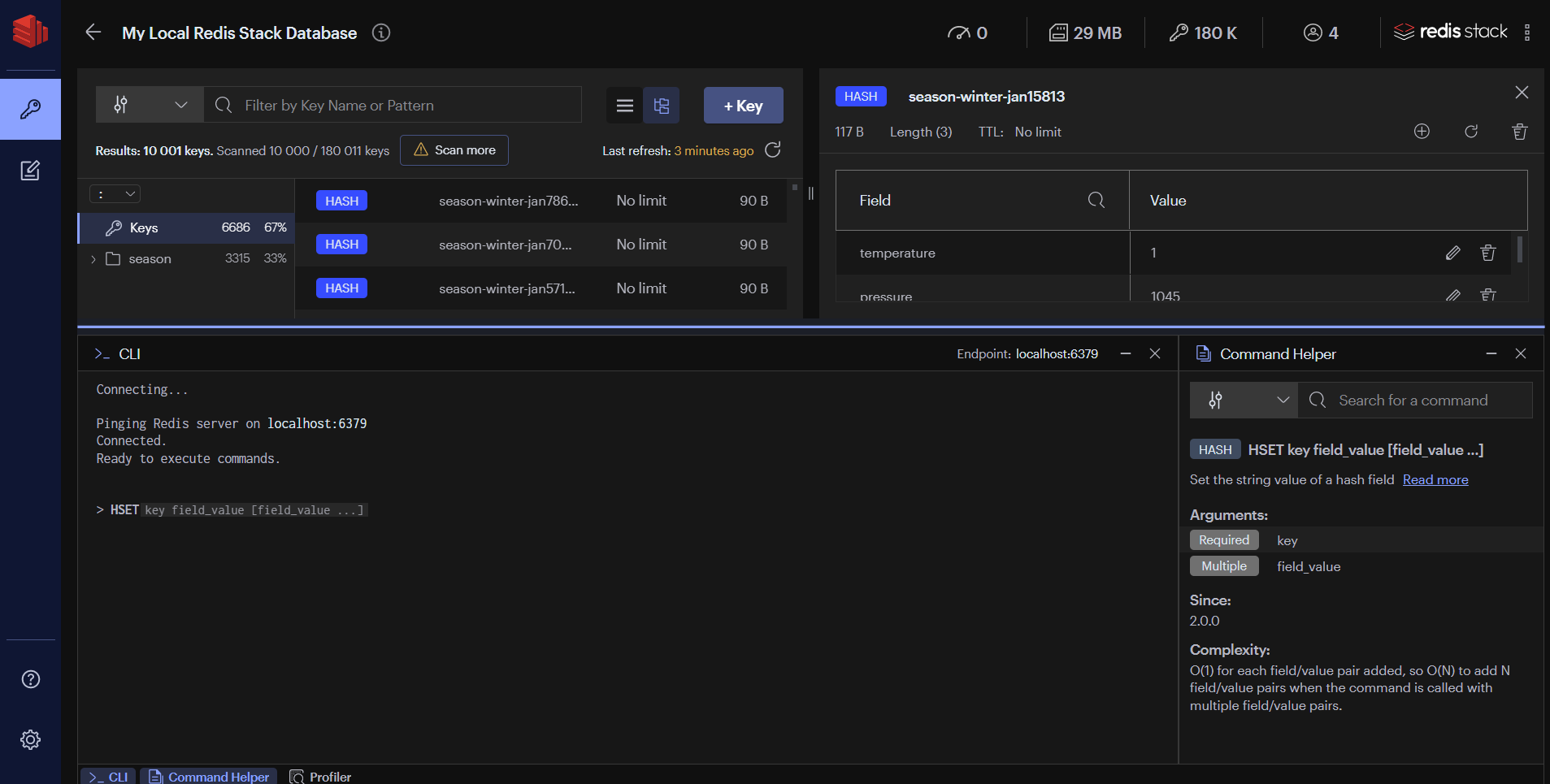

RedisInsight

Redis Stack also includes RedisInsight, a visualization tool for understanding and optimizing Redis data.

Redis Stack license

Redis Stack is made up of several components, licensed as follows:

1 - Get started with Redis Stack

How to install and get started with Redis Stack

1.1 - Install Redis Stack

How to install Redis Stack

1.1.1 - Install Redis Stack with binaries

How to install Redis Stack using tarballs

Start Redis Stack Server

After untarring or unzipping your redis-stack-server download, you can start Redis Stack Server as follows:

/path/to/redis-stack-server/bin/redis-stack-server

Add the binaries to your PATH

You can add the redis-stack-server binaries to your $PATH as follows:

Open the file ~/.bashrc or ~/.zshrc (depending on your shell), and add the following line:

export PATH=/path/to/redis-stack-server/bin:$PATH

If you have an existing Redis installation on your system, you can either override its binaries by prepending to your PATH as shown above, or make only the redis-stack-server binary available. Because PATH entries must be directories rather than individual files, use a shell alias for the latter:

alias redis-stack-server=/path/to/redis-stack-server/bin/redis-stack-server
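Why the order of PATH entries matters: the shell searches the directories listed in $PATH from left to right and runs the first executable it finds. The JavaScript sketch below models that lookup. The fileExists callback and the installed file paths are hypothetical stand-ins for a real filesystem. It also illustrates that a PATH entry has to be a directory: an entry that names a binary file never produces a match.

```javascript
// Sketch: how a POSIX shell resolves a command name against $PATH.
// `fileExists` is a hypothetical stand-in for a real filesystem check.
function resolveCommand(pathVar, command, fileExists) {
  // $PATH is a colon-separated list of directories, searched left to right.
  for (const dir of pathVar.split(':')) {
    // Real shells treat an empty entry as the current directory; skipped here.
    if (dir === '') continue;
    const candidate = `${dir}/${command}`;
    if (fileExists(candidate)) return candidate; // the first match wins
  }
  return null; // not found on $PATH
}

// Hypothetical machine: an older Redis in /usr/local/bin plus Redis Stack elsewhere.
const installed = new Set([
  '/usr/local/bin/redis-server',
  '/path/to/redis-stack-server/bin/redis-server',
  '/path/to/redis-stack-server/bin/redis-stack-server',
]);
const exists = (p) => installed.has(p);
const stackBin = '/path/to/redis-stack-server/bin';
const systemPath = '/usr/local/bin:/usr/bin';

// Prepending the Redis Stack directory makes its redis-server win.
console.log(resolveCommand(`${stackBin}:${systemPath}`, 'redis-server', exists));
// Appending it leaves the existing installation first.
console.log(resolveCommand(`${systemPath}:${stackBin}`, 'redis-server', exists));
// An entry that names a file rather than a directory never matches.
console.log(resolveCommand(`${stackBin}/redis-stack-server`, 'redis-stack-server', exists));
```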

Now you can start Redis Stack Server as follows:

redis-stack-server

1.1.2 - Run Redis Stack on Docker

How to install Redis Stack using Docker

To get started with Redis Stack using Docker, you first need to select a Docker image:

redis/redis-stack contains both Redis Stack server and RedisInsight. This container is best for local development because you can use the embedded RedisInsight to visualize your data.

redis/redis-stack-server provides Redis Stack server only. This container is best for production deployment.

Getting started

redis/redis-stack-server

To start Redis Stack server using the redis-stack-server image, run the following command in your terminal:

docker run -d --name redis-stack-server -p 6379:6379 redis/redis-stack-server:latest

You can then connect the Redis Stack server database to your RedisInsight desktop application.

redis/redis-stack

To start the Redis Stack developer container using the redis-stack image, run the following command in your terminal:

docker run -d --name redis-stack -p 6379:6379 -p 8001:8001 redis/redis-stack:latest

The docker run command above also exposes RedisInsight on port 8001. You can use RedisInsight by pointing your browser to http://localhost:8001.

Connect with redis-cli

You can then connect to the server using redis-cli, just as you connect to any Redis instance.

If you don’t have redis-cli installed locally, you can run it from the Docker container:

$ docker exec -it redis-stack redis-cli

Configuration

Persistence

To persist your Redis data to a local path, specify -v to configure a local volume. This command stores all data in the local directory /local-data:

$ docker run -v /local-data/:/data redis/redis-stack:latest

Ports

If you want to expose Redis Stack server or RedisInsight on a different port, update the left-hand portion of the -p argument. This command exposes Redis Stack server on port 10001 and RedisInsight on port 13333:

$ docker run -p 10001:6379 -p 13333:8001 redis/redis-stack:latest

Config files

By default, the Redis Stack Docker containers use internal configuration files for Redis. To start Redis with a local configuration file, you can use the -v volume option:

$ docker run -v `pwd`/local-redis-stack.conf:/redis-stack.conf -p 6379:6379 -p 8001:8001 redis/redis-stack:latest

Environment variables

To pass in arbitrary configuration changes, you can set any of these environment variables:

REDIS_ARGS: extra arguments for Redis

REDISEARCH_ARGS: arguments for RediSearch

REDISJSON_ARGS: arguments for RedisJSON

REDISGRAPH_ARGS: arguments for RedisGraph

REDISTIMESERIES_ARGS: arguments for RedisTimeSeries

REDISBLOOM_ARGS: arguments for RedisBloom

For example, here's how to use the REDIS_ARGS environment variable to pass the requirepass directive to Redis:

docker run -e REDIS_ARGS="--requirepass redis-stack" redis/redis-stack:latest

Here's how to set a retention policy for RedisTimeSeries:

docker run -e REDISTIMESERIES_ARGS="RETENTION_POLICY=20" redis/redis-stack:latest
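If you start the container from a script rather than a terminal, it can help to build the arguments as an array. The following JavaScript sketch is an illustration, not part of Redis Stack: dockerRunArgs and its option names are hypothetical, while the flags it emits (-d, --name, -p, -v, -e) are the ones used in the commands above. Passing the array to Node's child_process.execFile('docker', args) sends each element as a single argument, so a value such as --requirepass redis-stack needs no shell quoting.

```javascript
// Sketch: assemble `docker run` arguments for the Redis Stack images.
// dockerRunArgs and its option names are hypothetical.
function dockerRunArgs({ image, name, detach = false, ports = [], volumes = [], env = {} }) {
  const args = ['run'];
  if (detach) args.push('-d');
  if (name) args.push('--name', name);
  // -p HOST:CONTAINER: remapping a port only changes the left-hand (host) side.
  for (const [hostPort, containerPort] of ports) args.push('-p', `${hostPort}:${containerPort}`);
  // -v HOST_PATH:CONTAINER_PATH: mounts local data or config files into the container.
  for (const [hostPath, containerPath] of volumes) args.push('-v', `${hostPath}:${containerPath}`);
  // -e NAME=VALUE: one flag per environment variable, e.g. REDIS_ARGS.
  for (const [key, value] of Object.entries(env)) args.push('-e', `${key}=${value}`);
  args.push(image); // the image name comes after all options
  return args;
}

const args = dockerRunArgs({
  image: 'redis/redis-stack:latest',
  ports: [[10001, 6379], [13333, 8001]],
  volumes: [['/local-data/', '/data']],
  env: { REDIS_ARGS: '--requirepass redis-stack' },
});
console.log(args);
```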

1.1.3 - Install Redis Stack on Linux

How to install Redis Stack on Linux

From the official Debian/Ubuntu APT Repository

You can install recent stable versions of Redis Stack from the official packages.redis.io APT repository. The repository currently supports Ubuntu Xenial (16.04), Ubuntu Bionic (18.04), and Ubuntu Focal (20.04). Add the repository to the apt index, update it and install:

curl -fsSL https://packages.redis.io/gpg | sudo gpg --dearmor -o /usr/share/keyrings/redis-archive-keyring.gpg

echo "deb [signed-by=/usr/share/keyrings/redis-archive-keyring.gpg] https://packages.redis.io/deb $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/redis.list

sudo apt-get update

sudo apt-get install redis-stack-server

From the official RPM feed

You can install recent stable versions of Redis Stack from the official packages.redis.io YUM repository. The repository currently supports RHEL7/CentOS7 and RHEL8/CentOS8. Add the repository to the repository index, and install the package.

Create the file /etc/yum.repos.d/redis.repo with the following contents:

[Redis]
name=Redis
baseurl=http://packages.redis.io/rpm/rhel7
enabled=1
gpgcheck=1

curl -fsSL https://packages.redis.io/gpg > /tmp/redis.key

sudo rpm --import /tmp/redis.key

sudo yum install epel-release

sudo yum install redis-stack-server

1.1.4 - Install Redis Stack on macOS

How to install Redis Stack on macOS

To install Redis Stack on macOS, use Homebrew. Make sure that you have Homebrew installed before starting on the installation instructions below.

There are three brew casks available:

- redis-stack contains both the redis-stack-server and redis-stack-redisinsight casks.

- redis-stack-server provides Redis Stack server only.

- redis-stack-redisinsight contains RedisInsight.

Install using Homebrew

First, tap the Redis Stack Homebrew tap:

brew tap redis-stack/redis-stack

Next, run brew install:

brew install redis-stack

The redis-stack-server cask will install all Redis and Redis Stack binaries. How you run these binaries depends on whether you already have Redis installed on your system.

First-time Redis installation

If this is the first time you've installed Redis on your system, then all Redis Stack binaries will be installed and accessible from the $PATH. On M1 Macs, this assumes that /opt/homebrew/bin is in your $PATH. On Intel-based Macs, /usr/local/bin should be in the $PATH.

To check this, run:

echo $PATH

Then, confirm that the output contains /opt/homebrew/bin (M1 Mac) or /usr/local/bin (Intel Mac). If these directories are not in the output, see the "Existing Redis installation" instructions below.

Existing Redis installation

If you have an existing Redis installation on your system, then you might want to modify your $PATH to ensure that you're using the latest Redis Stack binaries.

Open the file ~/.bashrc or ~/.zshrc (depending on your shell), and add the line for your Mac.

For Intel-based Macs:

export PATH=/usr/local/Caskroom/redis-stack-server/<VERSION>/bin:$PATH

For M1 Macs:

export PATH=/opt/homebrew/Caskroom/redis-stack-server/<VERSION>/bin:$PATH

In both cases, replace <VERSION> with your version of Redis Stack. For example, with version 6.2.0, the path is as follows:

export PATH=/opt/homebrew/Caskroom/redis-stack-server/6.2.0/bin:$PATH
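The Caskroom path depends only on the Mac's architecture and the Redis Stack version, so it can be computed rather than typed by hand. The sketch below assumes Node's process.arch naming ('arm64' for M1 Macs, 'x64' for Intel Macs); caskroomBinDir is a hypothetical helper that simply reproduces the two paths given above.

```javascript
// Sketch: compute the Caskroom bin directory for a Mac (hypothetical helper).
// Architecture names follow Node's process.arch: 'arm64' (M1) and 'x64' (Intel).
function caskroomBinDir(arch, version) {
  // Homebrew installs under a different prefix on each architecture.
  const prefixes = new Map([
    ['arm64', '/opt/homebrew'],
    ['x64', '/usr/local'],
  ]);
  const prefix = prefixes.get(arch);
  if (!prefix) throw new Error(`unsupported architecture: ${arch}`);
  return `${prefix}/Caskroom/redis-stack-server/${version}/bin`;
}

// The export line for an M1 Mac with version 6.2.0 (pass process.arch on the Mac itself).
console.log(`export PATH=${caskroomBinDir('arm64', '6.2.0')}:$PATH`);
```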

Start Redis Stack Server

You can now start Redis Stack Server as follows:

redis-stack-server

Installing Redis after installing Redis Stack

If you've already installed Redis Stack with Homebrew and then try to install Redis with brew install redis, you may encounter errors like the following:

Error: The brew link step did not complete successfully
The formula built, but is not symlinked into /usr/local
Could not symlink bin/redis-benchmark
Target /usr/local/bin/redis-benchmark
already exists. You may want to remove it:
  rm '/usr/local/bin/redis-benchmark'

To force the link and overwrite all conflicting files:
  brew link --overwrite redis

To list all files that would be deleted:
  brew link --overwrite --dry-run redis

In this case, you can overwrite the Redis binaries installed by Redis Stack by running:

brew link --overwrite redis

However, Redis Stack Server will still be installed. To uninstall Redis Stack Server, see below.

Uninstall Redis Stack

To uninstall Redis Stack, run:

brew uninstall redis-stack-redisinsight redis-stack-server redis-stack

brew untap redis-stack/redis-stack

1.2 - Redis Stack clients

Client libraries supporting Redis Stack

Redis Stack is built on Redis and uses the same client protocol as Redis. As a result, most Redis client libraries work with Redis Stack. But some client libraries provide a more complete developer experience.

To meaningfully support Redis Stack, a client library must provide an API for the commands exposed by Redis Stack. Core client libraries generally provide one method per Redis Stack command. High-level libraries provide abstractions that may make use of multiple commands.
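To make the difference concrete, here is a JavaScript sketch that only builds command argument lists and sends nothing to Redis. The function names are hypothetical; the command shapes JSON.SET key path value and EXPIRE key seconds are real Redis Stack and Redis commands.

```javascript
// Sketch: core vs. high-level client styles, as plain command builders.
// Nothing is sent to Redis; each function returns argument lists.

// Core style: one function per command.
function jsonSet(key, path, value) {
  return ['JSON.SET', key, path, JSON.stringify(value)];
}

function expire(key, seconds) {
  return ['EXPIRE', key, String(seconds)];
}

// High-level style: one call that may expand into several commands.
function saveDocument(key, doc, ttlSeconds) {
  const commands = [jsonSet(key, '$', doc)]; // "$" is the JSON root path
  if (ttlSeconds !== undefined) commands.push(expire(key, ttlSeconds));
  return commands;
}

console.log(saveDocument('person:42', { firstName: 'Rupert' }, 3600));
```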

Core client libraries

The following core client libraries support Redis Stack:

High-level client libraries

The Redis OM client libraries let you use the document modeling, indexing, and querying capabilities of Redis Stack much like the way you'd use an ORM. The following Redis OM libraries support Redis Stack:

1.3 - Redis Stack tutorials

Learn how to write code against Redis Stack

1.3.1 - Redis OM .NET

Learn how to build with Redis Stack and .NET

Redis OM .NET is a purpose-built library for handling documents in Redis Stack. In this tutorial, we'll build a simple ASP.NET Core Web-API app for performing CRUD operations on a simple Person & Address model, and we'll accomplish all of this with Redis OM .NET.

Prerequisites

- .NET 6 SDK

- An IDE for writing .NET (Visual Studio, Rider, or Visual Studio Code)

- Optional: Docker Desktop for running redis-stack in Docker for local testing.

Skip to the code

If you want to skip this tutorial and jump straight into the code, all the source code is available on GitHub.

Run Redis Stack

There are a variety of ways to run Redis Stack. One way is to use the docker image:

docker run -d -p 6379:6379 -p 8001:8001 redis/redis-stack

Create the project

To create the project, just run:

dotnet new webapi -n Redis.OM.Skeleton --no-https --kestrelHttpPort 5000

Then open the Redis.OM.Skeleton.csproj file in your IDE of choice.

Add a "REDIS_CONNECTION_STRING" field to your appsettings.json file to configure the application. Set that connection string to the URI of your Redis instance. If you are using the docker command mentioned earlier, your connection string will be redis://localhost:6379.

Create the model

Now it's time to create the Person/Address model that the app will use for storing/retrieving people. Create a new directory called Model and add the files Address.cs and Person.cs to it. In Address.cs, add the following:

using Redis.OM.Modeling;

namespace Redis.OM.Skeleton.Model;

public class Address
{
    [Indexed]
    public int? StreetNumber { get; set; }

    [Indexed]
    public string? Unit { get; set; }

    [Searchable]
    public string? StreetName { get; set; }

    [Indexed]
    public string? City { get; set; }

    [Indexed]
    public string? State { get; set; }

    [Indexed]
    public string? PostalCode { get; set; }

    [Indexed]
    public string? Country { get; set; }

    [Indexed]
    public GeoLoc Location { get; set; }
}

Here, you'll notice that all the fields except StreetName, which is marked as Searchable, are decorated with the Indexed attribute. These attributes (Searchable and Indexed) tell Redis OM that you want to be able to use those fields in queries when querying your documents in Redis Stack. Address will not be a Document itself, so the top-level class is not decorated with anything; instead, the Address model will be embedded in our Person model.

To that end, add the following to Person.cs:

using Redis.OM.Modeling;

namespace Redis.OM.Skeleton.Model;

[Document(StorageType = StorageType.Json, Prefixes = new []{"Person"})]
public class Person
{
    [RedisIdField] [Indexed] public string? Id { get; set; }

    [Indexed] public string? FirstName { get; set; }

    [Indexed] public string? LastName { get; set; }

    [Indexed] public int Age { get; set; }

    [Searchable] public string? PersonalStatement { get; set; }

    [Indexed] public string[] Skills { get; set; } = Array.Empty<string>();

    [Indexed(CascadeDepth = 1)] public Address? Address { get; set; }
}

There are a few things to take note of here:

[Document(StorageType = StorageType.Json, Prefixes = new []{"Person"})] Indicates that the data type that Redis OM will use to store the document in Redis is JSON and that the prefix for the keys for the Person class will be Person.

[Indexed(CascadeDepth = 1)] on the Address property is one of two ways you can index an embedded object with Redis OM. This approach instructs the index to cascade to the objects in the object graph; a CascadeDepth of 1 means that it traverses just one level, indexing the embedded object as if it were building the index from scratch. The other method uses the JsonPath property of the individual indexed fields you want to search on. This more surgical approach limits the size of the index.

The Id property is marked as a RedisIdField. This denotes the field as the one that will be used to generate the document's key name when it's stored in Redis.

Create the Index

With the model built, the next step is to create the index in Redis. A clean way to manage this is to move index creation into a hosted service, which runs when the app starts. Create a HostedServices directory and add IndexCreationService.cs to it. In that file, add the following, which creates the index on startup:

using Redis.OM.Skeleton.Model;

namespace Redis.OM.Skeleton.HostedServices;

public class IndexCreationService : IHostedService
{
    private readonly RedisConnectionProvider _provider;

    public IndexCreationService(RedisConnectionProvider provider)
    {
        _provider = provider;
    }

    public async Task StartAsync(CancellationToken cancellationToken)
    {
        await _provider.Connection.CreateIndexAsync(typeof(Person));
    }

    public Task StopAsync(CancellationToken cancellationToken)
    {
        return Task.CompletedTask;
    }
}

Inject the RedisConnectionProvider

Redis OM uses the RedisConnectionProvider class to handle connections to Redis, and it provides the classes you use to interact with Redis. To use it, inject an instance of RedisConnectionProvider into your app. In your Program.cs file (with using Redis.OM; and using Redis.OM.Skeleton.HostedServices; at the top of the file), add the following before the app is built:

builder.Services.AddSingleton(new RedisConnectionProvider(builder.Configuration["REDIS_CONNECTION_STRING"]));
builder.Services.AddHostedService<IndexCreationService>();

This pulls your connection string out of the configuration, initializes the provider, and registers IndexCreationService so that the index is created on startup. The provider is now available for your controllers and services to use.

Create the PeopleController

The final piece of the puzzle is the API controller for our People API. In the Controllers directory, add the file PeopleController.cs. The skeleton of the PeopleController class is:

using Microsoft.AspNetCore.Mvc;
using Redis.OM.Searching;
using Redis.OM.Skeleton.Model;

namespace Redis.OM.Skeleton.Controllers;

[ApiController]
[Route("[controller]")]
public class PeopleController : ControllerBase
{

}

Inject the RedisConnectionProvider

To interact with Redis, inject the RedisConnectionProvider. During this dependency injection, pull out a RedisCollection<Person> instance, which will allow a fluent interface for querying documents in Redis.

private readonly RedisCollection<Person> _people;
private readonly RedisConnectionProvider _provider;

public PeopleController(RedisConnectionProvider provider)
{
    _provider = provider;
    _people = (RedisCollection<Person>)provider.RedisCollection<Person>();
}

Add route for creating a Person

The first route to add to the API is a POST request for creating a person. Using the RedisCollection, it's as simple as calling InsertAsync and passing in the person object:

[HttpPost]
public async Task<Person> AddPerson([FromBody] Person person)
{
    await _people.InsertAsync(person);
    return person;
}

Add route to filter by age

The first filter route to add to the API will let the user filter by a minimum and maximum age. Using the LINQ interface available to the RedisCollection, this is a simple operation:

[HttpGet("filterAge")]
public IList<Person> FilterByAge([FromQuery] int minAge, [FromQuery] int maxAge)
{
    return _people.Where(x => x.Age >= minAge && x.Age <= maxAge).ToList();
}
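Under the hood, an age filter like this is answered by a RediSearch numeric-range query. As an illustration, and assuming that Redis OM generates equivalent (not necessarily identical) query text, the JavaScript sketch below builds that syntax by hand: bounds are inclusive by default, a leading ( makes a bound exclusive, and -inf/+inf leave a side open. numericRange is a hypothetical helper.

```javascript
// Sketch: RediSearch numeric-range syntax (illustration, hypothetical helper).
function numericRange(field, min, max, { exclusiveMin = false, exclusiveMax = false } = {}) {
  // Bounds are inclusive unless prefixed with "("; -inf/+inf leave a side open.
  const lo = min === -Infinity ? '-inf' : `${exclusiveMin ? '(' : ''}${min}`;
  const hi = max === Infinity ? '+inf' : `${exclusiveMax ? '(' : ''}${max}`;
  return `@${field}:[${lo} ${hi}]`;
}

// Corresponds to x.Age >= 20 && x.Age <= 30
console.log(numericRange('Age', 20, 30)); // @Age:[20 30]
// Corresponds to x.Age > 20 with no upper bound
console.log(numericRange('Age', 20, Infinity, { exclusiveMin: true })); // @Age:[(20 +inf]
```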

Filter by GeoLocation

Redis OM has a GeoLoc data structure, and the Address model indexes an instance of it. With the RedisCollection, you can find all objects within a radius of a particular position by using the GeoFilter method along with the field you want to filter on:

[HttpGet("filterGeo")]
public IList<Person> FilterByGeo([FromQuery] double lon, [FromQuery] double lat, [FromQuery] double radius, [FromQuery] string unit)
{
    return _people.GeoFilter(x => x.Address!.Location, lon, lat, radius, Enum.Parse<GeoLocDistanceUnit>(unit)).ToList();
}
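Conceptually, a radius filter keeps every document whose stored point lies within the given great-circle distance of the center. The JavaScript sketch below shows that geometry with the haversine formula on a spherical Earth of mean radius 6371 km. It is not Redis's implementation (Redis performs the search server-side with its own Earth model), so distances can differ slightly near the boundary. The sample people and the { longitude, latitude } shape are hypothetical; the argument order (lon, then lat) mirrors the FilterByGeo route.

```javascript
// Sketch: great-circle distance with the haversine formula (spherical Earth).
// Illustration only; Redis computes geo distances server-side.
const EARTH_RADIUS_KM = 6371; // mean Earth radius

const toRadians = (degrees) => (degrees * Math.PI) / 180;

function haversineKm(lon1, lat1, lon2, lat2) {
  const dLat = toRadians(lat2 - lat1);
  const dLon = toRadians(lon2 - lon1);
  const a =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRadians(lat1)) * Math.cos(toRadians(lat2)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(a));
}

// Keep the people whose location lies within radiusKm of (lon, lat).
function withinRadius(people, lon, lat, radiusKm) {
  return people.filter(
    (p) => haversineKm(lon, lat, p.location.longitude, p.location.latitude) <= radiusKm
  );
}

// Hypothetical data: one point about 56 km from the center, one about 222 km away.
const people = [
  { name: 'near', location: { longitude: 0, latitude: 0.5 } },
  { name: 'far', location: { longitude: 0, latitude: 2 } },
];
console.log(withinRadius(people, 0, 0, 100).map((p) => p.name)); // [ 'near' ]
```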

Filter by exact string

When a string property in your model is marked as Indexed (for example, FirstName and LastName), Redis OM can perform exact text matches against it. For example, the following two routes, which filter by name and by PostalCode, demonstrate exact string matches:

[HttpGet("filterName")]
public IList<Person> FilterByName([FromQuery] string firstName, [FromQuery] string lastName)
{
    return _people.Where(x => x.FirstName == firstName && x.LastName == lastName).ToList();
}

[HttpGet("postalCode")]
public IList<Person> FilterByPostalCode([FromQuery] string postalCode)
{
    return _people.Where(x => x.Address!.PostalCode == postalCode).ToList();
}
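Exact matches on Indexed string fields correspond to RediSearch TAG queries of the form @Field:{value}, in which spaces and punctuation inside the value must be escaped with a backslash. Redis OM builds and escapes these queries for you; the JavaScript sketch below, with a hypothetical tagQuery helper and illustrative field names, only shows the syntax. It escapes conservatively, treating anything other than ASCII letters, digits, and underscores as special.

```javascript
// Sketch: RediSearch TAG query syntax for exact matches (illustration only).
function tagQuery(field, value) {
  // Conservatively backslash-escape everything except ASCII letters, digits, and "_".
  const escaped = value.replace(/[^A-Za-z0-9_]/g, (ch) => `\\${ch}`);
  return `@${field}:{${escaped}}`;
}

// Clauses separated by a space must all match (an intersection).
function allOf(...clauses) {
  return clauses.join(' ');
}

console.log(allOf(tagQuery('FirstName', 'Mary Ann'), tagQuery('LastName', 'Smith')));
// @FirstName:{Mary\ Ann} @LastName:{Smith}
console.log(tagQuery('PostalCode', '30309-1234'));
// @PostalCode:{30309\-1234}
```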

Filter with a full-text search

When a property in the model is marked as Searchable, like StreetName and PersonalStatement, you can perform a full-text search. See the filters for PersonalStatement and StreetName:

[HttpGet("fullText")]
public IList<Person> FilterByPersonalStatement([FromQuery] string text)
{
    return _people.Where(x => x.PersonalStatement == text).ToList();
}

[HttpGet("streetName")]
public IList<Person> FilterByStreetName([FromQuery] string streetName)
{
    return _people.Where(x => x.Address!.StreetName == streetName).ToList();
}

Filter by array membership

When a string array or list is marked as Indexed, Redis OM can filter all the records containing a given string using the Contains method of the array or list. For example, our Person model has a list of skills you can query by adding the following route.

[HttpGet("skill")]
public IList<Person> FilterBySkill([FromQuery] string skill)
{
    return _people.Where(x => x.Skills.Contains(skill)).ToList();
}

Updating a person

Updating a document in Redis Stack with Redis OM can be done by first materializing the person object, making your desired changes, and then calling Save on the collection. The collection is responsible for keeping track of updates made to entities materialized in it; therefore, it will track and apply any updates you make in it. For example, add the following route to update the age of a Person given their Id:

[HttpPatch("updateAge/{id}")]
public IActionResult UpdateAge([FromRoute] string id, [FromBody] int newAge)
{
    foreach (var person in _people.Where(x => x.Id == id))
    {
        person.Age = newAge;
    }

    _people.Save();
    return Accepted();
}
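The change-tracking idea can be sketched in a few lines of JavaScript: snapshot each object when it is materialized, then have save() write back only the objects whose serialized form changed. TrackedCollection and its in-memory Map store are hypothetical stand-ins; this is a conceptual model, not how Redis OM .NET implements Save().

```javascript
// Sketch: snapshot-based change tracking (hypothetical, not Redis OM code).
class TrackedCollection {
  constructor(store) {
    this.store = store;            // Map of key -> JSON string, standing in for Redis
    this.snapshots = new Map();    // key -> JSON as it was when materialized
    this.materialized = new Map(); // key -> live object handed to the caller
  }

  // Materialize matching objects and remember how they looked.
  where(predicate) {
    const results = [];
    for (const [key, json] of this.store) {
      const obj = JSON.parse(json);
      if (!predicate(obj)) continue;
      this.snapshots.set(key, JSON.stringify(obj));
      this.materialized.set(key, obj);
      results.push(obj);
    }
    return results;
  }

  // Write back only the objects whose serialized form differs from the snapshot.
  save() {
    const written = [];
    for (const [key, obj] of this.materialized) {
      const json = JSON.stringify(obj);
      if (json !== this.snapshots.get(key)) {
        this.store.set(key, json);
        this.snapshots.set(key, json);
        written.push(key);
      }
    }
    return written; // keys that were updated
  }
}

const store = new Map([
  ['Person:1', JSON.stringify({ Id: '1', Age: 30 })],
  ['Person:2', JSON.stringify({ Id: '2', Age: 40 })],
]);
const people = new TrackedCollection(store);
for (const person of people.where((p) => p.Id === '1')) person.Age = 31;
const updated = people.save(); // ['Person:1']
```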

Delete a person

Deleting a document from Redis can be done with Unlink. All that's needed is to call Unlink, passing in the key name. Given an id, we can reconstruct the key name using the prefix and the id:

[HttpDelete("{id}")]
public IActionResult DeletePerson([FromRoute] string id)
{
    _provider.Connection.Unlink($"Person:{id}");
    return NoContent();
}
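The key-name convention is simple enough to sketch. The JavaScript helpers below are hypothetical; they assume the Prefix:Id shape used by the delete route's $"Person:{id}" and split on the first colon so that ids containing colons survive the round trip. As for the command itself, UNLINK (unlike DEL) removes the key from the keyspace immediately and reclaims its memory in a background thread, which keeps large deletes from blocking the server.

```javascript
// Sketch: build and parse "Prefix:Id" key names (hypothetical helpers).
function keyName(prefix, id) {
  if (!prefix || !id) throw new Error('prefix and id are required');
  return `${prefix}:${id}`;
}

function parseKeyName(key) {
  // Split on the first colon only, so an id that contains ":" is preserved.
  const index = key.indexOf(':');
  if (index === -1) throw new Error(`not a Prefix:Id key: ${key}`);
  return { prefix: key.slice(0, index), id: key.slice(index + 1) };
}

const key = keyName('Person', '42');
console.log(key);               // Person:42
console.log(parseKeyName(key)); // { prefix: 'Person', id: '42' }
```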

Run the app

All that's left to do now is run the app and test it. Run dotnet run; the app is exposed on port 5000, and there is a Swagger UI you can use to play with the API at http://localhost:5000/swagger. The GitHub repo also contains a couple of scripts, along with some data files, that insert some people into Redis using the API.

Viewing data with RedisInsight

You can either install the RedisInsight desktop application or use the RedisInsight instance running on http://localhost:8001/.

You can view the data by following these steps:

- Accept the EULA.

- Click the Add Redis Database button.

- Enter the hostname and port of your Redis server. If you are using the Docker image, these are localhost and 6379. Give your database an alias.

- Click Add Redis Database.

Resources

- The source code for this tutorial can be found in GitHub.

- To learn more about Redis OM, check out the guide on Redis Developer.

1.3.2 - Redis OM for Node.js

Learn how to build with Redis Stack and Node.js

This tutorial will show you how to build an API using Node.js and Redis Stack.

We'll be using Express and Redis OM to do this, and we assume that you have a basic understanding of Express.

The API we'll be building is a simple and relatively RESTful API that reads, writes, and finds data on persons: first name, last name, age, etc. We'll also add a simple location tracking feature just for a bit of extra interest.

But before we start with the coding, let's start with a description of what Redis OM is.

Redis OM for Node.js

Redis OM (pronounced REDiss OHM) is a library that provides object mapping for Redis—that's what the OM stands for... object mapping. It maps Redis data types — specifically Hashes and JSON documents — to JavaScript objects. And it allows you to search over these Hashes and JSON documents. It uses RedisJSON and RediSearch to do this.

RedisJSON and RediSearch are two of the modules included in Redis Stack. Modules are extensions to Redis that add new data types and new commands. RedisJSON adds a JSON document data type and the commands to manipulate it. RediSearch adds various search commands to index the contents of JSON documents and Hashes.

Redis OM comes in four different versions. We'll be working with Redis OM for Node.js in this tutorial, but there are also flavors and tutorials for Python, .NET, and Spring.

This tutorial will get you started with Redis OM for Node.js, covering the basics. But if you want to dive deep into all of Redis OM's capabilities, check out the README over on GitHub.

Prerequisites

Like anything software-related, you need to have some dependencies installed before you can get started:

- Node.js 14.8+: In this tutorial, we're using JavaScript's top-level await feature, which was introduced in Node 14.8. So, make sure you are using that version or later.

- Redis Stack: You need a version of Redis Stack, either running locally on your machine or in the cloud.

- RedisInsight: We'll use this to look inside Redis and make sure our code is doing what we think it's doing.

Starter code

We're not going to code this completely from scratch. Instead, we've provided some starter code for you. Go ahead and clone it to a folder of your choosing:

git clone git@github.com:redis-developer/express-redis-om-workshop.git

Now that you have the starter code, let's explore it a bit. Opening up server.js in the root, we see that we have a simple Express app that uses Dotenv for configuration and Swagger UI Express for testing our API:

import 'dotenv/config'

import express from 'express'
import swaggerUi from 'swagger-ui-express'
import YAML from 'yamljs'

/* create an express app and use JSON */
const app = new express()
app.use(express.json())

/* set up swagger in the root */
const swaggerDocument = YAML.load('api.yaml')
app.use('/', swaggerUi.serve, swaggerUi.setup(swaggerDocument))

/* start the server */
app.listen(8080)

Alongside this is api.yaml, which defines the API we're going to build and provides the information Swagger UI Express needs to render its UI. You don't need to mess with it unless you want to add some additional routes.

The persons folder has some JSON files and a shell script. The JSON files are sample persons—all musicians because fun—that you can load into the API to test it. The shell script—load-data.sh—will load all the JSON files into the API using curl.

There are two empty folders, om and routers. The om folder is where all the Redis OM code will go. The routers folder will hold code for all of our Express routes.

The starter code is perfectly runnable if a bit thin. Let's configure and run it to make sure it works before we move on to writing actual code. First, get all the dependencies:

npm install

Then, set up a .env file in the root that Dotenv can make use of. There's a sample.env file in the root that you can copy and modify:

cp sample.env .env

The contents of .env look like this:

# Put your local Redis Stack URL here. Want to run in the
# cloud instead? Sign up at https://redis.com/try-free/.
REDIS_URL=redis://localhost:6379

There's a good chance this is already correct. However, if you need to change the REDIS_URL for your particular environment (e.g., you're running Redis Stack in the cloud), this is the time to do it. Once done, you should be able to run the app:

npm start

Navigate to http://localhost:8080 and check out the client that Swagger UI Express has created. None of it works yet because we haven't implemented any of the routes. But, you can try them out and watch them fail!

The starter code runs. Let's add some Redis OM to it so it actually does something!

Setting up a Client

First things first, let's set up a client. The Client class is the thing that knows how to talk to Redis on behalf of Redis OM. One option is to put our client in its own file and export it. This ensures that the application has one and only one instance of Client and thus only one connection to Redis Stack. Since Redis and JavaScript are both (more or less) single-threaded, this works neatly.

Let's create our first file. In the om folder add a file called client.js and add the following code:

import { Client } from 'redis-om'

/* pulls the Redis URL from .env */
const url = process.env.REDIS_URL

/* create and open the Redis OM Client */
const client = await new Client().open(url)

export default client

Remember that top-level await stuff we mentioned earlier? There it is!

Note that we are getting our Redis URL from an environment variable. It was put there by Dotenv and read from our .env file. If we didn't have the .env file or have a REDIS_URL property in our .env file, this code would gladly read this value from the actual environment variables.
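If you want a sensible default when REDIS_URL is unset, or want to check what the URL contains, Node's built-in WHATWG URL class can parse redis:// URLs. The sketch below is an illustration under stated assumptions: the fallback value is the one from sample.env, redisUrlFromEnv is a hypothetical helper, and the fallback lives in this helper rather than in redis-om.

```javascript
// Sketch: read REDIS_URL with a local fallback and inspect its parts.
// redisUrlFromEnv is hypothetical; URL is built into Node.js.
function redisUrlFromEnv(env = process.env) {
  // Treat a missing or empty REDIS_URL as unset (fallback taken from sample.env).
  const url = env.REDIS_URL || 'redis://localhost:6379';
  const parsed = new URL(url);
  return {
    url,
    host: parsed.hostname,
    port: parsed.port ? Number(parsed.port) : 6379, // 6379 is Redis's default port
    password: parsed.password ? decodeURIComponent(parsed.password) : undefined,
  };
}

console.log(redisUrlFromEnv({ REDIS_URL: 'redis://:s3cret@redis.example.com:12000' }));
console.log(redisUrlFromEnv({})); // falls back to localhost:6379
```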

Also note that the .open() method conveniently returns this. This this (can I say this again? I just did!) lets us chain the instantiation of the client with the opening of the client. If this isn't to your liking, you could always write it like this:

/* create and open the Redis OM Client */
const client = new Client()
await client.open(url)
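The chained form works because open() is asynchronous and resolves to the client instance itself. The FakeClient class below is a hypothetical, network-free stand-in that shows the pattern; it is not the redis-om Client.

```javascript
// Sketch: an async method that resolves to `this` enables chaining.
// FakeClient is hypothetical and does no networking.
class FakeClient {
  constructor() {
    this.url = null;
  }

  async open(url) {
    this.url = url; // a real client would connect here
    return this;    // resolving to the instance is what allows the chained form
  }

  isOpen() {
    return this.url !== null;
  }
}

async function main() {
  // Chained: create and open in one expression.
  const chained = await new FakeClient().open('redis://localhost:6379');
  // Separate: create first, then open.
  const separate = new FakeClient();
  await separate.open('redis://localhost:6379');
  console.log(chained.isOpen(), separate.isOpen()); // true true
  return { chained, separate };
}

main();
```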

Entity, Schema, and Repository

Now that we have a client that's connected to Redis, we need to start mapping some persons. To do that, we need to define an Entity and a Schema. Let's start by creating a file named person.js in the om folder and importing client from client.js and the Entity and Schema classes from Redis OM:

import { Entity, Schema } from 'redis-om'
import client from './client.js'

Entity

Next, we need to define an entity. An Entity is the class that holds your data when you work with it: the thing being mapped to. It is what you create, read, update, and delete. Any class that extends Entity is an entity. We'll define our Person entity with a single line:

/* our entity */
class Person extends Entity {}

Schema

A schema defines the fields on your entity, their types, and how they are mapped internally to Redis. By default, entities map to JSON documents. Let's create our Schema in person.js:

/* create a Schema for Person */
const personSchema = new Schema(Person, {
  firstName: { type: 'string' },
  lastName: { type: 'string' },
  age: { type: 'number' },
  verified: { type: 'boolean' },
  location: { type: 'point' },
  locationUpdated: { type: 'date' },
  skills: { type: 'string[]' },
  personalStatement: { type: 'text' }
})

When you create a Schema, it modifies the Entity class you handed it (Person in our case), adding getters and setters for the properties you define. The types those getters and setters accept and return are defined with the type property, as shown above. Valid values are: string, number, boolean, string[], date, point, and text.

The first three do exactly what you think—they define a property that is a String, a Number, or a Boolean. string[] does what you'd think as well, specifically defining an Array of strings.

date is a little different, but still more or less what you'd expect. It defines a property that returns a Date and can be set using not only a Date but also a String containing an ISO 8601 date or a Number with the UNIX epoch time in milliseconds.
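The coercion rules for date fields can be sketched as a small function. toDate is hypothetical and only mirrors the behavior described above (a Date, an ISO 8601 string, or epoch milliseconds in; a Date out); it is not redis-om's code.

```javascript
// Sketch: coerce the accepted inputs of a `date` field into a Date.
// toDate is a hypothetical helper, not part of redis-om.
function toDate(value) {
  if (value instanceof Date) return value;
  if (typeof value === 'number') return new Date(value); // epoch milliseconds
  if (typeof value === 'string') {
    const date = new Date(value); // ISO 8601 string
    if (Number.isNaN(date.getTime())) throw new Error(`not a valid date: ${value}`);
    return date;
  }
  throw new TypeError(`cannot convert ${typeof value} to Date`);
}

// All three forms describe the same instant: 2022-01-01T00:00:00Z.
const fromDate = toDate(new Date(Date.UTC(2022, 0, 1)));
const fromIso = toDate('2022-01-01T00:00:00.000Z');
const fromEpochMs = toDate(1640995200000);
console.log(fromDate.getTime() === fromIso.getTime() && fromIso.getTime() === fromEpochMs.getTime()); // true
```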

A point defines a point somewhere on the globe as a longitude and a latitude. It creates a property that returns and accepts a simple object with the properties of longitude and latitude. Like this:

let point = { longitude: 12.34, latitude: 56.78 }

A text field is a lot like a string. If you're just reading and writing objects, they are identical. But if you want to search on them, they are very, very different. We'll talk about search more later, but the tl;dr is that string fields can only be matched on their whole value—no partial matches—and are best for keys while text fields have full-text search enabled on them and are optimized for human-readable text.
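The difference in matching behavior can be approximated in plain JavaScript. The sketch below is a simplification: real full-text search in RediSearch also applies stemming and stop-word removal, which are omitted here, and both helpers are hypothetical.

```javascript
// Sketch: whole-value matching (string) vs. word matching (text).
// Simplified: RediSearch also applies stemming and stop words.
function matchesString(fieldValue, query) {
  return fieldValue === query; // the whole value must match exactly
}

function tokenize(text) {
  return text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
}

function matchesText(fieldValue, query) {
  const words = new Set(tokenize(fieldValue));
  // Every word in the query must appear somewhere in the field.
  return tokenize(query).every((word) => words.has(word));
}

const statement = 'I like long walks on the beach';
console.log(matchesString('Joan Jett', 'Joan'));    // false: only part of the value
console.log(matchesText(statement, 'beach walks')); // true: words match in any order
```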

Repository

Now we have all the pieces that we need to create a repository. A Repository is the main interface into Redis OM. It gives us the methods to read, write, and remove a specific Entity. Create a Repository in person.js and make sure it's exported, as you'll need it when we start implementing our API:

/* use the client to create a Repository just for Persons */
export const personRepository = client.fetchRepository(personSchema)

We're almost done with setting up our repository. But we still need to create an index or we won't be able to search. We do that by calling .createIndex(). If an index already exists and it's identical, this function won't do anything. If it's different, it'll drop it and create a new one. Add a call to .createIndex() to person.js:

/* create the index for Person */

await personRepository.createIndex()

That's all we need for person.js and all we need to start talking to Redis using Redis OM. Here's the code in its entirety:

import { Entity, Schema } from 'redis-om'

import client from './client.js'

/* our entity */

class Person extends Entity {}

/* create a Schema for Person */

const personSchema = new Schema(Person, {

firstName: { type: 'string' },

lastName: { type: 'string' },

age: { type: 'number' },

verified: { type: 'boolean' },

location: { type: 'point' },

locationUpdated: { type: 'date' },

skills: { type: 'string[]' },

personalStatement: { type: 'text' }

})

/* use the client to create a Repository just for Persons */

export const personRepository = client.fetchRepository(personSchema)

/* create the index for Person */

await personRepository.createIndex()

Now, let's add some routes in Express.

Set up the Person Router

Let's create a truly RESTful API with the CRUD operations mapping to PUT, GET, POST, and DELETE respectively. We're going to do this using Express Routers as this makes our code nice and tidy. Create a file called person-router.js in the routers folder and in it import Router from Express and personRepository from person.js. Then create and export a Router:

import { Router } from 'express'

import { personRepository } from '../om/person.js'

export const router = Router()

Imports and exports done, let's bind the router to our Express app. Open up server.js and import the Router we just created:

/* import routers */

import { router as personRouter } from './routers/person-router.js'

Then add the personRouter to the Express app:

/* bring in some routers */

app.use('/person', personRouter)

Your server.js should now look like this:

import 'dotenv/config'

import express from 'express'

import swaggerUi from 'swagger-ui-express'

import YAML from 'yamljs'

/* import routers */

import { router as personRouter } from './routers/person-router.js'

/* create an express app and use JSON */

const app = express()

app.use(express.json())

/* bring in some routers */

app.use('/person', personRouter)

/* set up swagger in the root */

const swaggerDocument = YAML.load('api.yaml')

app.use('/', swaggerUi.serve, swaggerUi.setup(swaggerDocument))

/* start the server */

app.listen(8080)

Now we can add our routes to create, read, update, and delete persons. Head back to the person-router.js file so we can do just that.

Creating a Person

We'll create a person first, as you need to have persons in Redis before you can do any of the reading, writing, or removing of them. Add the PUT route below. This route will call .createAndSave() to create a Person from the request body and immediately save it to Redis:

router.put('/', async (req, res) => {

const person = await personRepository.createAndSave(req.body)

res.send(person)

})

Note that we are also returning the newly created Person. Let's see what that looks like by actually calling our API using the Swagger UI. Go to http://localhost:8080 in your browser and try it out. The default request body in Swagger will be fine for testing. You should see a response that looks like this:

{

"entityId": "01FY9MWDTWW4XQNTPJ9XY9FPMN",

"firstName": "Rupert",

"lastName": "Holmes",

"age": 75,

"verified": false,

"location": {

"longitude": 45.678,

"latitude": 45.678

},

"locationUpdated": "2022-03-01T12:34:56.123Z",

"skills": [

"singing",

"songwriting",

"playwriting"

],

"personalStatement": "I like piña coladas and walks in the rain"

}

This is exactly what we handed it with one exception: the entityId. Every entity in Redis OM has an entity ID which is—as you've probably guessed—the unique ID of that entity. It was randomly generated when we called .createAndSave(). Yours will be different, so make note of it.
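A side note on that ID. The IDs in this tutorial look like ULIDs (the Redis OM Python section later in this guide says that library generates ULIDs). Assuming Redis OM for Node.js does the same, only part of the ID is random: the first 10 characters encode the creation time in milliseconds. Here's a small sketch that decodes it; decodeUlidTime is a hypothetical helper.

```javascript
// Sketch assuming entity IDs are ULIDs: 26 Crockford base32 characters, where the
// first 10 encode a 48-bit millisecond timestamp and the remaining 16 are random.
const CROCKFORD = '0123456789ABCDEFGHJKMNPQRSTVWXYZ'

function decodeUlidTime(ulid) {
  if (typeof ulid !== 'string' || ulid.length !== 26) {
    throw new Error('A ULID has exactly 26 characters')
  }
  let time = 0
  for (const ch of ulid.slice(0, 10).toUpperCase()) {
    const digit = CROCKFORD.indexOf(ch)
    if (digit === -1) throw new Error(`Invalid ULID character: ${ch}`)
    time = time * 32 + digit // 48 bits fits safely in a Number (below 2^53)
  }
  return time
}

const createdAt = decodeUlidTime('01FY9MWDTWW4XQNTPJ9XY9FPMN')
console.log(new Date(createdAt).toISOString()) // 2022-03-16T15:17:31.100Z
```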

You can see this newly created JSON document in Redis with RedisInsight. Go ahead and launch RedisInsight and you should see a key with a name like Person:01FY9MWDTWW4XQNTPJ9XY9FPMN. The Person bit of the key was derived from the class name of our entity and the sequence of letters and numbers is our generated entity ID. Click on it to take a look at the JSON document you've created.

You'll also see a key named Person:index:hash. That's a unique value that Redis OM uses to see if it needs to recreate the index or not when .createIndex() is called. You can safely ignore it.
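The idea behind Person:index:hash can be sketched in a few lines: hash the index definition, compare it with the stored hash, and only rebuild when they differ. This is a hypothetical illustration. A Map stands in for Redis, and FNV-1a stands in for whatever hash Redis OM actually computes, which this tutorial doesn't describe.

```javascript
// Hypothetical sketch of "rebuild the index only if its definition changed".
// A Map stands in for Redis; FNV-1a stands in for Redis OM's real hash.
function fnv1a(text) {
  let hash = 0x811c9dc5
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i)
    hash = Math.imul(hash, 0x01000193) >>> 0 // 32-bit multiply, kept unsigned
  }
  return hash.toString(16)
}

function createIndexIfChanged(store, name, schemaDefinition) {
  const key = `${name}:index:hash`
  const newHash = fnv1a(JSON.stringify(schemaDefinition))
  if (store.get(key) === newHash) return 'unchanged'
  // This is where the old index would be dropped and a new one created.
  store.set(key, newHash)
  return 'rebuilt'
}

const store = new Map()
const schema = { firstName: { type: 'string' }, age: { type: 'number' } }
console.log(createIndexIfChanged(store, 'Person', schema)) // rebuilt
console.log(createIndexIfChanged(store, 'Person', schema)) // unchanged
```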

Reading a Person

With create done, let's add a GET route to read this newly created Person:

router.get('/:id', async (req, res) => {

const person = await personRepository.fetch(req.params.id)

res.send(person)

})

This code extracts a parameter from the URL used in the route—the entityId that we received previously. It uses the .fetch() method on the personRepository to retrieve a Person using that entityId. Then, it returns that Person.

Let's go ahead and test that in Swagger as well. You should get back exactly the same response. In fact, since this is a simple GET, we should be able to just load the URL into our browser. Test that out too by navigating to http://localhost:8080/person/01FY9MWDTWW4XQNTPJ9XY9FPMN, replacing the entity ID with your own.

Now that we can read and write, let's implement the REST of the HTTP verbs. REST... get it?

Updating a Person

Let's add the code to update a person using a POST route:

router.post('/:id', async (req, res) => {

const person = await personRepository.fetch(req.params.id)

person.firstName = req.body.firstName ?? null

person.lastName = req.body.lastName ?? null

person.age = req.body.age ?? null

person.verified = req.body.verified ?? null

person.location = req.body.location ?? null

person.locationUpdated = req.body.locationUpdated ?? null

person.skills = req.body.skills ?? null

person.personalStatement = req.body.personalStatement ?? null

await personRepository.save(person)

res.send(person)

})

This code fetches the Person from the personRepository using the entityId just like our previous route did. However, now we change all the properties based on the properties in the request body. If any of them are missing, we set them to null. Then, we call .save() and return the changed Person.
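One detail worth calling out is the ?? (nullish coalescing) operator. It only falls back to null when a value is null or undefined, so a request that sets verified to false or age to 0 keeps those values. Here's a minimal sketch of the same update logic against a plain object instead of a Redis OM entity; FIELDS and applyUpdate are hypothetical names.

```javascript
// Same update rule as the POST route, applied to a plain object.
const FIELDS = ['firstName', 'lastName', 'age', 'verified',
  'location', 'locationUpdated', 'skills', 'personalStatement']

function applyUpdate(person, body) {
  for (const field of FIELDS) {
    // ?? falls back only for null/undefined, so false, 0 and '' are kept as sent
    person[field] = body[field] ?? null
  }
  return person
}

const updated = applyUpdate({ firstName: 'Rupert', verified: true, age: 75 },
  { firstName: 'Rupert', verified: false, age: 0 })
console.log(updated.verified) // false
console.log(updated.age)      // 0
console.log(updated.lastName) // null (missing from the body)
```

Using || instead would quietly turn false and 0 into null, which is probably not what you want.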

Let's test this in Swagger too, why not? Make some changes. Try removing some of the fields. What do you get back when you read it after you've changed it?

Deleting a Person

Deletion—my favorite! Remember kids, deletion is 100% compression. The route that deletes is just as straightforward as the one that reads, but much more destructive:

router.delete('/:id', async (req, res) => {

await personRepository.remove(req.params.id)

res.send({ entityId: req.params.id })

})

I guess we should probably test this one out too. Load up Swagger and exercise the route. You should get back JSON with the entity ID you just removed:

{

"entityId": "01FY9MWDTWW4XQNTPJ9XY9FPMN"

}

And just like that, it's gone!

All the CRUD

Do a quick check of what you've written so far. Here's what should be the entirety of your person-router.js file:

import { Router } from 'express'

import { personRepository } from '../om/person.js'

export const router = Router()

router.put('/', async (req, res) => {

const person = await personRepository.createAndSave(req.body)

res.send(person)

})

router.get('/:id', async (req, res) => {

const person = await personRepository.fetch(req.params.id)

res.send(person)

})

router.post('/:id', async (req, res) => {

const person = await personRepository.fetch(req.params.id)

person.firstName = req.body.firstName ?? null

person.lastName = req.body.lastName ?? null

person.age = req.body.age ?? null

person.verified = req.body.verified ?? null

person.location = req.body.location ?? null

person.locationUpdated = req.body.locationUpdated ?? null

person.skills = req.body.skills ?? null

person.personalStatement = req.body.personalStatement ?? null

await personRepository.save(person)

res.send(person)

})

router.delete('/:id', async (req, res) => {

await personRepository.remove(req.params.id)

res.send({ entityId: req.params.id })

})

Preparing to search

CRUD completed, let's do some searching. In order to search, we need data to search over. Remember that persons folder with all the JSON documents and the load-data.sh shell script? Its time has arrived. Go into that folder and run the script:

cd persons

./load-data.sh

You should get a rather verbose response containing the JSON response from the API and the names of the files you loaded. Like this:

{"entityId":"01FY9Z4RRPKF4K9H78JQ3K3CP3","firstName":"Chris","lastName":"Stapleton","age":43,"verified":true,"location":{"longitude":-84.495,"latitude":38.03},"locationUpdated":"2022-01-01T12:00:00.000Z","skills":["singing","football","coal mining"],"personalStatement":"There are days that I can walk around like I'm alright. And I pretend to wear a smile on my face. And I could keep the pain from comin' out of my eyes. But sometimes, sometimes, sometimes I cry."} <- chris-stapleton.json

{"entityId":"01FY9Z4RS2QQVN4XFYSNPKH6B2","firstName":"David","lastName":"Paich","age":67,"verified":false,"location":{"longitude":-118.25,"latitude":34.05},"locationUpdated":"2022-01-01T12:00:00.000Z","skills":["singing","keyboard","blessing"],"personalStatement":"I seek to cure what's deep inside frightened of this thing that I've become"} <- david-paich.json

{"entityId":"01FY9Z4RSD7SQMSWDFZ6S4M5MJ","firstName":"Ivan","lastName":"Doroschuk","age":64,"verified":true,"location":{"longitude":-88.273,"latitude":40.115},"locationUpdated":"2022-01-01T12:00:00.000Z","skills":["singing","dancing","friendship"],"personalStatement":"We can dance if we want to. We can leave your friends behind. 'Cause your friends don't dance and if they don't dance well they're no friends of mine."} <- ivan-doroschuk.json

{"entityId":"01FY9Z4RSRZFGQ21BMEKYHEVK6","firstName":"Joan","lastName":"Jett","age":63,"verified":false,"location":{"longitude":-75.273,"latitude":40.003},"locationUpdated":"2022-01-01T12:00:00.000Z","skills":["singing","guitar","black eyeliner"],"personalStatement":"I love rock n' roll so put another dime in the jukebox, baby."} <- joan-jett.json

{"entityId":"01FY9Z4RT25ABWYTW6ZG7R79V4","firstName":"Justin","lastName":"Timberlake","age":41,"verified":true,"location":{"longitude":-89.971,"latitude":35.118},"locationUpdated":"2022-01-01T12:00:00.000Z","skills":["singing","dancing","half-time shows"],"personalStatement":"What goes around comes all the way back around."} <- justin-timberlake.json

{"entityId":"01FY9Z4RTD9EKBDS2YN9CRMG1D","firstName":"Kerry","lastName":"Livgren","age":72,"verified":false,"location":{"longitude":-95.689,"latitude":39.056},"locationUpdated":"2022-01-01T12:00:00.000Z","skills":["poetry","philosophy","songwriting","guitar"],"personalStatement":"All we are is dust in the wind."} <- kerry-livgren.json

{"entityId":"01FY9Z4RTR73HZQXK83JP94NWR","firstName":"Marshal","lastName":"Mathers","age":49,"verified":false,"location":{"longitude":-83.046,"latitude":42.331},"locationUpdated":"2022-01-01T12:00:00.000Z","skills":["rapping","songwriting","comics"],"personalStatement":"Look, if you had, one shot, or one opportunity to seize everything you ever wanted, in one moment, would you capture it, or just let it slip?"} <- marshal-mathers.json

{"entityId":"01FY9Z4RV2QHH0Z1GJM5ND15JE","firstName":"Rupert","lastName":"Holmes","age":75,"verified":true,"location":{"longitude":-2.518,"latitude":53.259},"locationUpdated":"2022-01-01T12:00:00.000Z","skills":["singing","songwriting","playwriting"],"personalStatement":"I like piña coladas and taking walks in the rain."} <- rupert-holmes.json

A little messy, but if you don't see this, then it didn't work!

Now that we have some data, let's add another router to hold the search routes we want to add. Create a file named search-router.js in the routers folder and set it up with imports and exports just like we did in person-router.js:

import { Router } from 'express'

import { personRepository } from '../om/person.js'

export const router = Router()

Import the Router into server.js the same way we did for the personRouter:

/* import routers */

import { router as personRouter } from './routers/person-router.js'

import { router as searchRouter } from './routers/search-router.js'

Then add the searchRouter to the Express app:

/* bring in some routers */

app.use('/person', personRouter)

app.use('/persons', searchRouter)

Router bound, we can now add some routes.

Search all the things

We're going to add a plethora of searches to our new Router. But the first will be the easiest as it's just going to return everything. Go ahead and add the following code to search-router.js:

router.get('/all', async (req, res) => {

const persons = await personRepository.search().return.all()

res.send(persons)

})

Here we see how to start and finish a search. Searches start just like CRUD operations start—on a Repository. But instead of calling .createAndSave(), .fetch(), .save(), or .remove(), we call .search(). And unlike all those other methods, .search() doesn't end there. Instead, it allows you to build up a query (which you'll see in the next example) and then resolve it with a call to .return.all().

With this new route in place, go into the Swagger UI and exercise the /persons/all route. You should see all of the folks you added with the shell script as a JSON array.

In the example above, the query is not specified—we didn't build anything up. If you do this, you'll just get everything. Which is what you want sometimes. But not most of the time. It's not really searching if you just return everything. So let's add a route that lets us find persons by their last name. Add the following code:

router.get('/by-last-name/:lastName', async (req, res) => {

const lastName = req.params.lastName

const persons = await personRepository.search()

.where('lastName').equals(lastName).return.all()

res.send(persons)

})

In this route, we're specifying a field we want to filter on and a value that it needs to equal. The field name in the call to .where() is the name of the field specified in our schema. This field was defined as a string, which matters because the type of the field determines the methods that are available to query it.

In the case of a string, there's just .equals(), which will query against the value of the entire string. This is aliased as .eq(), .equal(), and .equalTo() for your convenience. You can even add a little more syntactic sugar with calls to .is and .does that really don't do anything but make your code pretty. Like this:

const persons = await personRepository.search().where('lastName').is.equalTo(lastName).return.all()

const persons = await personRepository.search().where('lastName').does.equal(lastName).return.all()

You can also invert the query with a call to .not:

const persons = await personRepository.search().where('lastName').is.not.equalTo(lastName).return.all()

const persons = await personRepository.search().where('lastName').does.not.equal(lastName).return.all()

In all these cases, the call to .return.all() executes the query we build between it and the call to .search(). We can search on other field types as well. Let's add some routes to search on a number and a boolean field:

router.get('/old-enough-to-drink-in-america', async (req, res) => {

const persons = await personRepository.search()

.where('age').gte(21).return.all()

res.send(persons)

})

router.get('/non-verified', async (req, res) => {

const persons = await personRepository.search()

.where('verified').is.not.true().return.all()

res.send(persons)

})

The number query filters persons by age, returning those whose age is greater than or equal to 21. Again, there are aliases and syntactic sugar:

const persons = await personRepository.search().where('age').is.greaterThanOrEqualTo(21).return.all()

But there are also more ways to query:

const persons = await personRepository.search().where('age').eq(21).return.all()

const persons = await personRepository.search().where('age').gt(21).return.all()

const persons = await personRepository.search().where('age').gte(21).return.all()

const persons = await personRepository.search().where('age').lt(21).return.all()

const persons = await personRepository.search().where('age').lte(21).return.all()

const persons = await personRepository.search().where('age').between(21, 65).return.all()

The boolean query finds persons by their verification status. It already has some of our syntactic sugar in it. Note that this query will match a missing value or a false value. That's why I specified .not.true(). You can also call .false() on boolean fields, as well as all the variations of .equals():

const persons = await personRepository.search().where('verified').true().return.all()

const persons = await personRepository.search().where('verified').false().return.all()

const persons = await personRepository.search().where('verified').equals(true).return.all()
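If it helps to see what these queries select, here's a rough in-memory equivalent using plain array filters over a few of the persons you loaded. This only illustrates the matching rules described above; it is not how Redis OM or RediSearch executes a search. The last record is hypothetical and has no verified value, and treating between() as inclusive is an assumption.

```javascript
// In-memory illustration only, not how RediSearch evaluates queries.
// The last record is hypothetical and deliberately has no verified field.
const persons = [
  { lastName: 'Stapleton', age: 43, verified: true },
  { lastName: 'Paich', age: 67, verified: false },
  { lastName: 'Jett', age: 63, verified: false },
  { lastName: 'Holmes', age: 75, verified: true },
  { lastName: 'Hypothetical', age: 19 } // verified is missing
]

const lastNames = list => list.map(person => person.lastName)

// .where('age').gte(21)
const drinkers = persons.filter(p => p.age >= 21)
// .where('age').between(21, 65), treated as inclusive here (an assumption)
const workingAge = persons.filter(p => p.age >= 21 && p.age <= 65)
// .where('verified').is.not.true(): matches false and missing values
const notVerified = persons.filter(p => p.verified !== true)
// .where('verified').false(): the text implies a missing value is not matched
const verifiedFalse = persons.filter(p => p.verified === false)

console.log(lastNames(drinkers))      // [ 'Stapleton', 'Paich', 'Jett', 'Holmes' ]
console.log(lastNames(workingAge))    // [ 'Stapleton', 'Jett' ]
console.log(lastNames(notVerified))   // [ 'Paich', 'Jett', 'Hypothetical' ]
console.log(lastNames(verifiedFalse)) // [ 'Paich', 'Jett' ]
```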

So, we've created a few routes and I haven't told you to test them. Maybe you have anyhow. If so, good for you, you rebel. For the rest of you, why don't you go ahead and test them now with Swagger? And, going forward, just test them when you want. Heck, create some routes of your own using the provided syntax and try those out too. Don't let me tell you how to live your life.

Of course, querying on just one field is never enough. Not a problem, Redis OM can handle .and() and .or() like in this route:

router.get('/verified-drinkers-with-last-name/:lastName', async (req, res) => {

const lastName = req.params.lastName

const persons = await personRepository.search()

.where('verified').is.true()

.and('age').gte(21)

.and('lastName').equals(lastName).return.all()

res.send(persons)

})

Here, I'm just showing the syntax for .and() but, of course, you can also use .or().

Full-text search

If you've defined a field with a type of text in your schema, you can perform full-text searches against it. The way a text field is searched is different from how a string is searched. A string can only be compared with .equals() and must match the entire string. With a text field, you can look for words within the string.

A text field is optimized for human-readable text, like an essay or song lyrics. It's pretty clever. It understands that certain words (like a, an, or the) are common and ignores them. It understands how words are grammatically similar, so if you search for give, it matches gives, given, giving, and gave too. And it ignores punctuation.

Let's add a route that does full-text search against our personalStatement field:

router.get('/with-statement-containing/:text', async (req, res) => {

const text = req.params.text

const persons = await personRepository.search()

.where('personalStatement').matches(text)

.return.all()

res.send(persons)

})

Note the use of the .matches() function. This is the only one that works with text fields. It takes a string of one or more space-delimited words that you want to query for. Let's try it out. In Swagger, use this route to search for the word "walk". You should get the following results:

[

{

"entityId": "01FYC7CTR027F219455PS76247",

"firstName": "Rupert",

"lastName": "Holmes",

"age": 75,

"verified": true,

"location": {

"longitude": -2.518,

"latitude": 53.259

},

"locationUpdated": "2022-01-01T12:00:00.000Z",

"skills": [

"singing",

"songwriting",

"playwriting"

],

"personalStatement": "I like piña coladas and taking walks in the rain."

},

{

"entityId": "01FYC7CTNBJD9CZKKWPQEZEW14",

"firstName": "Chris",

"lastName": "Stapleton",

"age": 43,

"verified": true,

"location": {

"longitude": -84.495,

"latitude": 38.03

},

"locationUpdated": "2022-01-01T12:00:00.000Z",

"skills": [

"singing",

"football",

"coal mining"

],

"personalStatement": "There are days that I can walk around like I'm alright. And I pretend to wear a smile on my face. And I could keep the pain from comin' out of my eyes. But sometimes, sometimes, sometimes I cry."

}

]

Notice how the word "walk" is matched for Rupert Holmes' personal statement that contains "walks" and matched for Chris Stapleton's that contains "walk". Now search "walk raining". You'll see that this returns Rupert's entry only even though the exact text of neither of these words is found in his personal statement. But they are grammatically related so it matched them. This is called stemming and it's a pretty cool feature of RediSearch that Redis OM exploits.

And if you search for "a rain walk" you'll still match Rupert's entry even though the word "a" is not in the text. Why? Because it's a common word that's not very helpful with searching. These common words are called stop words and this is another cool feature of RediSearch that Redis OM just gets for free.
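To get a feel for why these searches match the way they do, here's a deliberately naive toy that ignores punctuation, drops a handful of stop words, strips a few suffixes, and requires every query term to be present. It is not RediSearch's tokenizer or stemmer: the stop-word list and suffix rules are made up for illustration, and real stemming handles far more (this toy won't map gave to give, for example).

```javascript
// Toy full-text matcher for illustration only; not RediSearch's algorithm.
const STOP_WORDS = new Set(['a', 'an', 'the', 'and', 'in', 'of', 'to'])
const SUFFIXES = ['ing', 'ed', 's']

// Crude stemmer: strip the first matching suffix if at least 3 letters remain
function stem(word) {
  for (const suffix of SUFFIXES) {
    if (word.endsWith(suffix) && word.length - suffix.length >= 3) {
      return word.slice(0, -suffix.length)
    }
  }
  return word
}

function terms(text) {
  return text
    .toLowerCase()
    .split(/[^\p{L}\p{N}]+/u) // split on anything that isn't a letter or digit
    .filter(word => word && !STOP_WORDS.has(word))
    .map(stem)
}

// Every (non-stop-word) query term must appear in the statement
function matches(statement, query) {
  const statementTerms = new Set(terms(statement))
  return terms(query).every(term => statementTerms.has(term))
}

const rupert = 'I like piña coladas and taking walks in the rain.'
const chris = "There are days that I can walk around like I'm alright."

console.log(matches(rupert, 'walk'))         // true  (walks -> walk)
console.log(matches(chris, 'walk'))          // true
console.log(matches(rupert, 'walk raining')) // true  (raining -> rain)
console.log(matches(chris, 'walk raining'))  // false (no "rain")
console.log(matches(rupert, 'a rain walk'))  // true  ("a" is a stop word)
```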

Searching the globe

RediSearch, and therefore Redis OM, supports searching by geographic location. You specify a point on the globe, a radius, and the units for that radius, and it'll gleefully return all the entities therein. Let's add a route to do just that:

router.get('/near/:lng,:lat/radius/:radius', async (req, res) => {

const longitude = Number(req.params.lng)

const latitude = Number(req.params.lat)

const radius = Number(req.params.radius)

const persons = await personRepository.search()

.where('location')

.inRadius(circle => circle

.longitude(longitude)

.latitude(latitude)

.radius(radius)

.miles)

.return.all()

res.send(persons)

})

This code looks a little different from the others because the circle we want to search is defined by a function that is passed into the .inRadius() method:

circle => circle.longitude(longitude).latitude(latitude).radius(radius).miles

All this function does is accept an instance of a Circle that has been initialized with default values. We override those values by calling various builder methods to define the origin of our search (i.e. the longitude and latitude), the radius, and the units that radius is measured in. Valid units are miles, meters, feet, and kilometers.

Let's try the route out. I know we can find Joan Jett at around longitude -75.0 and latitude 40.0, which is in eastern Pennsylvania. So use those coordinates with a radius of 20 miles. You should receive in response:

[

{

"entityId": "01FYC7CTPKYNXQ98JSTBC37AS1",

"firstName": "Joan",

"lastName": "Jett",

"age": 63,

"verified": false,

"location": {

"longitude": -75.273,

"latitude": 40.003

},

"locationUpdated": "2022-01-01T12:00:00.000Z",

"skills": [

"singing",

"guitar",

"black eyeliner"

],

"personalStatement": "I love rock n' roll so put another dime in the jukebox, baby."

}

]

Try widening the radius and see who else you can find.
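To predict who a bigger radius will pick up, you can compute great-circle distances yourself. This sketch uses the haversine formula with a mean Earth radius of 6,371 km. Redis uses its own Earth-radius constant, so a person sitting right at the edge of a radius might come out slightly differently. The coordinates are taken from the sample data loaded earlier.

```javascript
// Haversine great-circle distance in miles (mean Earth radius of 6,371 km assumed)
const EARTH_RADIUS_MILES = 6371 / 1.609344

function haversineMiles(a, b) {
  const toRad = deg => (deg * Math.PI) / 180
  const dLat = toRad(b.latitude - a.latitude)
  const dLon = toRad(b.longitude - a.longitude)
  const h = Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(a.latitude)) * Math.cos(toRad(b.latitude)) *
    Math.sin(dLon / 2) ** 2
  return 2 * EARTH_RADIUS_MILES * Math.asin(Math.sqrt(h))
}

const origin = { longitude: -75.0, latitude: 40.0 }
const people = {
  'Joan Jett': { longitude: -75.273, latitude: 40.003 },
  'Ivan Doroschuk': { longitude: -88.273, latitude: 40.115 },
  'Chris Stapleton': { longitude: -84.495, latitude: 38.03 }
}

for (const [name, location] of Object.entries(people)) {
  const miles = haversineMiles(origin, location)
  const note = miles <= 20 ? ' (within 20 miles)' : ''
  console.log(`${name}: ${miles.toFixed(1)} miles${note}`)
}
```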

Adding location tracking

We're getting toward the end of the tutorial here, but before we go, I'd like to add that location tracking piece that I mentioned way back in the beginning. This next bit of code should be easily understood if you've gotten this far as it's not really doing anything I haven't talked about already.

Add a new file called location-router.js in the routers folder:

import { Router } from 'express'

import { personRepository } from '../om/person.js'

export const router = Router()

router.patch('/:id/location/:lng,:lat', async (req, res) => {

const id = req.params.id

const longitude = Number(req.params.lng)

const latitude = Number(req.params.lat)

const locationUpdated = new Date()

const person = await personRepository.fetch(id)

person.location = { longitude, latitude }

person.locationUpdated = locationUpdated

await personRepository.save(person)

res.send({ id, locationUpdated, location: { longitude, latitude } })

})

Here we're calling .fetch() to fetch a person, we're updating some values for that person—the .location property with our longitude and latitude and the .locationUpdated property with the current date and time. Easy stuff.

To use this Router, import it in server.js:

/* import routers */

import { router as personRouter } from './routers/person-router.js'

import { router as searchRouter } from './routers/search-router.js'

import { router as locationRouter } from './routers/location-router.js'

And bind the router to a path:

/* bring in some routers */

app.use('/person', personRouter, locationRouter)

app.use('/persons', searchRouter)

And that's that. But this just isn't enough to satisfy. It doesn't show you anything new, except maybe the usage of a date field. And it's not really location tracking. It just shows where these people last were, with no history. So let's add some!

To add some history, we're going to use a Redis Stream. Streams are a big topic, but don't worry if you're not familiar with them. You can think of them as being sort of like a log file stored in a Redis key, where each entry represents an event. In our case, the event would be the person moving about or checking in or whatever.

But there's a problem. Redis OM doesn’t support Streams even though Redis Stack does. So how do we take advantage of them in our application? By using Node Redis. Node Redis is a low-level Redis client for Node.js that gives you access to all the Redis commands and data types. Internally, Redis OM is creating and using a Node Redis connection. You can use that connection too. Or rather, Redis OM can be told to use the connection you are using. Let me show you how.

Using Node Redis

Open up client.js in the om folder. Remember how we created a Redis OM Client and then called .open() on it?

const client = await new Client().open(url)

Well, the Client class also has a .use() method that takes a Node Redis connection. Modify client.js to open a connection to Redis using Node Redis and then .use() it:

import { Client } from 'redis-om'

import { createClient } from 'redis'

/* pulls the Redis URL from .env */

const url = process.env.REDIS_URL

/* create a connection to Redis with Node Redis */

export const connection = createClient({ url })

await connection.connect()

/* create a Client and bind it to the Node Redis connection */

const client = await new Client().use(connection)

export default client

And that's it. Redis OM is now using the connection you created. Note that we are exporting both the client and the connection. Got to export the connection if we want to use it in our newest route.

Storing location history with Streams

To add an event to a Stream we need to use the XADD command. Node Redis exposes that as .xAdd(). So, we need to add a call to .xAdd() in our route. Modify location-router.js to import our connection:

import { connection } from '../om/client.js'

And then in the route itself add a call to .xAdd():

...snip...

const person = await personRepository.fetch(id)

person.location = { longitude, latitude }

person.locationUpdated = locationUpdated

await personRepository.save(person)

let keyName = `${person.keyName}:locationHistory`

await connection.xAdd(keyName, '*', person.location)

...snip...

.xAdd() takes a key name, an event ID, and a JavaScript object containing the keys and values that make up the event, i.e. the event data. For the key name, we're building a string using the .keyName property that Person inherited from Entity (which will return something like Person:01FYC7CTPKYNXQ98JSTBC37AS1) combined with a hard-coded value. We're passing in * for our event ID, which tells Redis to just generate it based on the current time and previous event ID. And we're passing in the location (with properties of longitude and latitude) as our event data.

Now, whenever this route is exercised, the longitude and latitude will be logged and the event ID will encode the time. Go ahead and use Swagger to move Joan Jett around a few times.
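The IDs that * produces have the form <milliseconds>-<sequence>. When a new entry arrives in the same millisecond as the last one (or the clock appears to move backwards), Redis keeps the last entry's millisecond value and increments the sequence, so IDs always increase. Here's an in-memory sketch of that rule; a plain array stands in for the Stream, and nextStreamId and appendEvent are hypothetical names.

```javascript
// In-memory sketch of auto-generated Stream IDs: <milliseconds>-<sequence>.
function nextStreamId(lastId, nowMs) {
  if (lastId === null) return `${nowMs}-0`
  const [lastMs, lastSeq] = lastId.split('-').map(Number)
  // A newer millisecond starts a new sequence; otherwise reuse it and bump the sequence
  return nowMs > lastMs ? `${nowMs}-0` : `${lastMs}-${lastSeq + 1}`
}

function appendEvent(stream, data, nowMs = Date.now()) {
  const lastId = stream.length ? stream[stream.length - 1].id : null
  const id = nextStreamId(lastId, nowMs)
  stream.push({ id, data })
  return id
}

const history = []
console.log(appendEvent(history, { longitude: 45.678, latitude: 45.678 }, 1647536562911)) // 1647536562911-0
console.log(appendEvent(history, { longitude: 45.679, latitude: 45.679 }, 1647536562911)) // 1647536562911-1
console.log(appendEvent(history, { longitude: 45.680, latitude: 45.680 }, 1647536562900)) // 1647536562911-2
```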

Now, go into RedisInsight and take a look at the Stream. You'll see it there in the list of keys, but if you click on it, you'll get a message saying "This data type is coming soon!". If you don't get this message, congratulations, you live in the future! For us here in the past, we'll just issue the raw command instead:

XRANGE Person:01FYC7CTPKYNXQ98JSTBC37AS1:locationHistory - +

This tells Redis to get a range of values from the Stream stored at the given key name, Person:01FYC7CTPKYNXQ98JSTBC37AS1:locationHistory in our example. The next values are the starting event ID and the ending event ID: - is the beginning of the Stream and + is the end. So this returns everything in the Stream:

1) 1) "1647536562911-0"
   2) 1) "longitude"
      2) "45.678"
      3) "latitude"
      4) "45.678"
2) 1) "1647536564189-0"
   2) 1) "longitude"
      2) "45.679"
      3) "latitude"
      4) "45.679"
3) 1) "1647536565278-0"
   2) 1) "longitude"
      2) "45.680"
      3) "latitude"
      4) "45.680"

And just like that, we're tracking Joan Jett.
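If you later read this history back in code, each entry is an ID plus a flat list of field/value pairs, and the values come back as strings. Here's a small sketch that turns one entry from the output above into a timestamp and a location object. parseStreamEntry is hypothetical, and it assumes every field value is numeric.

```javascript
// Hypothetical parser for one XRANGE entry as printed by redis-cli above.
function parseStreamEntry(id, fields) {
  // Auto-generated IDs are <milliseconds>-<sequence>
  const [ms, seq] = id.split('-').map(Number)
  const data = {}
  for (let i = 0; i < fields.length; i += 2) {
    data[fields[i]] = Number(fields[i + 1]) // "45.678" -> 45.678 (assumes numeric values)
  }
  return { time: new Date(ms), sequence: seq, location: data }
}

const entry = parseStreamEntry('1647536562911-0',
  ['longitude', '45.678', 'latitude', '45.678'])
console.log(entry.time.toISOString()) // 2022-03-17T17:02:42.911Z
console.log(entry.location)           // { longitude: 45.678, latitude: 45.678 }
```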

Wrap-up

So, now you know how to use Express + Redis OM to build an API backed by Redis Stack. And you've got yourself some pretty decent starter code in the process. Good deal! If you want to learn more, you can check out the documentation for Redis OM. It covers the full breadth of Redis OM's capabilities.

And thanks for taking the time to work through this. I sincerely hope you found it useful. If you have any questions, the Redis Discord server is by far the best place to get them answered. Join the server and ask away!

1.3.3 - Redis OM Python

Learn how to build with Redis Stack and Python

Redis OM Python is a Redis client that provides high-level abstractions for managing document data in Redis. This tutorial shows you how to get up and running with Redis OM Python, Redis Stack, and the Flask micro-framework.

We'd love to see what you build with Redis Stack and Redis OM. Join the Redis community on Discord to chat with us about all things Redis OM and Redis Stack. Read more about Redis OM Python in our announcement blog post.

Overview

This application, an API built with Flask and a simple domain model, demonstrates common data manipulation patterns using Redis OM.

Our entity is a Person, with the following JSON representation:

{

"first_name": "A string, the person's first or given name",

"last_name": "A string, the person's last or surname",

"age": 36,

"address": {

"street_number": 56,

"unit": "A string, optional unit number e.g. A or 1",

"street_name": "A string, name of the street they live on",

"city": "A string, name of the city they live in",

"state": "A string, state, province or county that they live in",

"postal_code": "A string, their zip or postal code",

"country": "A string, country that they live in."

},

"personal_statement": "A string, free text personal statement",

"skills": [

"A string: a skill the person has",

"A string: another skill that the person has"

]

}

We'll let Redis OM handle generation of unique IDs, which it does using ULIDs. Redis OM will also handle creation of unique Redis key names for us, as well as saving and retrieving entities from JSON documents stored in a Redis Stack database.

Getting Started

Requirements

To run this application you'll need:

Get the Source Code

Clone the repository from GitHub:

$ git clone https://github.com/redis-developer/redis-om-python-flask-skeleton-app.git

$ cd redis-om-python-flask-skeleton-app

Next, we'll get a Redis Stack database up and running. If you're using Docker:

$ docker-compose up -d

Creating network "redis-om-python-flask-skeleton-app_default" with the default driver

Creating redis_om_python_flask_starter ... done

If you're using Redis Enterprise Cloud, you'll need the hostname, port number, and password for your database. Use these to set the REDIS_OM_URL environment variable like this:

$ export REDIS_OM_URL=redis://default:<password>@<host>:<port>

(This step is not required when working with Docker as the Docker container runs Redis on localhost port 6379 with no password, which is the default connection that Redis OM uses.)

For example if your Redis Enterprise Cloud database is at port 9139 on host enterprise.redis.com and your password is 5uper53cret then you'd set REDIS_OM_URL as follows:

$ export REDIS_OM_URL=redis://default:5uper53cret@enterprise.redis.com:9139

Create a Python Virtual Environment and Install the Dependencies

Create a Python virtual environment and install the project dependencies: Flask, Requests (used only in the data loader script) and Redis OM:

$ python3 -m venv venv

$ . ./venv/bin/activate

$ pip install -r requirements.txt

Start the Flask Application

Let's start the Flask application in development mode, so that Flask will restart the server for you each time you save code changes in app.py:

$ export FLASK_ENV=development

$ flask run

If all goes well, you should see output similar to this:

$ flask run

* Environment: development

* Debug mode: on

* Running on http://127.0.0.1:5000/ (Press CTRL+C to quit)

* Restarting with stat

* Debugger is active!

* Debugger PIN: XXX-XXX-XXX

You're now up and running and ready to perform CRUD operations on data with Redis, RediSearch, RedisJSON and Redis OM for Python! To make sure the server's running, point your browser at http://127.0.0.1:5000/, where you should see the application's basic home page.

Load the Sample Data

We've provided a small amount of sample data (it's in data/people.json). The Python script dataloader.py loads each person into Redis by posting the data to the application's "create a new person" endpoint. Run it like this:

$ python dataloader.py

Created person Robert McDonald with ID 01FX8RMR7NRS45PBT3XP9KNAZH

Created person Kareem Khan with ID 01FX8RMR7T60ANQTS4P9NKPKX8

Created person Fernando Ortega with ID 01FX8RMR7YB283BPZ88HAG066P

Created person Noor Vasan with ID 01FX8RMR82D091TC37B45RCWY3

Created person Dan Harris with ID 01FX8RMR8545RWW4DYCE5MSZA1

Make sure to take a copy of the output of the data loader, as your IDs will differ from those used in the tutorial. To follow along, substitute your IDs for the ones shown above. For example, whenever we are working with Kareem Khan, replace 01FX8RMR7T60ANQTS4P9NKPKX8 with the ID that your data loader assigned to Kareem in your Redis database.
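The repository's dataloader.py uses Requests and isn't reproduced here. The rough standard-library sketch below follows the same idea, with two assumptions: urllib.request stands in for Requests, and data/people.json holds a JSON array of person objects. It POSTs each person to the /person/new endpoint and prints the same "Created person ..." lines. It also returns a name-to-ID map, which is handy when substituting your own IDs. The function names are hypothetical.

```python
import json
import urllib.request

CREATE_URL = "http://127.0.0.1:5000/person/new"

def build_create_request(person: dict, url: str = CREATE_URL) -> urllib.request.Request:
    """Build the same POST that the curl example sends: a JSON body plus a Content-Type header."""
    return urllib.request.Request(
        url,
        data=json.dumps(person).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def post_person(person: dict) -> str:
    """Send one person to the app; the endpoint responds with the new ULID as plain text."""
    with urllib.request.urlopen(build_create_request(person)) as response:
        return response.read().decode("utf-8").strip()

def load_people(people, post=post_person) -> dict:
    """Create each person and return a {"First Last": id} map."""
    ids = {}
    for person in people:
        person_id = post(person)
        name = f"{person['first_name']} {person['last_name']}"
        print(f"Created person {name} with ID {person_id}")
        ids[name] = person_id
    return ids

def main(path: str = "data/people.json") -> dict:
    # Assumption: the file holds a JSON array of person objects.
    with open(path, encoding="utf-8") as f:
        return load_people(json.load(f))
```

With the Flask app running, calling main() from the project directory loads the sample data. Passing a different post function makes load_people easy to try without a server.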

Problems?

If the Flask server fails to start, take a look at its output. If you see log entries similar to this:

raise ConnectionError(self._error_message(e))

redis.exceptions.ConnectionError: Error 61 connecting to localhost:6379. Connection refused.

then you need to start the Redis Docker container if using Docker, or set the REDIS_OM_URL environment variable if using Redis Enterprise Cloud.

If you've set the REDIS_OM_URL environment variable, and the code errors with something like this on startup:

raise ConnectionError(self._error_message(e))

redis.exceptions.ConnectionError: Error 8 connecting to enterprise.redis.com:9139. nodename nor servname provided, or not known.

then you'll need to check that you used the correct hostname, port, password and format when setting REDIS_OM_URL.
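A malformed REDIS_OM_URL is easy to miss by eye. The standard-library sketch below is a hypothetical helper, not part of Redis OM. It splits the URL into its parts and raises ValueError for common mistakes, such as a missing redis:// scheme or a non-numeric port. It assumes the redis://default:<password>@<host>:<port> format used above.

```python
from urllib.parse import urlsplit

def describe_redis_om_url(url: str) -> dict:
    """Split a REDIS_OM_URL into its parts so typos are easy to spot."""
    parts = urlsplit(url)
    # rediss:// is the TLS variant that redis-py also accepts.
    if parts.scheme not in ("redis", "rediss"):
        raise ValueError(f"expected a redis:// URL, got scheme {parts.scheme!r}")
    if not parts.hostname:
        raise ValueError("no hostname found; expected redis://default:<password>@<host>:<port>")
    port = parts.port  # raises ValueError if the port is not a valid number
    return {
        "username": parts.username,
        "password_set": bool(parts.password),  # avoid echoing the password itself
        "host": parts.hostname,
        "port": port if port is not None else 6379,  # Redis default port
    }
```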

If the data loader fails to post the sample data into the application, make sure that the Flask application is running before running the data loader.
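Both connection errors above, and a failing data loader, usually mean nothing is listening on the expected host and port. The standard-library sketch below is a minimal pre-flight check (is_listening is a hypothetical helper). It can confirm that Redis (localhost port 6379 with Docker) and the Flask app (port 5000) are reachable before you run dataloader.py. It only tests that a TCP connection can be opened, not that the service behind it is healthy.

```python
import socket

def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection raises OSError (e.g. ConnectionRefusedError or a timeout) on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, is_listening("127.0.0.1", 6379) should return True once the Docker container is up, and is_listening("127.0.0.1", 5000) once flask run has started.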

Create, Read, Update and Delete Data

Let's create and manipulate some instances of our data model in Redis. Here we'll look at how to call the Flask API with curl (you could also use Postman), how the code works, and how the data's stored in Redis.

Building a Person Model with Redis OM

Redis OM allows us to model entities using Python classes and the Pydantic framework. Our person model is contained in the file person.py. Here are some notes about how it works:

- We declare a class Person which extends a Redis OM class JsonModel. This tells Redis OM that we want to store these entities in Redis as JSON documents.

- We then declare each field in our model, specifying the data type and whether or not we want to index on that field. For example, here's the age field, which we've declared as a positive integer that we want to index on:

age: PositiveInt = Field(index=True)

- The skills field is a list of strings, declared thus:

skills: List[str] = Field(index=True)

- For the personal_statement field, we don't want to index on the field's exact value, as it's a free text sentence rather than a single word or digit. Instead, we'll tell Redis OM that we want to be able to perform full text searches on the values:

personal_statement: str = Field(index=True, full_text_search=True)

- address works differently from the other fields. Note that in our JSON representation of the model, address is an object rather than a string or numerical field. With Redis OM, this is modeled as a second class, which extends the Redis OM EmbeddedJsonModel class:

class Address(EmbeddedJsonModel):
    # field definitions...

Fields in an EmbeddedJsonModel are defined in the same way, so our class contains a field definition for each data item in the address.

Not every field in our JSON is present in every address. Redis OM allows us to declare a field as optional, so long as we don't index it:

unit: Optional[str] = Field(index=False)

- We can also set a default value for a field. Let's say country should be "United Kingdom" unless otherwise specified:

country: str = Field(index=True, default="United Kingdom")

- Finally, to add the embedded address object to our Person model, we declare a field of type Address in the Person class:

address: Address
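Redis OM and Pydantic handle validation and storage for you. Purely to illustrate the model's shape, the sketch below mirrors the fields described above using standard-library dataclasses. This is a swapped-in technique, not how person.py is written. It includes a nested Address, an optional unit, a default country and a rough stand-in for PositiveInt on age. There's no indexing, primary key or Redis here.

```python
from dataclasses import asdict, dataclass
from typing import List, Optional

@dataclass
class Address:
    street_number: int
    street_name: str
    city: str
    state: str
    postal_code: str
    unit: Optional[str] = None        # optional, like unit: Optional[str] in the Redis OM model
    country: str = "United Kingdom"   # default, like Field(default="United Kingdom")

@dataclass
class Person:
    first_name: str
    last_name: str
    age: int
    address: Address
    personal_statement: str
    skills: List[str]

    def __post_init__(self):
        # Accept the nested address as a plain dict, as Person(**request.json) would receive it.
        if isinstance(self.address, dict):
            self.address = Address(**self.address)
        # Rough stand-in for pydantic's PositiveInt validation.
        if not isinstance(self.age, int) or self.age <= 0:
            raise ValueError("age must be a positive integer")
```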

Adding New People

The function create_person in app.py handles the creation of a new person in Redis. It expects a JSON object that adheres to our Person model's schema. The code to then create a new Person object with that data and save it in Redis is simple:

new_person = Person(**request.json)

new_person.save()

return new_person.pk

When a new Person instance is created, Redis OM assigns it a unique ULID primary key, which we can access as .pk. We return that to the caller, so that they know the ID of the object they just created.

Persisting the object to Redis is then simply a matter of calling .save() on it.

Try it out... with the server running, add a new person using curl:

curl --location --request POST 'http://127.0.0.1:5000/person/new' \

--header 'Content-Type: application/json' \

--data-raw '{

"first_name": "Joanne",

"last_name": "Peel",

"age": 36,

"personal_statement": "Music is my life, I love gigging and playing with my band.",

"address": {

"street_number": 56,

"unit": "4A",

"street_name": "The Rushes",

"city": "Birmingham",

"state": "West Midlands",

"postal_code": "B91 6HG",

"country": "United Kingdom"

},

"skills": [

"synths",

"vocals",

"guitar"

]

}'

Running the above curl command returns the unique ULID assigned to the newly created person, for example 01FX8SSSDN7PT9T3N0JZZA758G.

Examining the data in Redis

Let's take a look at what we just saved in Redis. Using RedisInsight or redis-cli, connect to the database and look at the value stored at key :person.Person:01FX8SSSDN7PT9T3N0JZZA758G. This is stored as a JSON document in Redis, so if using redis-cli you'll need the following command:

$ redis-cli

127.0.0.1:6379> json.get :person.Person:01FX8SSSDN7PT9T3N0JZZA758G

If you're using RedisInsight, the browser will render the key's value for you when you click on the key name.

When storing data as JSON in Redis, we can update and retrieve the whole document or just parts of it. For example, to retrieve only the person's address and first skill, use the following command (RedisInsight users should use the built-in redis-cli for this):

$ redis-cli

127.0.0.1:6379> json.get :person.Person:01FX8SSSDN7PT9T3N0JZZA758G $.address $.skills[0]

"{\"$.skills[0]\":[\"synths\"],\"$.address\":[{\"pk\":\"01FX8SSSDNRDSRB3HMVH00NQTT\",\"street_number\":56,\"unit\":\"4A\",\"street_name\":\"The Rushes\",\"city\":\"Birmingham\",\"state\":\"West Midlands\",\"postal_code\":\"B91 6HG\",\"country\":\"United Kingdom\"}]}"

For more information on the JSON Path syntax used to query JSON documents in Redis, see the RedisJSON documentation.
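When JSON.GET is given several JSONPath arguments, it replies with a single JSON object keyed by path. Each value is a list of everything that path matched, which is why the reply contains ["synths"] rather than "synths". The standard-library sketch below unpacks that reply in Python. It uses the reply shown above with the redis-cli escaping removed, and first_match is a hypothetical helper.

```python
import json

# The JSON.GET reply shown above, without redis-cli's escaping.
REPLY = '{"$.skills[0]":["synths"],"$.address":[{"pk":"01FX8SSSDNRDSRB3HMVH00NQTT","street_number":56,"unit":"4A","street_name":"The Rushes","city":"Birmingham","state":"West Midlands","postal_code":"B91 6HG","country":"United Kingdom"}]}'

def first_match(reply_json: str, path: str):
    """Return the first value matched by path in a multi-path JSON.GET reply, or None."""
    matches = json.loads(reply_json)[path]  # each path maps to a list of matches
    return matches[0] if matches else None

first_skill = first_match(REPLY, "$.skills[0]")
address = first_match(REPLY, "$.address")
print(first_skill, address["city"])  # prints: synths Birmingham
```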

Find a Person by ID

If we know a person's ID, we can retrieve their data. The function find_by_id in app.py receives an ID as its parameter, and asks Redis OM to retrieve and populate a Person object using the ID and the Person .get class method:

try:
    person = Person.get(id)
    return person.dict()
except NotFoundError:
    return {}

The .dict() method converts our Person object to a Python dictionary that Flask then returns to the caller.

Note that if there is no Person with the supplied ID in Redis, get raises a NotFoundError, which find_by_id catches and turns into an empty JSON object.

Try this out with curl, replacing 01FX8SSSDN7PT9T3N0JZZA758G with the ID of a person that you just created in your database:

curl --location --request GET 'http://localhost:5000/person/byid/01FX8SSSDN7PT9T3N0JZZA758G'

The server responds with a JSON object containing the user's data:

{

"address": {

"city": "Birmingham",

"country": "United Kingdom",

"pk": "01FX8SSSDNRDSRB3HMVH00NQTT",

"postal_code": "B91 6HG",

"state": "West Midlands",

"street_name": "The Rushes",

"street_number": 56,

"unit": "4A"

},

"age": 36,

"first_name": "Joanne",

"last_name": "Peel",

"personal_statement": "Music is my life, I love gigging and playing with my band.",

"pk": "01FX8SSSDN7PT9T3N0JZZA758G",

"skills": [

"synths",

"vocals",

"guitar"

]

}

Find People with Matching First and Last Name

Let's find all the people who have a given first and last name... This is handled by the function find_by_name in app.py.

Here, we're using Person's find class method, which is provided by Redis OM. We pass it a search query, specifying that we want to find people whose first_name field matches the value of the first_name parameter passed to find_by_name AND whose last_name field matches the value of the last_name parameter:

people = Person.find(
    (Person.first_name == first_name) &
    (Person.last_name == last_name)
).all()

.all() tells Redis OM that we want to retrieve all matching people.
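Redis OM turns that expression into a RediSearch query for you. The hand-rolled builder below gives a feel for what such a query looks like. It assumes first_name and last_name are indexed as TAG fields, which is an assumption about how Redis OM indexes these string fields. The exact string Redis OM generates may differ, for example in parenthesisation or escaping. In RediSearch syntax, @field:{value} matches a tag exactly, and space-separated clauses are combined with AND. All names here are hypothetical.

```python
# Punctuation and whitespace that RediSearch treats specially in tag queries;
# escaping a few extra characters is harmless, missing one is not.
TAG_SPECIAL_CHARS = set(",.<>{}[]\"':;!@#$%^&*()-+=~|/\\ ")

def escape_tag(value: str) -> str:
    """Backslash-escape characters that would otherwise break a tag query."""
    return "".join("\\" + ch if ch in TAG_SPECIAL_CHARS else ch for ch in value)

def tag_equals(field: str, value: str) -> str:
    """Exact-match clause on a TAG field, e.g. @first_name:{Kareem}."""
    return "@%s:{%s}" % (field, escape_tag(value))

def and_query(*clauses: str) -> str:
    """Space-separated clauses are intersected (AND) in RediSearch query syntax."""
    return " ".join(clauses)

query = and_query(tag_equals("first_name", "Kareem"), tag_equals("last_name", "Khan"))
```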

Try this out with curl as follows:

curl --location --request GET 'http://127.0.0.1:5000/people/byname/Kareem/Khan'

Note: First and last name are case sensitive.

The server responds with an object containing results, an array of matches:

{

"results": [

{

"address": {

"city": "Sheffield",

"country": "United Kingdom",

"pk": "01FX8RMR7THMGA84RH8ZRQRRP9",

"postal_code": "S1 5RE",

"state": "South Yorkshire",

"street_name": "The Beltway",

"street_number": 1,

"unit": "A"

},

"age": 27,

"first_name": "Kareem",

"last_name": "Khan",

"personal_statement": "I'm Kareem, a multi-instrumentalist and singer looking to join a new rock band.",

"pk": "01FX8RMR7T60ANQTS4P9NKPKX8",

"skills": [

"drums",

"guitar",

"synths"

]

}

]

}

Find People within a Given Age Range

It's useful to be able to find people that fall into a given age range... the function find_in_age_range in app.py handles this as follows...